Awhile back I talked about a possible test apocalypse and how you might respond to that. In honor of the current film Avengers: Endgame, I started thinking about this topic again. Here I’ll use the well-known “snap” of Thanos as my starting off point, trusting that this makes the article title a little more clear.

Instead of a Leftovers Rapture-style event as in my previous post, let’s consider that the Thanos Snap is responsible for decimating our tests, essentially removing half of them from existence.

Ironically, Thor’s look there might match that of testers who are terrified by this possibility.

However, I would argue that a good portion of that possible terror comes from treating tests as an artifact. I would further argue it comes from test cases being an artifact crutch. Because that’s often what test cases — as distinct from tests — become.

At this point I will recommend everyone read Michael Bolton’s series on Breaking the Test Case Addiction. It’s posted in a series of parts and each should be required reading for testers, whether you agree or disagree. I happen to strongly agree with that series.

However, I’m not going to talk overly much here about our reliance on test cases as an artifact versus tests as part of a process. Right now I don’t really have anything unique to say about that.

What I do want to talk about is that idea of half of our test cases disappearing. Would we be able to function? How compromised would we be? Maybe a better question to really be asking though is: how can we write tests robustly enough that even if many of them were snapped out of existence, we could still do good testing?

Yeah, but … isn’t that impossible, though? Wouldn’t we have to ensure a ton of redundancy — and thus duplication — in our test cases if we had to assume an extinction level event could befall them?

I will preface this right here and tell you that, like my previous post, I don’t plan on giving a lot of answers. In fact, I don’t plan on giving any answers at all. I’m simply proposing a thought experiment that I think it’s worth people playing around with.

Making Do With Lots of Tests

As we all know, for any reasonably complex application, the problems come in when you consider every possible combination of tests you can possibly run. Doing that and then multiplying them out by certain factors, such as browsers to test on, you get a combinatorial explosion. There are tools and techniques to help you combat this. Combinatorial tooling, using various algorithms, can apply a little math to help you figure the exact number of unique tests that are possible with some group of variables that you are considering.

And that’s interesting except when you run into the fact that we scope tests to abstraction layers.

So when I talk about a Test Snap here, you have to imagine half of your unit tests, your property tests, your contract tests, your service tests, your end-to-end tests, and so on and so forth. Half of that entire population has been decimated.

That’s one issue. The other issue is that even with that notion of tooling that looks at variables, you do have to consider something I talked about way back in 2014, regarding independent paths.

I won’t dig into all that here. I’m just reaffirming something all of us likely know: there are lots of possible tests. So an extinction level event of half of any of that is substantive.

Do Snaps Happen Anyway?

Speaking as test specialists for a moment, let’s consider that we have to identify independently testable categories within whatever domain we’re dealing with. The categories I would apply when testing clinical trial applications is quite a bit different from the categories that I apply when testing hedge fund trading applications. Both of those are practically identical, however, when I consider the categories I have to consider when testing games. All of that’s presumably obvious.

We also have to identify representative classes of values for each of the categories. Those tend to be valid paths through the application. Note that “representative” can still mean classes of values that are invalid. Those are invalid paths through the application. But they are representative in that they can happen and thus the application should deal with them. And, thus, of course, we should test that the application does in fact do so.

Note, if you will, how this gets us past talking about writing enough “happy path” or “sad path” tests to survive the snap. It also gets us past the harmful notion (in my opinion) of speaking of “positive tests” and “negative tests.”

Yet these delineations are, in fact, exactly how many testers try to reduce time under delivery constraints. They say, “Well, I’ll only run the happy path.” Or “Let’s just focus on the positive tests.”

So it’s kind of like a Thanos Snap happened anyway.

Incidentally, I should note that while identifying representative classes of values, it’s important — at least initially — to ignore interactions among values for different the categories. I’ll trust the reasons for this are obvious to test specialists. If you are not a test specialist and reading this, just know that, similar to any experiment, we have to minimize the number of dependent and independent variables that we are changing.

I should also note that a combination of values for each category corresponds to a way to frame testing for that area of the application. This is why tooling that helps us figure out combinations or even all-pairs is not quite enough.

After the above two identification points, it’s crucial to identify constraints. At the simplest level you can introduce constraints to determine what impossible combinations exist. But note that here is where testers often don’t test those combinations because the assumption is those combinations can’t or won’t happen. They are called “impossible”, after all, right?

Again, a Thanos Snap has happened.

We’ve immediately ruled out a set of tests. But, in fact, the constraints are just a guide. It’s telling you what shouldn’t happen. But what if it does? Do you know that it can’t? Do you know that it can’t under the widest possible variation of test and data conditions you can think of?

As with the representative values, a combination of values for the constraints for categories corresponds to a way of framing tests for that area of the application.

What I just listed there were some heuristics for how to frame tests and how to consider what you might be leaving out. You might be snapping out entire subsets of tests and barely realize you are doing so.

What I just listed there were some heuristics for how to frame tests and how to consider what you might be leaving out. You might be snapping out entire subsets of tests and barely realize you are doing so.

Okay, let’s step back a bit and get a little tangible.

Practice With Examples

In Avengers: Infinity War, Thanos tells Thor that “You should have gone for the head.” Definitely good advice. I didn’t want to be in the position where someone said to me “You should have gone for some examples.” So, to that end, let’s consider a few.

Consider the following screenshots from my Stardate Calculator application and ask yourself what each alone tells you about what to test.

Given the nature of the screenshots I’ve clearly given away some of the (hopefully obvious) game: testing around the TOS (The Original Series) and TNG (The Next Generation) series. But there are many other things you can be taking from those screenshots, minimal as they are.

So a question to ask would be: how many total test cases would you write? How would you write them robustly enough that if half disappeared in a Snap Event, you could still do effective testing?

Now consider my Warp Factor calculator application and ask yourself the same questions:

This one might seem a bit easier. But is it? Maybe it is. The question really hinges on how much information you need to gather — and thus how much testing you have to do — at a minimum to get enough information to make an informed assessment of risk.

You might notice with both of these examples that they hinge on something we all know: many of our bugs come not because we didn’t test a feature at all. Rather, they come in because we didn’t test the feature with enough variation, such as data conditions that would probe at certain edges and boundaries.

This does provide a way for thinking about how to make tests robust given that many of them may end being dusted by a Mad Titan who just seeks balance in the universe.

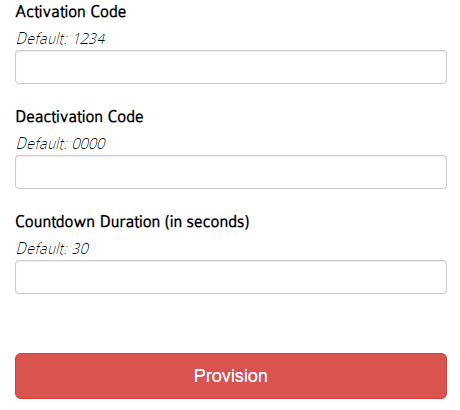

Let’s consider one more, a bit in line with my Thanos theme. Let’s say someone wrote an app for Thanos to construct his own Infinity Gauntlet with some parameters.

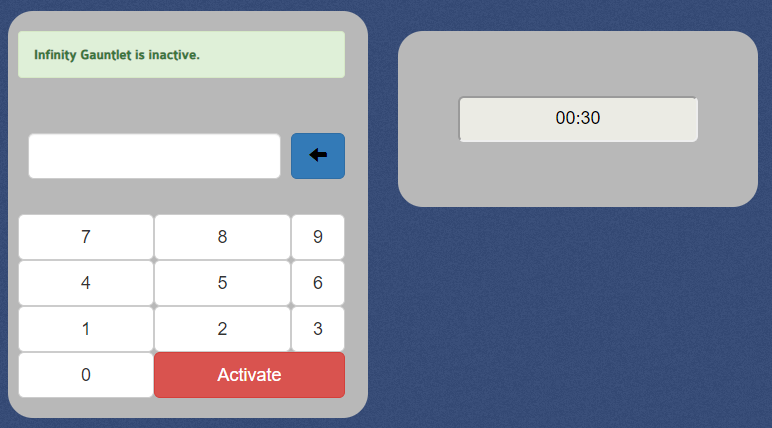

Once Thanos has done his configurations, he provisions his Gauntlet and has an interface:

So contrary to the previous two applications I showed you, this one is a bit more workflow focused. Thus, were a Snap Event to occur, you have to consider a bit of a wider lens around how you would try to determine a reasonable level of quality given the fact that now data conditions and test conditions are spread not just spatially but temporally.

Hint: I talk about spatial and temporal coupling in my plea for testability. The notion of abstraction layers matters there quite a bit, which is something I also mentioned above.

I can’t stress enough that it’s a good exercise to try to think up tests for the above applications and decide how you could write tests in such a way that a loss of many of them would not seriously impact your ability to determine if there was a reasonable level of demonstrable quality in place. While you do that keep in mind that artifact/process distinction.

My regular readers might recognize that I use Test Quest to help testers hone their intuitions around this.

The Snap Event is About Scope

One of the axioms that many people take into testing is that our effort devoted to testing should provide meaningful benefit without excessive cost. Thus we try to establish a positive balance between the benefits of testing and the cost of creating and maintaining those tests, including any tooling we put in place to help us with that creation and maintenance.

Practically speaking, we need to focus on testing at those places where we are most likely to make mistakes. Also practically speaking, we want testing to find those mistakes as quickly as possible.

In the book Building Microservices: Designing Fine-Grained Systems, a very good point about testing is brought up:

“It’s hard to have a balanced conversation about the value something adds versus the burden it entails when talking about testing as a whole. It can also be a difficult risk/reward trade-off. Do you get thanked if you remove a test? Maybe. But you’ll certainly get blamed if a test you removed lets a bug through.”

The author, Sam Newman, then goes on to say something that inspired a bit of this post beyond just our famous Avengers (where the bolded emphasis is mine):

“If the same feature is covered in 20 different tests, perhaps we can get rid of half of them, as those 20 tests take 10 minutes to run.”

Sam here is talking about so-called “larger-scoped, high-burden tests.” Yet someone could argue that such larger-scoped tests are exactly what we would need if we operate off of the assumption that a Snap Event could take out half of them. This goes back to my need for redundancy and thus duplication from earlier.

What this really means, however, is that we have to decide what “larger-scoped, high-burden” tests are. This will help us decide the right level of abstraction to focus on for tests, which goes back to what I mentioned regarding that we scope tests to abstraction layers.

For example, consider integration tests. What are we really testing with an integration test? The business rules? How the GUI, whether web or otherwise, behaves? How we communicate with external systems? How we publish our business rules as a web API? How we connect with third-party services that give us information? How we provide and utilize persistence mechanisms? The answer probably depends on how we define “integration.” But it’s fairly non-controversial to say that an integrated test suite goes across several problem domains or several layers of abstraction.

A point for me here is that thinking this way lets us ask powerful questions. For example: what makes it easier for developers to write highly interdependent modules that in turn become more difficult to test with the low-level tests? And which in turn forces us to write more tests at a higher abstraction? We are, in effect, snapping out entire subsets of tests because we simply feel they are too difficult to create and/or run.

And that kind of thinking and questioning lets us explore ideas like perhaps the energy devoted to writing complex tests for a lot of hard-to-test code could be channeled into making the code itself more testable, allowing simpler tests to be written. The same applies if we move up an abstraction layer to the user interface, such as those I showed above.

This changes the dynamic of how we consider a Snap Event.

Snap Events May Not Be Bad

Let me revisit a point I stated earlier. What I listed here were some heuristics for how to frame tests and how to consider what you might be leaving out. As I hope I showed, you might be snapping out entire subsets of tests and barely realize you are doing so.

Separate and distinct, but related to that, is the question I’ve been playing around with here. Assume you have lots of tests, perhaps applying the above heuristics, and a Thanos Snap happens. Half of your tests are gone.

How do you keep your test suite viable in that context? Or do you? Is it not even a fair question? After all, if half of anything that we rely on disappeared, it’s kind of hard to imagine how we might deal with it. It’s like asking how I will still get to work if half of my car disappeared.

Except that … as I think and hope I showed … we tend to do this all the time with tests. We exclude whole categories, either out of deliberation or ignorance. We enshrine testing (our process) as test cases (an artifact) and then count on those artifacts to see us through. Yet sometimes we don’t run them all. Or they are flaky so we just re-run them until they either work or we treat them as part of some percentage that it’s “okay” to ignore.

Or we deal with poor testability by bringing in more harder to maintain (higher-level, slower-running) tests at the expense of easier to maintain (lower-level, faster running) tests under the assumption that the former are simply too difficult. Yet having many more of something that is relatively small often makes surviving extinction level events more viable. (Ask the mammals and dinosaurs how that worked out, for example, about 66 million years ago. Each would have quite different answers.)

Just like controlled fires that are set to make sure unplanned fires don’t get out of control, maybe thinking in terms of a Snap Event is a way for us to not let our test cases get out of control, which forces us to put more emphasis on the process (testing) than the artifact (test cases).

What Kind of Snap?

Again, all of this is my way of saying: let’s think about it.

How much testing do we really need to do? How much variation in data conditions do we have to apply? How much breadth and depth is required? How much time are we spending determining if things work versus determining how and where they might be degrading? How — truly — does our code coverage relate to our test coverage? How — truly — does our feature coverage relate to our test coverage?

And perhaps implicit in all of this: how much am I scared that my artifacts will disappear and I won’t be able to test? That I won’t be able to know my coverage? That I won’t know how much variation I’m actually applying?

Answering these questions is interesting in light of the fact that nothing in your brain has disappeared. You, as a human, still know how to test. You, as a human, can still explore the application and put it to the test. You, as a human, can help people understand coverage.

And all of that leads us to: How much of what you as a human are doing needs — truly needs — to be encoded as tests?

Just keep in mind, even if there is a Test Snap, that doesn’t imply that there’s a Testing Snap.