For this post I’m going to be giving you all the commands you need to run algorithms through Pacumen. But I will note that the readme file of the Pacumen project does provide some context for you should you choose to play around with the project. You can also reference my exploring testing and AI post for more details on how to use Pacumen.

Let’s take stock of our context first.

Our Context

Many problems in AI can be solved, at least in theory, by intelligently searching through many possible solutions. This means reasoning can be reduced to performing a search and that’s why I’ve focused on search problems during these posts. What I covered in the last couple of posts was the basics of search algorithms. We started with depth first search, moved on to breadth first search, then uniform cost search, until finally we got to A* search. I mainly looked at these search algorithms as a tree search but also stepped a bit into the idea of a graph search.

So if we were doing this in the context of our development shop, our goal would be to implement these search algorithms and test them out. Let’s say we’re in the business of providing effective and efficient machine learning to our customers to better help them understand aspects of their business. Many of their problems are search problems and so we are testing our ideas on the Pac-Man abstraction.

Thus at some point it would be time for the developers to write some search functions to help the pacman agent plan routes through the maze. All of these search functions would essentially be returning a list of actions that will lead pacman from the start state to the goal state. These actions all have to be legal moves. That means valid directions only and no moving through walls.

So what are you thinking as a tester?

Whose Testing Is This, Anyway?

You might be asking: “Well, who actually does this testing? It seems like the testing is so tied to the implementation that this might be programmer testing.” If you feel that, trust me, you are part of the problem!

Kidding. But only slightly. What I led you towards here was a common sentiment in the industry that feels any “technical” testing is solely in the province of the developer; test specialists need not apply. This is false. But it is a persistent sentiment and it’s one that many testers reinforce, even if such is not their intention.

If you want to play along, I recommend you get the Pacumen repo, specifically the search-algorithms branch. In the search.py file you’ll find the following methods filled in:

depth_first_searchbreadth_first_searchuniform_cost_searchastar_search

You should feel free to experiment with these. I’ll show you how to run them in a moment. But in terms of the experimenting, as a simple example, you could put in print statements to figure out what’s going on. You might want to try some simple commands to understand the search problem that’s being passed in. Consider the following statements you could add:

|

1 2 3 4 5 6 7 8 9 10 11 |

def depth_first_search(problem): start_node = problem.get_start_state() print("Start:", start_node) print("Is the start a goal?", problem.is_goal_state(start_node)) print("Successors from start:", problem.get_successors(start_node)) children = problem.get_successors(problem.get_start_state()) print("Children node count: ", len(children)) print("Successors of (5,4):", problem.get_successors((5, 4))) ... |

What you are doing here is using the API that is provided by Pacumen to call actions like is_goal_state, get_successors and so on. If you ran this search with the tiny_maze, here’s what you would get as output:

Start: (5, 5) Is the start a goal? False Successors from start: [((5, 4), 'South', 1), ((4, 5), 'West', 1)] 2 Successors of (5,4): [((5, 5), 'North', 1), ((5, 3), 'South', 1)]

Integration or Integrated?

What we have here is the basis of an integrated versus an integration aspect of test design. Here you are learning information by querying the system itself, similar to how a developer might do it but at a slightly different level of abstraction. You’re aren’t checking the integration of the classes that provide you this information: you are checking the integrated nature of what those classes can tell you about the quality of the system. This is part of the integration pact I wrote about before.

Tester as Explorer

So let’s get to work!

One thing I hope you were able to glean, even if only in broad strokes, from my posts leading to this one is that the algorithms of depth first search, breadth first search, uniform cost search, and A* differ only in the selection of the next node to be expanded, meaning how the fringe is explored.

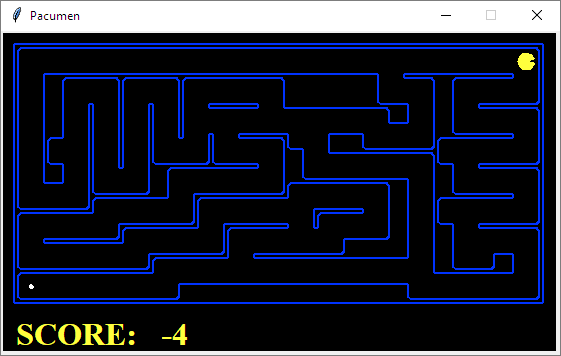

We’re going to run those algorithms which I have provided in the above-mentioned branch. What I want you to do is think about what you expect to see prior to running the commands I provide you. I’ll also note that while executing the search strategies, the pacman board will show an overlay of the states explored and the order in which they were explored. A brighter red means earlier exploration. Here’s an idea of what that looks like:

Finally, I’ll also note that if you want to slow pacman down so you can see more of what’s happening, you can add the following to any of the commands given here: --frameTime 0.2. You can choose a number that works for you.

Let’s consider our mazes for the purposes of this experiment.

Tiny Maze

Medium Maze

Big Maze

Searches

I hope you’ve read through the previous posts because what I’m going to ask you to do now is run some tests. But I want you to think about what you expect to see from these tests. These testes are made up of the algorithms that we talked about. Each algorithm is going to explore the above mazes in a different way. At the end you are going to get output from each execution.

This output will tell you the total cost incurred, the number of nodes expanded during the search, and the final score of the game. Keep in mind that there is a living reward of -1. So each time the pacman agent moves, the score goes down by 1. Eating a dot gives 10 points and, in these mazes, there is only one dot. Winning the game — which means eating the sole dot — gives you 500 points.

What search algorithms do you expect to fare better than the others? Being able to answer this is part of understanding the business domain and that’s what the previous two posts were about.

Tester as Experimenter

Here are the commands. We’ll break these up by the maze type, which could be considered the test suite. Each command runs an algorithm. The type of algorithm is passed in at the end of the command. The A* search can also be provided with a heuristic.

Here are the commands for tiny_maze:

python pacman.py -l tiny_maze -p SearchAgent -a fn=dfs

python pacman.py -l tiny_maze -p SearchAgent -a fn=bfs

python pacman.py -l tiny_maze -p SearchAgent -a fn=ucs

python pacman.py -l tiny_maze -p SearchAgent -a fn=astar

python pacman.py -l tiny_maze -p SearchAgent -a fn=astar,heuristic=euclidean_heuristic

python pacman.py -l tiny_maze -p SearchAgent -a fn=astar,heuristic=manhattan_heuristic

And those for medium_maze:

python pacman.py -l medium_maze -p SearchAgent -a fn=dfs

python pacman.py -l medium_maze -p SearchAgent -a fn=bfs

python pacman.py -l medium_maze -p SearchAgent -a fn=ucs

python pacman.py -l medium_maze -p SearchAgent -a fn=astar

python pacman.py -l medium_maze -p SearchAgent -a fn=astar,heuristic=euclidean_heuristic

python pacman.py -l medium_maze -p SearchAgent -a fn=astar,heuristic=manhattan_heuristic

And, finally, for big_maze:

python pacman.py -l big_maze -z .6 -p SearchAgent -a fn=dfs

python pacman.py -l big_maze -z .6 -p SearchAgent -a fn=bfs

python pacman.py -l big_maze -z .6 -p SearchAgent -a fn=ucs

python pacman.py -l big_maze -z .6 -p SearchAgent -a fn=astar

python pacman.py -l big_maze -z .6 -p SearchAgent -a fn=astar,heuristic=euclidean_heuristic

python pacman.py -l big_maze -z .6 -p SearchAgent -a fn=astar,heuristic=manhattan_heuristic

Tester as Observer

What have you observed?

Your observations here are going to speak very much to the quality of the proposed solutions. And gaining insight into quality is exactly why you are working as a test specialist.

Even if you were totally clueless about what was going on initially, and didn’t read my previous posts, executing the commands gave you a running system and output. That output shows you a pattern. Those patterns should tell you something. So what did you observe? What would you report?

Even without knowing what those different algorithms are, even if you didn’t read the previous posts, that would not stop you at all from being able to execute the above tests and make observations about the system.

So here’s where we close off this series of posts. You’ve come a long way here. We started out with a general context: exploring the role of testers in the context of artificial intelligence and machine learning. Then we looked at a specific implementation of a possible project (Pacumen). Then we get slightly more than knee deep in the waters of algorithms. Finally we ended up here: with you able to run examples and see what kind of observations you would make.

I hope this was an interesting series of posts. I plan to revisit these ideas in more and varied contexts in the future.

Thank you for the series and would love to see more of this from you in near future. Thumbs up.