In my post Forgetting How to Test, I said “we are at a time where forgetting how to test is not just a technical dilemma, but an ethical and moral dilemma.” I still believe that. Here I’ll try to show a bit of how test thinking should lead us inexorably to that idea.

This post will be somewhat in line with my “Testing is Like…” concept. I also think this post will serve as yet another example of what I talked about when I framed the question-as-statement “So, you want to be a tester?”

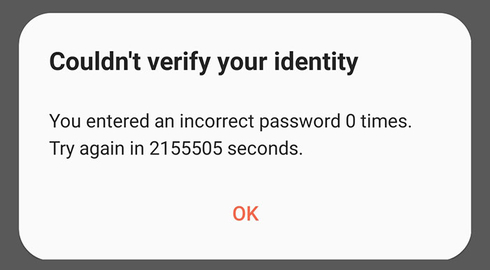

When you want to be a software tester, you operate in a world where it feels like there must be some key on the keyboard like the above. This is because we seem to be awash in blunders, oversights, and errors — essentially mistakes.

Testing is Like Thermodynamics

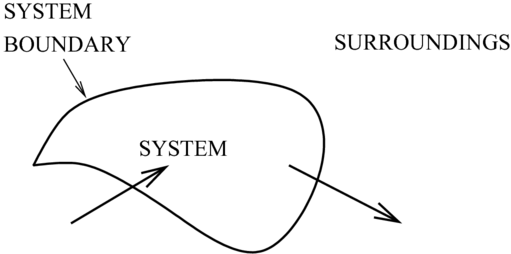

In thermodynamics, you have a system of some sort that has a boundary. There are inputs and there are outputs.

That’s pretty much what we deal with in testing all the time. But that’s a simplistic enough analogy that it’s borderline useless. Yet in his book Einstein’s Fridge: How the Difference Between Hot and Cold Explains the Universe, Paul Sen says:

“Thermodynamics is a dreadful name for what is arguably the most useful and universal scientific theory ever conceived.”

His point is that the word seems to suggest a very narrow area of applicability, usually associated only with heat. But, as he correctly notes, thermodynamics is “more broadly a means of making sense of our universe.”

And this is a challenge I feel testing comes up against. Testing is often associated with a very narrow area of applicability: someone gives you something and you test it. That implies a lot, right? It implies there was no testing done (or perhaps even possible) before you were given something. It fails to recognize that, like thermodynamics, testing is a multi-faceted discipline that, at minimum, requires thinking of testing as both a design activity and an execution activity.

What this means is, just as in thermodynamics, our surrounding context matters. And there’s a wide universe out there beyond the thing we’re dealing with:

That wider universe for testing includes the business domain, the requirements (those stated and those only implied), the design thinking that goes into providing a user experience, and so on.

Understanding thermodynamics means understanding energy, entropy, and temperature. Those are three concepts that underlie the science of thermodynamics. For testing you have to understand investigation, exploration, and experimentation. A challenge is that those sound like easy words. They sound like something people can just do, right? “Oh, you want me to investigate? Sure, I’ll do that.”

But each of those concepts has just as much heft as do energy, entropy, and temperature.

Testing is Like Zoology

Contrary to what a lot of people might think, physicists don’t just spend their time identifying and classifying particles. The same could be said of, say, zoologists. They don’t just sit there all day identifying and classifying various animals. Someone with a scientific focus attempts to explain what they see in the world around them. So let’s stick with zoology a bit.

Zoology, and in a wider context the whole discipline of evolutionary biology, is focused on proposing mechanisms for explaining the nature of life. Yet a lot of people think that such specialities just focus on coming up with stuff like this:

And likewise in testing, the idea is that testers just come up with lists of test cases and associate those with lists of requirements and those then can get associated with bugs.

In other words, it’s purely a classification exercise for the most part.

Arik Kershenbaum, in his book The Zoologist’s Guide to the Galaxy: What Animals on Earth Reveal about Aliens – and Ourselves, says:

“Why do lions live in prides, but tigers hunt alone? Why do birds have only two wings? Why, for that matter, do the vast majority of animals have a left side and a right side?”

What Arik is suggesting is that the goal here isn’t just to observe the above aspects but rather to start formulating and deriving what might be termed the “rules for life.” This is no different than how astronomers do the same for planets and stars and how physicists do the same for particles and forces. This is no different than what specialist testers do when determining the “rules for quality” on any given project.

In both cases, this requires creative thought to understand how things are constructed, how they seem to work based on how they are constructed, how they work optimally or (usually) sub-optimally.

A key thing is that if those biological (or astronomical or physical) rules are universal rules, they will work just as well on another planet as they do here on Earth. And that’s important. The same applies to how we view our applications. Yes, there is a massively wide diversity in the types of applications and how they work. But, fundamentally, they are all using roughly the same “building blocks of life”, as it were.

This goes along with the following from Arik’s book:

“Most people are confident that the laws of physics and chemistry are unequivocal and universal. They work here on Earth just as they would do on any exoplanet. Predictions that we make here about how physical and chemical materials will behave in different circumstances, are going to be good predictions about how those same materials would behave in those same circumstances in other parts of the universe.”

The underlying thought there is really crucial. We absolutely rely on science to work in this way and it’s why (for the most part) we trust what science tells us. The same applies to testing. There are many good and valid reasons for why we believe in the scientific method. And since testing is predicated on the scientific method, there should be equally good and valid reasons for why we believe that testing is necessary.

Yet one thing is interesting in the above discussion of the various sciences, which is that planets are pretty rigid and predictable in how they act, whereas we could argue biology is pretty much the exact opposite.

A physicist understands exactly how a ball rolls down a hill, and can give you a set of equations you can use to predict the motion of balls on hills everywhere in the universe. Physics experiments rely on highly controlled and simplified conditions. Which is pretty much not at all what we find in the biological world.

The challenge of testing in the software and hardware context is that it lies at an intersection of the physical and biological worlds. By which I mean what we test is ultimately software running on some hardware. But that software is written by human beings. And that software depends on other software, also written by human beings.

Where that all becomes really interesting is that human beings are subject to many cognitive theories of error when it comes to just about anything, but certainly when thinking about and building complex things. This means humans make mistakes. And it’s those mistakes that, as specialist testers, we are most interested in.

Testing is Like an Intuition Pump

Those ideas of theory of error and humans making mistakes lead us to this interesting idea:

“We philosophers are mistake-specialists. While other disciplines specialize in getting the right answers to their defining questions, we philosophers specialize in all the ways there are of getting things so mixed up, so deeply wrong, that nobody is even sure what the right questions are, let alone the answers.”

Replace “philosophers” with “specialist testers.” Sound good? That comes from Daniel Dennett in his book Intuition Pumps and Other Tools for Thinking.

I believe that sentiment is very relevant for the discipline of testing where you often have to help people ask the right questions in the first place. This is as opposed to providing them answers. This allows you to shade from providing just test results to providing the means by which an informed assessment of risk can be achieved. This is how you move from simple metrics like “test coverage” to more viable metrics like “feature coverage.”

The idea of the “intuition pump” is that you don’t frame arguments, you frame stories or narratives. Thus instead of aiming to provide a conclusion, you provide a “pump” to intuition.

I talked before about how testers need an intuition for abstraction, and what I’m saying here is pretty much what I meant there.

What I’m describing here is very much a thinking tool, which is one of the best testing tools you can bring to bear when testing complex things. Thinking tools, like an intuition pump, are, in Dennett’s words, “handy prosthetic imagination-extenders and focus-holders that permit us to think reliably and even gracefully about really hard questions.”

Really hard questions … like “How do we actually build this thing so that it provides value to our users and makes it easier for us to evolve it as our users’ needs change?”

Testing is Like Experimenting

Testers are certainly detectives, to an extent, but even more fundamentally they are experimenters. They are dealing with an experiment that is predicated on the idea of: “I need to understand this thing and how it works. I want to provide an informed assessment of how it works to other people.”

As such testers are usually dealing with a hypothesis. The hypothesis could be: “This thing probably works great and I just need to show that to people.”

But the hypothesis really should be “Something is probably wrong with this thing I’m figuring out and I need to help people understand where it’s wrong.” Along the way, of course, the tester will find that some aspects are exactly right! But the goal is to verify their hypothesis that there is probably something wrong.
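To make the difference between those two hypotheses a bit more concrete, here is a minimal sketch in Python. Everything in it is invented for illustration: `apply_discount` is a hypothetical function under test, and `find_counterexample` and `price_in_range` are hypothetical helpers, not part of any real library. The sketch runs the same search twice: once over inputs chosen to show the thing works, and once over inputs chosen on the assumption that something is probably wrong.

```python
def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical function under test: price after a percentage discount."""
    return price_cents - price_cents * percent // 100


def find_counterexample(func, inputs, holds):
    """Return the first input tuple for which the property fails, else None."""
    for args in inputs:
        if not holds(args, func(*args)):
            return args
    return None


def price_in_range(args, result):
    """Assumed property: a discounted price is never negative
    and never higher than the original price."""
    price_cents, _percent = args
    return 0 <= result <= price_cents


# "This probably works great": only inputs we expect to behave.
happy_path = [(1000, 10), (2500, 50)]

# "Something is probably wrong": also probe boundaries and odd values.
wider_probe = happy_path + [(1000, 0), (1000, 100), (1000, 150), (0, 10)]

confirming = find_counterexample(apply_discount, happy_path, price_in_range)
refuting = find_counterexample(apply_discount, wider_probe, price_in_range)

print("happy path counterexample:", confirming)
print("wider probe counterexample:", refuting)
```

The happy-path run finds nothing, which is easy to mistake for evidence of quality. The wider probe turns up a discount above 100 percent that yields a negative price, and that in turn is a question to take back to the team: should that input even be possible?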

Conducting experiments means conducting tests. Like any good experimenter, a tester has to bring a series of techniques to their work to conduct tests along a variety of aspects: performance, behavior, security, usability, and so on. A good experimenter knows when to rely on tools but also knows the limits of those tools and what they can provide.

Since testers are obviously dealing with the creations of human beings, this means testers, as experimenters, must also have a good theory of error. They understand how and why humans make mistakes when building complex things.

This means testers — the really good ones; the specialists — understand human biases and how they come about in creative endeavors, such as writing software.

That knowledge of error and bias informs the techniques that testers use as part of their experiments. It also allows testers to work with other team members to try and find errors as close as possible to when they are introduced.

We make our biggest mistakes at two points in software: when talking about what to build and then when actually building it. So if testers only engage after something is built, they are already well behind the cost-of-mistake curve. Thus, as I hope this path has shown, much of testing really does come down to specializing in the understanding of mistakes: how they are made, how they propagate, how they avoid being found (often for disturbingly long periods of time). This is why specialist testers put as much, if not more, stock in books like The Logic of Failure and To Engineer is Human than they do in books about automation or general software testing.

The Social Context of Testing

Regardless of where we are, or what we do in our day-to-day lives, we are all part of societies that are coming to rely on technology more and more, as it infuses itself into our recreation, our professions, our debates, our politics, our monetary systems, our homes, our transportation, and even into our bodies.

As such — and I do very much believe what I say next — we have an ethical and moral mandate to do our best to work with others to help make sure that the world we’re building is capable of absorbing and responding to these technologies, particularly when they do something they shouldn’t and thus when mistakes are made.

Specialist testers are in a particularly good spot to be champions of and evangelists for the responsible application of that mandate.