In our testing industry we’ve borrowed ideas from the physics realm to give ourselves some glib phrases. For example, you’ll hear about “Schrödinger tests” and “Heisenbugs.” It’s all in good fun but, in fact, the way that physics developed over time has a great many parallels with our testing discipline. I already wasted people’s precious time talking about what particle physics can teach us about testing. But now I’m going to double down on that, broaden the scope a bit, and look at a wider swath of physics.

So let’s start at the seventeenth century. It was in this century (1687, to be exact) that a guy named Isaac Newton listed some laws for the motion of particles experiencing a force. That subject was initially called Newtonian mechanics and, eventually, after some radical new developments that I’ll talk about later, came to be called classical mechanics.

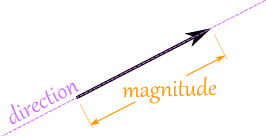

In Newtonian mechanics, particles are characterized fairly simply by a mass (how much they “weigh”, basically) and two vectors that evolve in time. If you’re not too sure what I mean by a vector, just think of a line with an arrow.

That right there is pretty much all there is to a vector. Testing is often about keeping things simple, right?

KISS: Keep It Simple (Sort of)

A vector has a size — usually referred to as magnitude — and a direction. In the case of those two Newtonian mechanics vectors, one vector gives the position of the particle, the other provides its momentum. So what does this mean? It means that given a force acting on a particle, you can solve for the evolution in time of the position and momentum vectors. Uh huh. Whatever. Yeah, that’s a mouthful. Putting that more simply, this really just means you can figure out how a particle moves. Simple things are simple, right? This happens in testing all the time. We can start with a concept, we have a few terms. They all seem to line up. Even our visuals are fairly easy to express. And it all boils down to something pretty simple.
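If it helps to see “figuring out how a particle moves” as something you can run, here is a minimal sketch in Python. The `Particle` class, the `step` function, and the simple time-stepping scheme are my own illustrative assumptions, not anything Newton wrote down: a force nudges the momentum vector, and the momentum vector nudges the position vector.

```python
from dataclasses import dataclass
from typing import Tuple

Vector = Tuple[float, float]  # a 2D vector: (x component, y component)


@dataclass
class Particle:
    mass: float
    position: Vector
    momentum: Vector


def step(p: Particle, force: Vector, dt: float) -> Particle:
    """Advance the particle by one small time step dt under a constant force."""
    # The force changes the momentum vector.
    px = p.momentum[0] + force[0] * dt
    py = p.momentum[1] + force[1] * dt
    # The (updated) momentum changes the position vector; velocity = momentum / mass.
    x = p.position[0] + (px / p.mass) * dt
    y = p.position[1] + (py / p.mass) * dt
    return Particle(p.mass, (x, y), (px, py))
```

Calling `step` over and over with a small `dt` traces out the path of the particle, which is a rough numerical stand-in for “solving for the evolution in time.”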

You figure out the specifics of how a particle moves by solving a differential equation that is given by Newton’s second law. What this law, and thus equation, specifically says is this:

“The acceleration of an object as produced by a net force is directly proportional to the magnitude of the net force, in the same direction as the net force, and inversely proportional to the mass of the object.”

Uh huh. Whatever. Well, you might recognize this better when it is stated more simply:

“Force equals mass times acceleration.”

Notice that a couple of times there I reframed ideas in a simpler fashion. We often have to do this in testing, particularly in a technical context. The question then is whether the lower fidelity we are going for in terms of explanation compromises the meaning we want to convey. Consider the two definitions above, for example.

Sometimes we simply use both descriptions of something, but choose one based on our audience at any given time. This is a balancing act we’re always dealing with. Yet, even when using a simpler means of expression, we can’t deny the underlying complexity …

If you were talking to an “expert” they would tell you that the important point here is something like this:

“A force is equal to the differential change in momentum per some unit of time as described by a certain calculus of reasoning.”

In the context of mathematics, a “calculus of reasoning” means a way to construct relatively simple quantitative models of change and to determine the consequences of those changes.
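In symbols, the expert’s version and the simple version line up like this. The notation is the usual textbook one and is my choice, not part of the quotes above: p is momentum, v is velocity, a is acceleration, and m is mass.

```latex
\vec{F} = \frac{d\vec{p}}{dt}, \qquad \vec{p} = m\vec{v}
\quad\Longrightarrow\quad
\vec{F} = m\,\frac{d\vec{v}}{dt} = m\,\vec{a}
\quad \text{(when } m \text{ is constant)}
```

So “force equals mass times acceleration” is the special case you get when the mass doesn’t change.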

If that’s hard to wrap your head around, consider that poor old Newton had to develop both the mechanics named after him and the differential and integral calculus at the same time.

Frame the Language

I should probably state that calculus is the language that allows the precise expression of the ideas of Newtonian mechanics. Without that language, Newton’s ideas about physics couldn’t be formulated in any useful way. Basically, differential calculus has you looking at the rate of change of something at any point. It’s very unit-like in nature. Integral calculus allows you to take the sum of many infinitesimal points. It’s very integration-like in nature. In testing, we create our own languages that allow expression of the ideas of how to test an application. This is no different than how code, often in very different types of programming languages, is used to express the ideas of a business domain. Notice, also, that we have a very unit-based style of reasoning as well as an integration-based style of reasoning.
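To make the “unit-like” versus “integration-like” distinction a bit more concrete, here is a rough numerical sketch. The function names and the particular approximation schemes (a central difference and a midpoint sum) are my own assumptions for illustration; real calculus works with exact limits rather than small finite steps.

```python
def derivative(f, x, h=1e-6):
    """Rate of change of f at the single point x (central difference)."""
    return (f(x + h) - f(x - h)) / (2 * h)


def integral(f, a, b, steps=10_000):
    """Sum of many thin slices of f between a and b (midpoint rule)."""
    width = (b - a) / steps
    return sum(f(a + (i + 0.5) * width) for i in range(steps)) * width


def square(x):
    return x * x


slope_at_3 = derivative(square, 3.0)      # close to 6
area_0_to_3 = integral(square, 0.0, 3.0)  # close to 9
```

The derivative only ever looks at one point and its immediate neighborhood. The integral only makes sense once you add up everything across a range.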

But Don’t Attribute It All To One Person

Did you, like I sometimes do, feel slightly stupid when I said that Newton had to develop both classical mechanics and the differential and integral calculus at the same time? It’s a little humbling, right?

Well, cheer up! Newton didn’t just invent calculus one day. In fact, the ideas and concepts of mathematical calculus were developed and worked on over the course of a very long time. What Newton did do is provide one form of simplifying algorithm for doing the “work” of calculus. That’s nothing to sneeze at, to be sure. But don’t get hung up on one guy. We see this in the testing industry all the time, such as with “Dan North invented BDD” or “Kent Beck invented extreme programming” or “Martin Fowler said everything that is ever worth saying about programming ever.” It’s important not to discount the public work these people did but it’s also important to realize that they were simply following in a tradition. This is important because that tradition needs to continue to evolve. And often their solutions are only “approximately right.” Keep that “approximately right” part in the back of your mind; I’ll return to it.

Expanding Our Field Of Knowledge

Okay, so Newton knew that particles experienced a force. And when they did, they moved. And Newton helped us understand the laws for that movement.

That brief paragraph could actually describe, at a very high level, the work of the seventeenth and eighteenth century. Moving forward a bit, in the nineteenth century, one of those forces — the electromagnetic force that acts between charged particles — was starting to be understood. A new language for this understanding was required and the subject eventually became known as electrodynamics. Eventually it would be called classical electrodynamics.

Electrodynamics adds a new element — the field — to the particles of Newtonian mechanics. Specifically, an electromagnetic field was added. Much like in testing, we might need a new language, or means of expression, when a new element is added. Consider how we used the “language” of Selenium for a long time to test web sites. But then mobile sites came along and we needed a new language for expression, which we find in Appium. Then Windows-specific applications got Winium. In truth, these are all speaking the same language — delegating down to a common Selenium API — but using different means of expression.

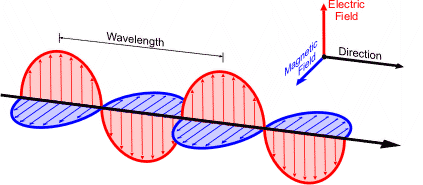

Let’s stick with this idea of the field for a moment. A field is basically something that exists throughout an extended area of space. This is quite different from something like a particle that exists at just one point in space. Now hang on to your socks because here we’re going to reuse our vector idea from particle motion. The electromagnetic field at a given time is described by two vectors for every point in space.

In this context, one vector describes the electric field, the other the magnetic field. The above visual shows this with the particle vector from before added in.

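So notice that before we had two vectors, position and momentum, for each particle.

And now here we have two vectors, electric and magnetic, for every point in space.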

Are these an equivalence class, though? Meaning, even given the slightly different wording as I did here, are we still fundamentally talking about the same kinds of concepts? A question that we often have to ask in testing.

Eventually a guy named James Clerk Maxwell formulated what came to be known as the Maxwell equations. (It was actually a guy named Oliver Heaviside who summarized Maxwell’s theory into what would become known as the four “Maxwell equations.” So, again, not everything is always due to one person, even if everyone acts like it.) These were a set of differential equations whose solution determined how the electric and magnetic field vectors interacted with charged particles and how the field itself evolved in time.
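For reference, here is the four-equation vector form usually credited to Heaviside, written in SI units. The symbols are the standard textbook ones, which this post doesn’t otherwise define: E is the electric field, B is the magnetic field, ρ is the charge density, J is the current density, and ε₀ and μ₀ are the vacuum constants.

```latex
\begin{aligned}
\nabla \cdot \vec{E} &= \frac{\rho}{\varepsilon_0} \\
\nabla \cdot \vec{B} &= 0 \\
\nabla \times \vec{E} &= -\frac{\partial \vec{B}}{\partial t} \\
\nabla \times \vec{B} &= \mu_0 \vec{J} + \mu_0 \varepsilon_0 \frac{\partial \vec{E}}{\partial t}
\end{aligned}
```

You don’t need to be able to solve these to follow along. The thing to notice is that they are differential equations relating the two field vectors to each other and to the charges.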

Notice that before I said this:

- the evolution in time of particles (their position and momentum) was described by differential equations

Now here I’m saying this:

- the evolution in time of fields (and their interactions with particles) is also described by differential equations

As you would imagine, the equations are probably different, but again: are we still fundamentally dealing with the same thing? Notice how spotting the similarity in wording when describing concepts is critical.

Shifting Contexts, But Same Domain

So as I ramble my way through all this, what you should get here is that we were learning better ways to communicate about nature. It became necessary to take one context — particles acting under a force — and apply it to a wider context — fields that exist throughout all of space. This required further refinement as we realized that since the fields exist throughout space, they must co-exist with the particles. And if that’s the case, perhaps there are some interactions between the particles and the fields.

Historically speaking, this is a perfect example of the broadening of context and a better understanding of scope within a particular domain. It’s not any different from what we have to do as we understand a business domain, or consider how tests and code have to interact to provide a more full picture of reality.

When Things Integrate…

So we have particles all over space. We have fields that exist throughout space. This means particles and fields are not just “units” but rather are “integrated.”

As it turned out, the solutions to Maxwell’s equations could be quite complicated in the presence of charged particles. But in a vacuum the solutions were relatively simple. Further, those solutions corresponded to wavelike variations in the electromagnetic field.

As it turns out, these wavelike variations were light waves. Imagine that! Being slightly more descriptive, not to mention accurate, these waves seemingly could have arbitrary frequency and they traveled at the speed of light. So yeah: light waves. This is really important to understand. What Maxwell’s equations did was describe simultaneously two seemingly different sorts of things — the interactions of charged particles and the phenomenon of light. This is often the quest of test tools. Find the means to describe the application and the ways by which the application is tested. In reality, we have two bits: the production code and the test code. Our “field” that permeates all of space is the business domain that our code sets operate within. That domain is impacted by its interaction with code — i.e., the attempt of code to make the domain realizable. But think of all the language attempts we use to describe all this. Just in the testing sphere alone, we use natural language requirements, fluent interfaces, APIs, DSLs, structured abstraction layers like Gherkin, and so forth.
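Going back to the light waves for a second: in a vacuum, the wave solutions travel at a speed fixed by the two constants that appear in the equations, which is a standard textbook result. Here is a small sketch of that check. The constant values are approximate measured values that I’m supplying, and the function name is my own.

```python
import math

# Approximate SI values for the vacuum constants (assumed here, not from the post).
MU_0 = 1.25663706212e-6      # vacuum permeability, in N/A^2
EPSILON_0 = 8.8541878128e-12  # vacuum permittivity, in F/m


def wave_speed(mu, epsilon):
    """Speed of electromagnetic waves for the given constants: 1 / sqrt(mu * epsilon)."""
    return 1.0 / math.sqrt(mu * epsilon)


speed = wave_speed(MU_0, EPSILON_0)  # roughly 3.0e8 metres per second
```

Two numbers that come out of experiments with charges and magnets combine to give the measured speed of light. That is the “describing two seemingly different things at once” part in action.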

Things Get … Complicated

In the twentieth century, it became clear that the explanation of certain sorts of physical phenomena required ideas that went beyond those of Newtonian mechanics and electrodynamics as put forward by Maxwell. There’s a whole lot of detail here that I have to skip over but just know that it became clear that electromagnetic waves had to be “quantized.” A common way to visualize what this means is via the following:

This quantization idea said that for an electromagnetic wave of a given frequency, the energy carried by the wave could not be arbitrarily small, but had to be an integer multiple of a fixed energy. Going around saying the “integer multiple of a fixed energy” was probably just as cumbersome then as it is now. So a simplifying term was used that became the justification for everything in science fiction: the “quantum.” What you see here is the emergence of a new way of thinking. This happens a lot in testing and coding, right? We get exposed to design patterns that make us entirely reconsider how we think of expression. We encounter entirely new libraries of code that do certain things — like, say, create a Virtual DOM — and that impacts how we express tests to handle that new way of doing things.
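Here is a rough sketch of what “an integer multiple of a fixed energy” looks like in code. It assumes the standard relation that the fixed energy for a wave of frequency f is Planck’s constant times f. The function names and the tolerance are my own choices.

```python
PLANCK_H = 6.62607015e-34  # Planck's constant in joule-seconds (exact in SI)


def allowed_energies(frequency_hz, max_quanta):
    """Energies a wave of this frequency may carry: 1, 2, ... max_quanta quanta."""
    return [n * PLANCK_H * frequency_hz for n in range(1, max_quanta + 1)]


def is_allowed(energy, frequency_hz, tolerance=1e-9):
    """True if energy is (within tolerance) a positive whole number of quanta."""
    quanta = energy / (PLANCK_H * frequency_hz)
    return round(quanta) >= 1 and abs(quanta - round(quanta)) < tolerance
```

For example, with a frequency of `5e14` Hz (visible light), `allowed_energies(5e14, 3)` gives three evenly spaced energies, and an energy halfway between the first two fails `is_allowed`.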

The basic ideas for a consistent theory of the “quantum” were worked out over a relatively short period of time after initial breakthroughs by Werner Heisenberg and Erwin Schrödinger. (Yes, these are the people for whom “Heisenbugs” and “Schrödinger tests” are named. They would no doubt be so proud.) We can all relate to certain individuals in the field who guide ways of thinking. Testers might point to James Bach. Developers might point to Martin Fowler. And so on. It’s still important not to get too stuck on the people however.

This overall set of ideas came to be known as quantum mechanics. Once the broader implications of these ideas became known, this revolutionized our picture of the physical world. In fact, it was here that “mechanics” and “electrodynamics” as they were understood up to that point were given the title “classical.” And here we have not just a new way of thinking, but a new way of doing. A new mechanics was on the scene. And yet …

Old and New Coexist

Quantum mechanics subsumes classical mechanics, which now appears merely as a limiting case that is approximately valid under certain conditions. But those “certain conditions” are pretty wide. For example, we still use Newton’s equations to launch rockets and satellites even though they are only ever going to be an approximation to the true state of affairs. So we don’t necessarily throw out everything we had before. We just realize that we have different levels of abstraction that we can work on. The amount of fidelity — i.e., the correspondence with reality — required determines what level of abstraction we work with. Certainly approaches like TDD and BDD have introduced many layers of abstraction and/or expression.

It cannot be overstated that the picture of the world provided by quantum mechanics is completely alien to that of our everyday experience. Our everyday experiences are in a realm of distance and energy scales where classical mechanics is highly accurate, at least so far as we can tell. We have to either consider the very, very small or the very, very large before quantum theory starts to “actually” matter. Think here of the cognitive friction testers or developers go through as they have to become acquainted with a new way of thinking and behaving. A perfect example is the idea of BDD where the emphasis is much more on communication and collaboration. But also consider those cases where sometimes we don’t have to go for the “latest and greatest” because the domain we’re working in doesn’t require the “true” or “pure” vision, but rather the one that is “merely” highly accurate. This goes back to what I said before about the ‘big names’ in our industry often only being “approximately right.”

The Space of Ideas

Okay, let’s not lose our path on the winding trail here. Remember those vectors we talked about before? Yeah, so, it turns out that in quantum mechanics, the state of the world at a given time is not given by a bunch of particles, each with a well-defined position and momentum vector. Rather, the state of the world is given by a mathematical abstraction. Literally. What we have are vectors specified not by three spatial coordinates, but by an infinite number of coordinates. How often have you felt like you started with something on your projects that was relatively simple and then, as you learned more, it felt like you ended up with something approaching infinity? Yeah, me too.

I don’t know about you but I have a hard time visualizing an infinite state space. To make things even harder to visualize, these spatial coordinates don’t have the decency to be real numbers. Instead they are called complex numbers, which basically just means a number that has a real and an imaginary part. Try presenting your next set of test metrics that way (“the real part looks bad, granted, but the imaginary part — fantastic!”). Certainly as we get into more distributed architectures, it can be difficult to visualize things. New applications, likewise, demand a level of code complexity that can make it very difficult to determine what is happening. Machine learning, expert systems with rules-based languages, and data science are often dealing with the very complex.

This infinite dimensional set of vectors to represent a “state space” was eventually given a name. It was called Hilbert space, named after a mathematician by the name of David Hilbert.

The Space of Ideas Needs Simplicity

An interesting thing about complexity is that it often presents itself as a fundamental simplicity. I bet all of this above talk can seem very complex if you’ve never been exposed to the ideas. But you can focus on the simplicity initially.

For example, if you’re trying to impress your spouse and/or significant other with how smart you are, you might say that the fundamental conceptual and mathematical structure of quantum mechanics is really quite simple. After all, it only has two components.

- At every moment in time, the state of a system is completely described by a vector in Hilbert space.

- Observable quantities correspond to operators on Hilbert space.

That’s it! What this simplistic description did is frame itself in terms that can be asked about. For example, going with the second point, the most important of those operators is referred to as the Hamiltonian operator. It basically tells you how the state vector changes with time. Here’s a perfect example where the “business rules” can be stated quite simply and concisely but they introduce terminology that needs context and provides the path to the complexity.

The Space of Ideas Also Needs Complexity

Okay, hold on! What’s Hilbert space? Basically it’s a Euclidean space — that which we all likely learned about in grade school — generalized to infinite dimensions. Consider that three-dimensional space has three independent dimensions, which we can combine in different amounts to give a specific “address” or “identifier” to any point in space. So to address, or identify, the point (2,4,6) we might say “go 2 units in the x-dimension, go 4 units in the y-dimension and go 6 units in the z-dimension.” With Hilbert spaces the dimensions can be arbitrary.

So, just being a bit silly here, consider the set of all test cases which can be executed an integer number of times in a given time period. I could, if I were a madman, create some arbitrary function by combining all these test cases together. I then consider each test case to be an independent “dimension” in a Hilbert space. The function I created is now a “point” in this abstract space. Huh. Nifty.

Okay, so what’s the Hamiltonian operator? The Hamiltonian operator is the thing that “causes translation in time.” This is just jargon for the fact that the Hamiltonian tells you how the system moves forward in time. In particular, how that system moves forward in time as it is described by something called the Schrödinger equation.

Uh … yeah. Okay, and the Schrödinger equation is …? Well, like some other stuff we talked about, it’s a differential equation. That equation is used to find the allowed energy levels of any system that can be described as “quantum.”
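For those who like to see the symbols, here are the two standard textbook forms of the Schrödinger equation. The notation is the conventional one and not something defined in this post: ψ is the state vector, Ĥ is the Hamiltonian operator, ħ is the reduced Planck constant, and Eₙ is an allowed energy.

```latex
i\hbar\,\frac{\partial}{\partial t}\,\lvert\psi(t)\rangle = \hat{H}\,\lvert\psi(t)\rangle
\qquad\text{and}\qquad
\hat{H}\,\lvert\psi_n\rangle = E_n\,\lvert\psi_n\rangle
```

The first form is the “translation in time” part: the Hamiltonian tells you how the state changes from one moment to the next. The second form is the one you solve to find the allowed energy levels.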

So going with my test case example, I may have a Hamiltonian operator that tells you how the test case is executed over a time period. That is its “translation in time.” The Schrödinger equation would then limit the possible states that the test could be in at any time: it can be, say, passed or failed, but not somewhere in between those states.

So if you do what I do and pretend all this makes sense, here’s what you get: the state of any system made up of particles and subject to forces is described by some vector in an infinite dimensional, abstract space (Hilbert space). These vectors have operators applied to them (Hamiltonians) that cause them to evolve in time and equations (Schrödinger) can be applied to all of this to tell us what the allowed energy is as that evolution in time occurs. How many times do we, particularly when working with business, have to figure out a bunch of domain terminology and then try to explain it in some way that is concise, necessarily abstracts away some details, but doesn’t give a false picture of whatever it is we’re talking about? We often have to come up with examples that are illustrative of what we’re talking about. My test case idea above was one, probably poor, attempt to do just that.

Method to the Madness?

A bit of an aside, but perhaps a relevant one: a lot of people hear that quantum mechanics is entirely probabilistic. The idea being that the entire world is nothing more than a set of fortuitous happenings based on some sort of “roll of the dice” occurring below our levels of observation. C’mon, be honest: don’t a lot of our projects feel that same way sometimes?

However, you should note that the fundamental structure I’ve been describing to you so far is not probabilistic. Rather it’s just as deterministic as the classical mechanics we started off with.

It is? Sure. If you know precisely the state vector at a given time, then the Hamiltonian operator tells you precisely what it will be at all later times. The explicit equation you solve to do this is the Schrödinger equation. Sounds pretty deterministic to me.
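Here is a toy sketch of that determinism. It makes some loud assumptions that are mine, not the post’s: the system has just two states with known energies, the state vector is written as one complex amplitude per state, and the units are chosen so that ħ = 1. Under those assumptions, moving forward in time just rotates the phase of each amplitude.

```python
import cmath


def evolve(amplitudes, energies, t, hbar=1.0):
    """Return the state at time t: each amplitude picks up a phase set by its energy."""
    return [a * cmath.exp(-1j * e * t / hbar) for a, e in zip(amplitudes, energies)]


def total_probability(amplitudes):
    """Sum of squared magnitudes; stays at 1 for a properly normalized state."""
    return sum(abs(a) ** 2 for a in amplitudes)


start = [0.6, 0.8j]                      # a normalized two-state vector
later = evolve(start, [1.0, 2.0], 3.0)   # same inputs always give the same output
```

There is no dice roll anywhere in `evolve`. Run it a thousand times with the same starting state and you get the same answer a thousand times.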

Well, here’s the rub. Probability comes in because you don’t directly measure the state vector. Instead you measure the values of certain states that are called “classical observables.” These are things like position, momentum, and energy. The tricky part is that most states don’t correspond to well-defined values of these observables. In fact, the only states with well-defined values of the classical observables are special ones that are called “eigenstates” of the corresponding operator. Oh, just what I needed: a new term. What’s an eigenstate? This actually gets a little complicated. Just know that it’s basically a quantum mechanical state that has a particular value — called a wave function — and that wave function is an eigenvector — not just a vector, notice — that corresponds to a physical quantity in real space.

What all this means is that our operator acts on the state in a very simple way. If you remember that part of these states is an imaginary number, the operator just multiplies the state by some real number. This real number is then the value of the classical observable — such as momentum, for example.
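A small sketch may help with “the operator just multiplies the state by some number.” Here the operator is a plain 2×2 matrix and the state is a two-component vector. Both are toy stand-ins that I’m assuming for illustration (real observables act on much bigger spaces), and `apply` and `eigenvalue_of` are hypothetical helper names.

```python
def apply(operator, state):
    """Apply a square matrix (list of rows) to a state vector (list of numbers)."""
    return [sum(row[j] * state[j] for j in range(len(state))) for row in operator]


def eigenvalue_of(operator, state, tolerance=1e-12):
    """Return the multiplier if the operator only rescales the state, else None.

    Assumes the state is not the zero vector.
    """
    result = apply(operator, state)
    # Use the first nonzero component to work out the candidate multiplier.
    pivot = next(i for i, c in enumerate(state) if abs(c) > tolerance)
    ratio = result[pivot] / state[pivot]
    if all(abs(r - ratio * s) <= tolerance for r, s in zip(result, state)):
        return ratio
    return None


SWAP = [[0, 1], [1, 0]]  # toy operator: swaps the two components
```

With this toy operator, the state `[1, 1]` comes back unchanged (multiplier 1) and `[1, -1]` comes back flipped in sign (multiplier -1), so both are eigenstates. The state `[1, 0]` comes back as `[0, 1]`, which is not a multiple of what went in, so it has no well-defined value for this “observable.”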

So when you perform a measurement, you don’t directly measure the state of a system. Rather, you interact with it in some way that puts the system into one of those special states that does have well-defined values for the particular classical observables that you are measuring. This must sound like testing to you, right? Putting systems in a state so that you can measure something about them. Yet that very act of putting them into a state means, by definition, you are interacting with the thing being observed and thus you might change what can be observed about it.

It’s at this very point that probability comes in. This means you can predict only what the probabilities will be for finding various values for the different possible classical observables. I’m willing to bet that many of you were able to follow along with all of this relatively well until we got into the probability and, more particularly, the eigenstates. I presented the material this way on purpose to show that, eventually, you can’t abstract away the details any more. True knowledge does require specialized knowledge of some sort. There does come a point in our work — whether it’s understanding the requirements, understanding how best to apply automation, and so on — where we start to run into cognitive friction.

Don’t Count on Your Intuition

While I’m necessarily skipping a whole lot of details that could prove what I’m saying, there is actually a fairly important point I hope you understand. The disjunction between the classical observables that you measure and the underlying conceptual structure of the theory is what leads to all sorts of results that violate our intuitions based on classical physics. A corollary I find here is when I try to train testers in modern testing practices, particularly when they have been more “traditional” — dare I say, classical? — testers. They have a very hard time building up their intuition for this brave new world. I’ve talked before about how there are limits to our intuitions in testing. The same applies in physics.

Probably one of the most famous examples of this intuition-violation is the uncertainty principle attributed to Werner Heisenberg. This principle’s name originates in the fact that there is no vector in Hilbert space that describes a particle with a well-defined position and momentum. The reason for this is that the position and momentum operators don’t commute. This means that applying them in different orders gives different results. That’s really weird, by the way. It’s like saying a × b does not equal b × a.
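Ordinary numbers always commute, but matrices don’t have to, and that is the easiest place to see the weirdness. The sketch below uses two small 2×2 matrices as stand-ins. They are my own choice for illustration and are not the actual position and momentum operators, which can’t be written as finite matrices like this.

```python
def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    size = len(a)
    return [
        [sum(a[i][k] * b[k][j] for k in range(size)) for j in range(size)]
        for i in range(size)
    ]


def commute(a, b):
    """True if applying a then b gives the same result as b then a."""
    return matmul(a, b) == matmul(b, a)


X = [[0, 1], [1, 0]]   # swaps the two components
Z = [[1, 0], [0, -1]]  # flips the sign of the second component

x_then_z = matmul(Z, X)  # [[0, 1], [-1, 0]]
z_then_x = matmul(X, Z)  # [[0, -1], [1, 0]]
```

Same two operations, different order, different result. That is all “don’t commute” means.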

The result of this oddness is that state vectors that are “eigenstates” for both position and momentum simply don’t exist. Read that again: they literally do not exist. How this applies in the real world is that if you consider the state vector corresponding to a particle at a fixed position, it contains no information at all about the momentum of the particle. Similarly, the state vector for a particle with a precisely known momentum contains no information about where the particle actually is.
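In the usual textbook notation (which I’m bringing in here; the post itself doesn’t define these symbols), the non-commuting statement and the resulting trade-off look like this, where x̂ and p̂ are the position and momentum operators, ħ is the reduced Planck constant, and Δx and Δp are the spreads in position and momentum:

```latex
[\hat{x}, \hat{p}] = \hat{x}\hat{p} - \hat{p}\hat{x} = i\hbar
\qquad\Longrightarrow\qquad
\Delta x \,\Delta p \;\ge\; \frac{\hbar}{2}
```

Squeeze the spread in position toward zero and the spread in momentum has to blow up, and vice versa.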

If you think way back to our vectors in classical mechanics (ah, how simple it all was then), you will realize that this new “quantum” way of viewing the world totally upends everything we know. Which is odd because “everything we know” still seems to work pretty much like it did when classical mechanics was formulated. So we get a double-dose of intuition-violation. (Thank you very much, universe!)

Can I Have a Visual for This?

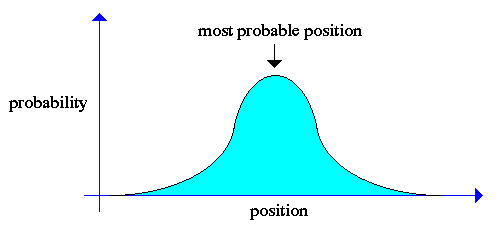

If you find a good one, do me a favor and let me know. But here’s one that gets passed around a lot:

Well, that’s helpful, huh? What that visual means is “wave functions exist in the context of Hilbert space”. That’s what you got out of that, right? Don’t worry. No one else did either. Well, to be fair, you might get that if you knew the terms. And the context behind them. But you also might not.

While states in quantum mechanics can abstractly be thought of as vectors in Hilbert space, a somewhat more concrete alternative is to think of them as “wave functions.” This is a term I introduced above but didn’t really get into.

Being simplistic, wave functions are complex-valued functions of the space and time coordinates. When a function is described as “complex-valued” it just means functions whose values are complex numbers. What this wave function idea basically says is that the probability of finding a given particle with one of the classical observable states (say, a particular position) is determined by all of the values of the wave function (throughout all space) as well as the localized values of the wave function (at each point in space).
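Here is a minimal sketch of that “all of the values plus the local value” idea. It assumes a heavily simplified wave function that I’m making up for illustration: space is chopped into a handful of positions, with one complex number per position. The function name is hypothetical.

```python
def position_probabilities(wave_function):
    """Turn one complex value per position into one probability per position.

    The local value sets the weight at each position; the sum over all
    positions is what scales those weights so they add up to 1.
    """
    total = sum(abs(value) ** 2 for value in wave_function)
    if total == 0:
        raise ValueError("wave function is zero everywhere")
    return [abs(value) ** 2 / total for value in wave_function]


psi = [1 + 1j, 2, 0, 1j]  # made-up complex values at four positions
probabilities = position_probabilities(psi)
```

Notice that you can’t get the probability at one position from the local value alone. You need the whole wave function to know what the local value is a fraction of.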

So you end up with this kind of visual:

Unified Vision for Explanation

As all of these thoughts on physics were developing, there were two competing views of quantum mechanics. One was the wave mechanics proposed by Schrödinger and the other was referred to as matrix mechanics, led mostly by Heisenberg and a few other folks. (Max Born, Pascual Jordan, and Paul Dirac, if curious.) It turns out they both work the same, just using different methods to get to the same conclusions.

The point I want to make, however, is that as this picture continued to emerge, you might notice how we’re bringing in specific determined states — like the particles of classical mechanics — and the waves — like those in classical electrodynamics. We’re also correlating these new functions to the previous idea of vectors, which applied in both mechanics and dynamics.

The reason this matters is because, depending on context, it can be useful to think of quantum mechanical states either as abstract vectors in Hilbert space or as wave functions. These two points of view are completely equivalent. Remember before how I kept asking about whether what we were dealing with is equivalent? Well, here’s an example of where it can be — you just need those higher level ideas to tie everything together.

But, again, you probably didn’t get that from the visuals. Then again, you probably didn’t get that from any of my explanations either. But I hope, at the very least, that you started to see the convergence of the ideas.

Are You Done Yet? (Please be done.)

Yeah, I think we’re at a good point to close out here.

So did, as the title of this post states, testing help us understand physics? Or was it the other way around?

A key thing to understand is that all of these ideas — regardless of which approach you take and which visuals you use and what explanations you suffer through — require a lot of effort to develop intuitions about.

However, that practice of developing intuition, and using those intuitions to further your own insights, is critically important. And that’s what I hope this post helped you do a little bit. One of my goals is to get people to start thinking a bit more broadly about the discipline of testing as well as quality assurance. Sometimes one of the most effective ways to do that is to present the context of testing as embedded within the thinking of a different discipline.

I mostly followed all this. The whole eigenstate concept makes very little sense to me so I was glad to see you affirm this was a likely spot we might get confused! 🙂 Two things you said make me wonder about the equivalence point you were trying to draw.

“As it turned out, the solutions to Maxwell’s equations could be quite complicated in the presence of charged particles. But in a vacuum the solutions were relatively simple.”

and

“What Maxwell’s equations did was describe simultaneously two seemingly different sorts of things — the interactions of charged particles and the phenomenon of light.”

But was there really an equivalence class here then? It sounds like you would have different equations. So this reads to me like they would be tests that work one way (in a test environment; “charged particles”) but another way (in a production environment, “vacuum”).

Indeed, yes, they are an equivalence. And, yes, you also have different equations. There are many ways — all of them of equal validity — that these equations can be written. Which particular way you want to use really depends on the application you are going for. The most popular incarnation of the equations, and the one that Heaviside formulated for Maxwell, has to do with how electrostatics was merged with magnetism, giving us electromagnetism.

But a key point is that regardless of which equation set you use, they give identical results. This is in the same way that the wave mechanics and matrix mechanics formulations of quantum mechanics similarly give the same results, even though the formulations are very different. If something didn’t work in one, it wouldn’t work in another and vice versa. Thus like an equivalence class in testing wherein if a bug is found with one test, it will be found with another — if those tests are in the equivalence class.
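As a rough sketch of what I mean by an equivalence class on the testing side: the `shipping_band` function below is an entirely hypothetical system under test, and the weights are made-up inputs. The point is only that every member of a class should exercise the same behavior, so any one of them can stand in for the rest.

```python
def shipping_band(weight_kg):
    """Hypothetical system under test: maps a parcel weight to a price band."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    if weight_kg <= 2:
        return "small"
    if weight_kg <= 20:
        return "medium"
    return "large"


# Each list is meant to be one equivalence class of inputs.
EQUIVALENCE_CLASSES = {
    "small": [0.1, 1, 2],
    "medium": [2.5, 10, 20],
    "large": [20.5, 100],
}


def class_is_consistent(expected_band, members):
    """True if every member of the class produces the same expected result."""
    return all(shipping_band(weight) == expected_band for weight in members)
```

If `class_is_consistent` ever came back false for a class, that would tell you the members weren’t really equivalent, which is exactly the situation where one test finds a bug and its supposed twin doesn’t.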

So, in your scenario, if you have tests that respond differently in that test environment than they do in the production environment, it’s a clear sign that there is not an equivalence in something. It could be a configuration in the environment. It could be due to the lack of certain data that the tests rely on. It could be due to certain sensitivities in one environment that are not present in another.
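One cheap way to start hunting for that missing equivalence is to diff what the two environments think their configuration is. This is only a sketch under assumptions: the settings are plain dictionaries, and the keys and values below are invented for the example.

```python
MISSING = "<missing>"  # marker for a setting that one environment lacks


def config_differences(test_env, prod_env):
    """Return {setting: (test value, production value)} for every mismatch."""
    differences = {}
    for key in sorted(set(test_env) | set(prod_env)):
        test_value = test_env.get(key, MISSING)
        prod_value = prod_env.get(key, MISSING)
        if test_value != prod_value:
            differences[key] = (test_value, prod_value)
    return differences


# Invented example settings, not taken from any real system.
test_settings = {"timeout": 30, "feature_x": True, "seed_data": "sample"}
prod_settings = {"timeout": 5, "feature_x": True}
mismatches = config_differences(test_settings, prod_settings)
```

Here the mismatches are the timeout and the seed data, which map nicely onto the “configuration” and “lack of certain data” suspects.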

So part of what I get out of this is that Newton’s theories still work but we now know they are incomplete or wrong. But how can they work if they are wrong?

Putting on the science hat, it has to do with reductionist observation and the use of approximation in the reliability of measurement over (relatively) short-term effects. Consider that Copernicus pretty much demolished any notion of an Earth-centered system. Einstein smacked around Newton’s illusion of absolute space and time. Quantum theory put a somewhat decisive end to the notion of a controllable measurement process. Yet prior to those developments, those ideas — geocentrism, absolute space and time, always measurable processes — did work in some very real sense. They explained observations and even allowed a certain amount of prediction. They simply had a breaking point where the scale they were considering was no longer “short-term.”

Putting on the testing hat, Newton’s mechanics are an abstraction. Just like we may have, say, a series of specifically worded tests about a particular aspect of our application. For example, say we have a major billing component. I can write tests about that billing component but billing, as a concept, is not just spatial in nature; it’s temporal. So any tests I write are, by definition, a static representation of the temporal duration that is the reality of how billing works. The tests are correct insofar as they allow me to accurately test aspects of billing. But there is a wider aspect that they don’t capture. When I reach that wider aspect, I need to refine the tests.

Sheesh, reminders of the school days. I never did quite get all the wave functions and probabilities. Good read, though.

Yeah, it’s by no means intuitive in many cases. One way that I sometimes present it to people — when it’s okay to skimp on details — is just to say that in quantum mechanics, this thing called the wave function essentially stores up a lot of complex numbers to represent particles. These numbers can be added and combined in lots of ways and, when they are, the squares of the absolute values of these sums are interpreted as probabilities. The probability can be visualized as an amplitude: the higher the amplitude, the higher the probability of finding the particle right there, or of the particle having an energy of this or a momentum of that.
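The “add first, then square” order matters more than it might seem. Here is a tiny sketch with made-up amplitudes. The function name is mine, and the results are only probabilities up to an overall normalization.

```python
def probability_from(amplitudes):
    """Add the complex amplitudes first, then square the absolute value of the sum."""
    return abs(sum(amplitudes)) ** 2


in_step = probability_from([0.5, 0.5])       # amplitudes reinforce each other
out_of_step = probability_from([0.5, -0.5])  # amplitudes cancel each other
each_alone = probability_from([0.5])         # one contribution on its own
```

Each contribution alone gives 0.25, so you might expect two of them to give 0.5. Instead you get 1.0 when they line up and 0.0 when they oppose. That cancellation is something plain old probabilities, which are never negative, can’t do.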

The wave function is (apparently, anyway) nature’s way of encapsulating a certain amount of complexity but providing an API of sorts that we can call on. Each call is like a REST endpoint that returns us something tangible. So if I want to know something about a particle, I query the API. But the trick is that I can’t query the API about two “complementary” aspects of the particle at once. So I can’t query for both its exact position and its exact momentum. I can only query for one of those.

To be sure, I can query for an approximation of, say, the position. And that will then allow me to have at least an approximation of the momentum. But the more I query one aspect and the more refined my knowledge becomes, the less I can query the other aspect. So I can’t have “complete knowledge” of the system under observation/test.