I should note that this will be a very generalized case study, more for pedagogical purposes than anything else.

So let’s say we write our requirements as tests and provide those tests as part of a repository. The repository is full of feature specs.

I should note that this will be a very generalized case study, more for pedagogical purposes than anything else.

So let’s say we write our requirements as tests and provide those tests as part of a repository. The repository is full of feature specs.

This case study would apply regardless of the format of your requirements or tests but I’ll be using the Gherkin structured style as an example.

We want to leverage our requirements-written-as-tests by using them as a knowledge base. Our hypothesis is that this knowledge base could be used to help us internally answer questions about our product. But then we realize something. These requirements-as-tests indicate how to use the system and under what conditions it provides certain behaviors. Further, these requirements-as-tests are augmented with actual data conditions that provide an understanding of how data is used in the context of our features.

So a second hypothesis forms: we can expose these requirements-as-tests to our customers. This will improve the ability for our customers to get reliable information about our product.

But we can’t just expose a bunch of tests and hope that’s useful to someone, right? Instead, we’ve been interested in exploring the idea of solutions like ChatGPT to augment the knowledge gathering process. On the other hand, we’re also skeptical enough because we know tools like ChatGPT can get things wrong.

So, in our case study, we want to tokenize the feature spec repository and provide this representation not only to ourselves internally but also to our users. The goal is to be able to query this repository and get valid responses regarding how to use our application and what data it can be used with.

This case study would apply regardless of the format of your requirements or tests but I’ll be using the Gherkin structured style as an example.

We want to leverage our requirements-written-as-tests by using them as a knowledge base. Our hypothesis is that this knowledge base could be used to help us internally answer questions about our product. But then we realize something. These requirements-as-tests indicate how to use the system and under what conditions it provides certain behaviors. Further, these requirements-as-tests are augmented with actual data conditions that provide an understanding of how data is used in the context of our features.

So a second hypothesis forms: we can expose these requirements-as-tests to our customers. This will improve the ability for our customers to get reliable information about our product.

But we can’t just expose a bunch of tests and hope that’s useful to someone, right? Instead, we’ve been interested in exploring the idea of solutions like ChatGPT to augment the knowledge gathering process. On the other hand, we’re also skeptical enough because we know tools like ChatGPT can get things wrong.

So, in our case study, we want to tokenize the feature spec repository and provide this representation not only to ourselves internally but also to our users. The goal is to be able to query this repository and get valid responses regarding how to use our application and what data it can be used with.

The above is a basic idea of the total system but right now we’re just focusing on that part on the right, which is the ability to tokenize the feature spec repository, which is serving as our knowledge base so that we can situate that behind a natural language query interface.

The above is a basic idea of the total system but right now we’re just focusing on that part on the right, which is the ability to tokenize the feature spec repository, which is serving as our knowledge base so that we can situate that behind a natural language query interface.

Design and Development Context

The front-end for this would likely be a chatbot style interface and the approach would be so-called “conversational AI,” consistent with our experiment around a ChatGPT-style interface. The back-end for this would be a model, possibly a neural network-based model. Common architectures for these kinds of chatbot systems include sequence-to-sequence models, transformer models, or a combination of different techniques. In fact, ChatGPT is based on a transformer style architecture: As a tester on this project, you’re told that the model will be trained to understand user inputs, generate appropriate responses, and provide information based on the tokenized representations of the feature spec repository. So, as a tester, you have to understand what’s actually happening here. You don’t have to understand the transformer architecture necessarily, which is why I’m going to spend zero time explaining that.

Instead, you’ll want to focus on the representation of the feature spec repository that ultimately gets exposed to users. What is that representation? How is it created? What is it made up of? How complete is it? How accurate is it?

As a tester on this project, you’re told that the model will be trained to understand user inputs, generate appropriate responses, and provide information based on the tokenized representations of the feature spec repository. So, as a tester, you have to understand what’s actually happening here. You don’t have to understand the transformer architecture necessarily, which is why I’m going to spend zero time explaining that.

Instead, you’ll want to focus on the representation of the feature spec repository that ultimately gets exposed to users. What is that representation? How is it created? What is it made up of? How complete is it? How accurate is it?

Initial Steps: Preprocessing

The feature spec repository will likely have undergone preprocessing. This is essentially preparing the text data for training the model. This involves things like text cleaning, where you remove any irrelevant or unwanted elements from the text. Essentially you jettison anything that may not contribute to the model’s understanding of the text. Which, as you can imagine can be tricky or, at the very least, nuanced. Testers are trained to remove incidentals from tests — and help product folks remove them from requirements — so the extent to which this has been done well does speak to part of the preprocessing. There will often be removal of so-called “stop words” like “the”, “and” and “is.” You might also deal with lemmatization or stemming. These are basically techniques that reduce words to their base or root form. So the goal of lemmatization, for example, is to convert words to their dictionary form (or lemma) while the goal of stemming is to reduce words to their root form by removing prefixes or suffixes. Consider this sentence:A person that weighs 200 pounds weighs exactly 75.6 pounds when standing on Mercury.In this example, the words would be stemmed as follows:

- “weighs” stemmed to “weigh”

- “pounds” stemmed to “pound”

- “standing” stemmed to “stand”

- “weighs” lemmatized to “weigh”

- “standing” lemmatized to “stand”

A 200 pound person will weigh exactly 75.6 pounds on Mercury.In this example, the lemmas remain the same as the tokens because they are either already in their base forms or are numerical values. The stemming would be similar to the previous case.

Tokenization

Once preprocessing is done, you get into tokenization. This is the process of splitting the text into smaller meaningful units, usually words or sub-words. This process breaks the text down into discrete tokens, hence the name of the process. Those tokens serve as the basic units of input for the model. With the above example, the tokens represent different components of the sentence, such as:

Articles ("A")

Numerical values ("200," "75.6")

Nouns ("pound," "person," "Mercury")

Verbs ("will," "weigh")

Adverbs ("exactly")

Tokens can then be organized in a hierarchical structure like a tree or graph, where relationships or dependencies between the tokens can be represented a bit more easily. This is useful for tasks like syntactic parsing or semantic analysis.

A

/ \

200 pound

| \

person will

/ \

weigh exactly

| \

75.6 pounds

|

on

|

Mercury

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 |

{ "value": "A", "children": [ { "value": "200", "children": [] }, { "value": "pound", "children": [ { "value": "person", "children": [] } ] }, { "value": "will", "children": [ { "value": "weigh", "children": [ { "value": "exactly", "children": [ { "value": "75.6", "children": [] } ] }, { "value": "pounds", "children": [] } ] } ] }, { "value": "on", "children": [ { "value": "Mercury", "children": [] } ] } ] } |

Feature: Calculate Weight on Other Planets

Implementation:

Weights are calculated as the weight of a person multiplied by the

surface gravity of the planet.

Background:

Given an authenticated user on the planets page

Scenario: Weight on Mercury

When the weight calculated is 200

Then the weight on Mercury will be exactly 75.6

And the weight on Mercury will be roughly 75

Scenario: Weight on Mercury (Condensed)

* a 200 pound person will weigh exactly 75.6 pounds on Mercury

* a 200 pound person will weigh approximately 75 pounds on Mercury

Scenario: Weight on Mercury (Query)

* a weight of 200 is what on Mercury?

Scenario: Weight on Venus

When the weight calculated is 200

Then the weight on Venus will be exactly 181.4

And the weight on Venus will be roughly 180

Obviously this is a very contrived example but the main point to understand is that the tokenizing process likely means converting the text into numerical representations that the model can process. Tokenization maps words or sub-words to unique numerical identifiers or vectors or linked lists or tree/graph models. The latter are particularly effective if you start to think along the lines of ontologies.

Training the Model

Once the tokenizing is completed, the tokenized data is used to train the model. The training process typically involves an iterative optimization algorithm. You can think of an “iterative optimization algorithm” as being something like the “learning process” for the AI model. The algorithm involves a series of steps where the model makes predictions, compares those predictions to some desired outcomes, and then adjusts its internal parameters to get closer to the correct outcomes when there are deviations. A common technique here is the use of stochastic gradient descent. This technique iteratively updates the parameters of a model to minimize the difference between its predictions and the true values in whatever the training data is. It’s called “stochastic” because it randomly selects a subset of training examples in each iteration to compute the gradient of the loss function. Speaking technically, the gradient represents the direction and magnitude of the steepest descent, indicating how the model parameters should be updated to minimize the loss. It might help to think of this gradient as representing the direction and steepness of a hill that the AI model needs to climb in order to improve its performance. The steeper the slope to climb, the more that the model’s predictions are inaccurate. Technically speaking: a steeper slope means a larger gradient magnitude, which implies a larger error or discrepancy between whatever the model predicted and the expected values from the training data. In our context, during training, the model learns to predict appropriate responses given input queries, leveraging the information from the tokenized feature specs.Lots of Context!

So that’s the context you, as a tester, may have for this case study. Now let’s get into the testability aspects we talked about in the previous post. In the following, when I refer to “source of record” that refers to the sources we’re considering as authoritative for our model training, specifically the entirety of the feature specs and perhaps any supporting material related to those specs. While I provided the above context of a feature spec repo, I would ask you to keep in mind that what I talk about here would apply to any source of record. Perhaps you’re tokenizing a user guide or a knowledge base or an internal wiki.Controllability

Several aspects of controllability would be important for testers in the context I just gave you. Testers should be able to control the preprocessing and cleaning steps applied to the sources of record before tokenization. This may involve removing noise, normalizing text, handling special characters, handling missing values, or any other data preparation steps.

Controllability over preprocessing not only helps drive towards consistent and reproducible training data but also allows testers to see what preprocessing is perhaps needed more than is being applied or, alternatively, what preprocessing is being applied that has negligible effect and thus should be abandoned.

Testers should have control over the parameters and configurations used for tokenization. This includes options such as the tokenization method — usually word-level, character-level, or sub-word-level — vocabulary size, special token handling, and tokenization algorithms. Controlling these parameters allows testers to assess the impact of different tokenization approaches on the AI model’s training and performance.

Testers should have the ability to validate and verify the tokenization process itself. This includes checking if the tokenization accurately represents the original text and making sure that tokenized data is in the expected format. Having control over validation like this allows testers to detect any tokenization errors or inconsistencies.

Depending on the specific requirements of the sources of record and training objectives, testers should be able to customize the tokenization rules. This can involve defining domain-specific rules, handling specific cases or patterns, or incorporating custom tokenization logic. Controllability over tokenization rules like this allows testers to adapt the tokenization process to the unique characteristics of the sources of record.

So, taking the above points, let’s consider this tokenization of our example sentence from above along with one additional statement:

Testers should be able to control the preprocessing and cleaning steps applied to the sources of record before tokenization. This may involve removing noise, normalizing text, handling special characters, handling missing values, or any other data preparation steps.

Controllability over preprocessing not only helps drive towards consistent and reproducible training data but also allows testers to see what preprocessing is perhaps needed more than is being applied or, alternatively, what preprocessing is being applied that has negligible effect and thus should be abandoned.

Testers should have control over the parameters and configurations used for tokenization. This includes options such as the tokenization method — usually word-level, character-level, or sub-word-level — vocabulary size, special token handling, and tokenization algorithms. Controlling these parameters allows testers to assess the impact of different tokenization approaches on the AI model’s training and performance.

Testers should have the ability to validate and verify the tokenization process itself. This includes checking if the tokenization accurately represents the original text and making sure that tokenized data is in the expected format. Having control over validation like this allows testers to detect any tokenization errors or inconsistencies.

Depending on the specific requirements of the sources of record and training objectives, testers should be able to customize the tokenization rules. This can involve defining domain-specific rules, handling specific cases or patterns, or incorporating custom tokenization logic. Controllability over tokenization rules like this allows testers to adapt the tokenization process to the unique characteristics of the sources of record.

So, taking the above points, let’s consider this tokenization of our example sentence from above along with one additional statement:

[ "a", "200", "pound", "person", "will", "weigh", "exactly", "75.6", "pounds", "on", "Mercury", "a", "200", "pound", "person", "will", "weigh", "approximately", "75", "pounds", "on", "Mercury" ]Our design team might customize the tokenizing so that “200 pound”, “75 pounds”, “75.6 pounds” are treated as single tokens and say that these should be understood as domain-specific data conditions. The design team might also have another custom tokenization rule that says “exactly (data condition)” and “approximately (data condition)” should be understood as specificity. Now any data conditions can be applied to a specificity identifier. Testers should be able to control the selection of training samples from the tokenized source of record. This includes the ability to choose specific subsets of the tokenized data based on various criteria. That might be topic, or difficulty level, or relevance, or really any other factors relevant to the training objectives. Controlling the sample selection allows testers to focus on specific aspects of the source of record during training. Testers should have control over filtering or excluding specific portions of the tokenized source of record for training. This can involve removing irrelevant or noisy data, filtering out specific topics or categories, or excluding problematic segments. Controllability over training data filtering ensures that the AI model is trained on what’s considered to be relevant and high-quality information. Testers should be able to augment the training data generated from the tokenized source of record. This can involve techniques such as data synthesis, data expansion, or data perturbation to increase the diversity and robustness of the training data. Controlling the data augmentation process like this allows testers to enhance the model’s generalization capabilities. As just one example, a synthesized sentence from the above might be:

On the planet Mercury, a person weighing 200 pounds will have an exact weight of 75.6 pounds.Whereas data expansion might be:

75.6 pounds is the exact weight of a person weighing 200 pounds on Mercury.The filtering out part might be that we removed any approximate measures and only focused on data that specified exact measures. Key to this, of course, is making sure that what gets output from a query is still valid when such data manipulation is done. Finally, I would say that testers should have control over the training parameters used in the AI model’s training process. This includes aspects such as the learning rate, batch size, optimizer selection, regularization techniques — all of which are referred to as hyperparameters. Controlling these parameters — which are not learned from the data but are established by humans — enables testers to explore different training settings and evaluate their impact on the model’s performance.

Observability

Several aspects of observability would be important for testers in the context we’re talking about. Testers should have access to logs or records that capture the tokenization process. These logs should provide information about the input data, tokenization parameters, tokenization outputs, and any relevant metadata associated with the tokenized source of record. Using our previous example, we might have something like this:

Testers should have access to logs or records that capture the tokenization process. These logs should provide information about the input data, tokenization parameters, tokenization outputs, and any relevant metadata associated with the tokenized source of record. Using our previous example, we might have something like this:

Tokenization Parameters: Language: English Case sensitivity: Case-insensitive Punctuation handling: Keep punctuation marks as separate tokens Split on whitespace: Yes Custom rule: Treat number and weight as data-condition Custom rule: Treat adverb-modify-weight as specificity Tokenization Outputs and Metadata: Sentence 1: Raw text: "a 200 pound person will weigh exactly 75.6 pounds on Mercury" Tokenized output: ["a", "200", "pound", "person", "will", "weigh", "exactly (75.6 pounds)", "on", "Mercury"] Metadata: None Sentence 2: Raw text: "a 200 pound person will weigh approximately 75 pounds on Mercury" Tokenized output: ["a", "200", "pound", "person", "will", "weigh", "approximately (75 pounds)", "on", "Mercury"] Metadata: NoneHere you can see, based on the custom rules applied, the “exactly (75.6 pounds)” and “approximately (75 pounds)” as part of the tokenized output that was logged. The parentheses are simply being used to demarcate the data condition as distinct from the specificity word. Observing the tokenization logs like this helps testers understand how the original text is transformed into tokens and that can help identify any potential issues or inconsistencies. In keeping with the idea of cost-of-mistake curves, you want to find these problems as soon as possible. Testers should have access to comprehensive training logs that capture information about the training process, such as training iterations, batch processing, computational resource utilization, and other relevant details. Observing training logs helps testers understand the training workflow, diagnose potential issues, and, most importantly perhaps, gain insights into the system’s behavior. An example might be this:

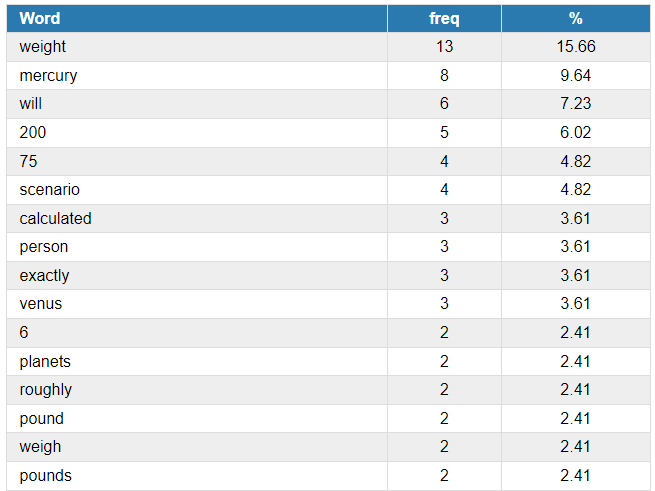

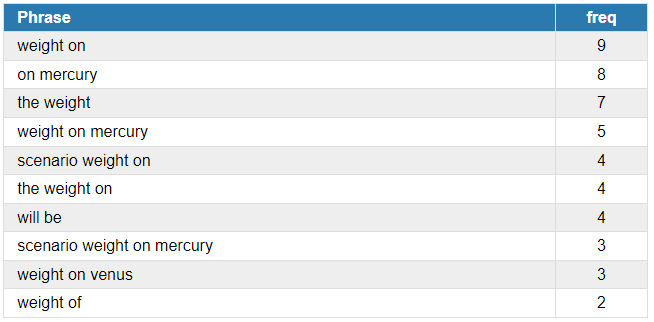

Training Log: Iteration 1: - Epoch: 1/10 - Loss: 2.512 - Accuracy: 0.720 - Training Time: 5 minutes - Resource Allocation: 1 GPU, 16GB RAM Iteration 2: - Epoch: 2/10 - Loss: 1.986 - Accuracy: 0.785 - Training Time: 4 minutes - Resource Allocation: 1 GPU, 28GB RAM ... (additional iterations) Training Completed: - Total Epochs: 10 - Final Loss: 1.108 - Final Accuracy: 0.875 - Total Training Time: 43 minutes - Resource Allocation: 1 GPU, 28GB RAMIn this hypothetical training log, each iteration represents a training step where the model is exposed to a batch of sentences and updates its parameters based on the loss calculated during training. The loss and accuracy values are indicators of how well the model is learning from the data. The training time represents the time taken to complete each iteration. The resource allocation section specifies the hardware resources allocated for training, such as the number of GPUs and the amount of RAM utilized. The above showcases something important: testers should be able to monitor and observe the progress of the AI model’s training process. This includes information about the loss function, accuracy metrics, convergence behavior, training iterations, and any other relevant training progress indicators. Observing the training progress allows testers to track the model’s performance during training and identify potential issues or abnormalities. Again, finding these as soon as possible keeps your cost-of-mistake curve tight. Testers should be able to observe statistical information about the tokenized source of record. This includes metrics such as the total number of tokens, token distribution, average token length, vocabulary size, and any other relevant statistics. An example with our data we might get something like this:

Tokenization Output and Statistics: Total Number of Sentences: 2 Sentence 1: - Raw Text: "a 200 pound person will weigh exactly 75.6 pounds on Mercury" - Tokenized Output: ["a", "200", "pound", "person", "will", "weigh", "exactly", "75.6", "pounds", "on", "Mercury"] - Number of Tokens: 11 - Average Token Length: 4.272 - Vocabulary Size: 10 (excluding duplicates) Sentence 2: - Raw Text: "a 200 pound person will weigh approximately 75 pounds on Mercury" - Tokenized Output: ["a", "200", "pound", "person", "will", "weigh", "approximately", "75", "pounds", "on", "Mercury"] - Number of Tokens: 11 - Average Token Length: 4.0 - Vocabulary Size: 10 (excluding duplicates) Overall Statistics: - Total Number of Sentences: 2 - Total Number of Tokens: 22 - Average Tokens per Sentence: 11 - Average Token Length: 4.136 - Vocabulary Size: 10 (excluding duplicates)By observing data statistics, testers can gain insights into the characteristics and properties of the tokenized data. Testers should have access to visualization tools or interfaces that provide visual representations of the tokenized source of record. These tools can help testers explore the structure and relationships between tokens, identify patterns, detect anomalies, or just gain a high-level understanding of the tokenized data. Visualizations can include lots of formats: word clouds, frequency histograms, scatter plots, tree graphs, and so on. Testers should have visibility into the model’s outputs during the training process. This can involve observing predictions, intermediate representations, or other relevant outputs generated by the model on a subset of the training data. Observing the model outputs provides insights into how the model is learning and adapting to the tokenized source of record. This one gets interesting to show without a more full example. But with our sentences, consider this:

Tokenized Input: ["a", "200", "pound", "person", "will", "weigh", "exactly", "75.6", "pounds", "on", "Mercury"] Intermediate Representation: [0.82, -1.45, 0.37, 0.12, 1.55, -0.89, 1.21, 0.73, -0.55, 0.98, 1.08] Tokenized Input: ["a", "200", "pound", "person", "will", "weigh", "approximately", "75", "pounds", "on", "Mercury"] Intermediate Representation: [0.86, -1.51, 0.42, 0.08, 1.52, -0.85, 1.19, 0.69, -0.52, 0.96, 1.10]Each token in the tokenized sentences is associated with a corresponding value in the intermediate representation. These values are learned by the model through the training process and capture the encoded information about the tokens in a continuous representation space. A “continuous representation space” mainly refers to mathematical or conceptual space where data points are represented as continuous vectors or points rather than discrete categories or labels. But what about predictions here? Well, let’s say the AI model is now reading further in the spec and it starts to see this:

a 200 pound person will weighWhat will come next? Well, the prediction might show up as this:

Prediction Log: Input: "a 200 pound person will weigh" Predicted Output: ["exactly", "approximately"] Confidence: 50%The confidence value is indicated as 50%, signifying an equal probability assigned to each predicted word. Let’s say it was correct and the next word was “exactly.” Then the next prediction might be:

Prediction Log: Input: "a 200 pound person will weigh exactly" Predicted Output: ["[NUMBER]", "[PLANET]"] Confidence: 50%So the AI is figuring that whatever is next will be a NUMBER of some sort and a PLANET name. Notice that the AI, in this case, is not using the “pounds” or “on” part of the sentence. Maybe we removed that via a training rule. Or maybe it learned that when this kind of sentence occurs, it always uses the same weight measure as the weight of the person and the “on” part is assumed for a planet. So this:

"a 200 pound person will weigh exactly 75.6 pounds on Mercury"Becomes this:

"a 200 pound person will weigh exactly 75.6 Mercury"And, in fact, internally, it might even be training as:

"200 pound exactly 75.6 Mercury"This is a really simple example. But even with this, I bet you can see how things could potentially go wrong if certain contextual information were not included. Imagine the scenario I’m describing to you being quite a bit more complex. Say, something like this:

Scenario: CRO Customer, Built-In Resources

GIVEN a CRO customer with a standard plan that has a CRO service provider

AND a CRO service provider called "TestCRO" that has the following:

a published any sponsor billing rate card

a published deleted sponsor-specific billing rate card

WHEN you replace the plan's original CRO service provider with TestCRO

THEN the rates for each of the built-in resources for a location match the any sponsor billing rate card

And then consider when that scenario is just part of a larger feature spec with many such scenarios:

Feature: Billing Rate Cards

Requirement: A plan should always use the "active" billing rate card.

Requirement: A plan should never use rates from a deleted billing rate card.

Scenario: CRO Customer, Built-In Resources

GIVEN a CRO customer with a standard plan that has a CRO service provider

AND a CRO service provider called "TestCRO" that has the following:

a published any sponsor billing rate card

a published deleted sponsor-specific billing rate card

WHEN you replace the plan's original CRO service provider with TestCRO

THEN the rates for each of the built-in resources for a location match the any sponsor billing rate card

Scenario: CRO Customer, Custom Resources

Scenario: Sponsor Customer, Built-In Resources

Scenario: Sponsor Customer, Custom Resources

Testers should be able to observe evaluation metrics that assess the performance of the AI model trained on the tokenized source of record. These metrics can include accuracy, precision, recall, as well as various other measures that are relevant for the specific task, such as similarity or coherence. Observing evaluation metrics helps testers assess the effectiveness of the training process and identify areas for improvement.

I’m going to be talking about many of those types of evaluations in subsequent posts so I’ll hold off on that here.

Testers should be able to perform detailed error analysis on the model’s performance during training. This involves observing and analyzing errors made by the model, examining misclassifications, understanding common failure modes, and identifying patterns or trends in the errors. Error analysis provides insights into the challenges that the AI model is facing as it trains on the tokenized source record. This can potentially point to limitations of the model itself.

Trustability

That last part is key. This is about a primary quality attribute: trustability. If we don’t trust the system, why would we expect our users to? And if we don’t trust the system, why are we exposing it to our users in the first place?Interpretability

And that leads to another key important quality: interpretability. Depending on the interpretability techniques employed, testers should have observability into the interpretability aspects of the AI model. This can involve observing feature importance, attention weights, saliency maps, or other interpretability outputs that help understand how the model makes predictions, like I showed you above, based on the tokenized source of record.Reproducibility

The ability to reproduce really just focuses on being able to observe and control as I talked about above. But let’s consider a few specifics here.

Testers should ensure that the version control of the source of record and any associated data or resources is well-maintained. This includes keeping track of the specific versions of the source of record and any changes made during the testing process. Reproducibility requires the ability to recreate the same tokenization and training environment using the exact version of the source of record.

Testers should document and reproduce the tokenization pipeline used to tokenize the source of record. This includes capturing the steps, configurations, and any pre- or post-processing applied during the tokenization process. A clear and well-documented tokenization pipeline allows for consistent tokenization of the source of record and that means we can better facilitate reproducibility of the training data.

If randomness is involved in the training process — random initialization, random shuffling of data, etc — testers should ensure that the random seeds are controlled and properly documented. Reproducibility requires setting and preserving the same random seeds to obtain consistent training results across different runs.

If the training data is split into subsets — training set, validation set, and so on — testers should document the specific splits used. This includes providing information on the data partitioning strategy, the proportions of each subset, and any specific considerations in the split process. Reproducibility requires using the same data splits for consistency in training and evaluation.

Testers should document all hyperparameters used during the training process. This includes parameters such as learning rate, batch size, regularization techniques, optimizer settings, and any other relevant hyperparameters. The key point here being that reproducibility relies on being able to reproduce the same hyperparameter settings for consistent training outcomes.

Finally, testers should save checkpoints of the trained models and document the process of saving and restoring the models. This can help make sure that the trained models are capable of being reloaded and evaluated consistently. Reproducibility requires preserving the trained models’ states because this allows for reusing and comparing them during the testing process.

But let’s consider a few specifics here.

Testers should ensure that the version control of the source of record and any associated data or resources is well-maintained. This includes keeping track of the specific versions of the source of record and any changes made during the testing process. Reproducibility requires the ability to recreate the same tokenization and training environment using the exact version of the source of record.

Testers should document and reproduce the tokenization pipeline used to tokenize the source of record. This includes capturing the steps, configurations, and any pre- or post-processing applied during the tokenization process. A clear and well-documented tokenization pipeline allows for consistent tokenization of the source of record and that means we can better facilitate reproducibility of the training data.

If randomness is involved in the training process — random initialization, random shuffling of data, etc — testers should ensure that the random seeds are controlled and properly documented. Reproducibility requires setting and preserving the same random seeds to obtain consistent training results across different runs.

If the training data is split into subsets — training set, validation set, and so on — testers should document the specific splits used. This includes providing information on the data partitioning strategy, the proportions of each subset, and any specific considerations in the split process. Reproducibility requires using the same data splits for consistency in training and evaluation.

Testers should document all hyperparameters used during the training process. This includes parameters such as learning rate, batch size, regularization techniques, optimizer settings, and any other relevant hyperparameters. The key point here being that reproducibility relies on being able to reproduce the same hyperparameter settings for consistent training outcomes.

Finally, testers should save checkpoints of the trained models and document the process of saving and restoring the models. This can help make sure that the trained models are capable of being reloaded and evaluated consistently. Reproducibility requires preserving the trained models’ states because this allows for reusing and comparing them during the testing process.

Predictability

Just as the ability to observe and control led to being able to reproduce, being able to reproduce helps us with the ability to predict. Testers should establish performance benchmarks or baselines for the AI-enabled product. These benchmarks represent the expected level of performance based on previous tests or perhaps on industry standards, when and if they become available. By comparing the actual performance of the product to the established benchmarks, testers can assess its predictability in achieving certain performance levels.

Testers should monitor the training process and evaluate the system’s ability to converge to a stable and optimal state. “Convergence” here refers to the process by which an AI system iteratively refines its state towards a stable and desired outcome. So this includes observing the convergence speed, loss function trends, and other indicators of training progress. Predictability in training convergence means that the system consistently reaches a similar state when trained on the same data and configurations.

Testers should assess the AI system’s ability to generalize its knowledge beyond the training data. This involves evaluating its performance on unseen or test data that represents real-world scenarios. Predictability in generalization means that the system can consistently perform well on new, unseen examples, demonstrating its ability to apply learned knowledge to novel situations. This is highly relevant to our case study example where the feature specs will presumably be modified, added to, and so on over time.

Testers should conduct sensitivity analysis by varying different parameters, settings, or inputs to observe how the system’s performance changes. By systematically modifying these factors and observing their impact on performance, testers stand a good chance of assessing the predictability of the system’s behavior and are more likely to be able to identify its sensitivity to different conditions. Importantly, this is where you can start to see if the system’s predictions degrade and, if so, under what conditions.

Likewise, testers should evaluate the system’s robustness by subjecting it to variations in the input data, such as different tokenization techniques or different subsets of the source of record. This allows testers to assess the system’s predictability in maintaining performance across different data variations and ensures its reliability in diverse scenarios. So, for example, we had this:

Testers should establish performance benchmarks or baselines for the AI-enabled product. These benchmarks represent the expected level of performance based on previous tests or perhaps on industry standards, when and if they become available. By comparing the actual performance of the product to the established benchmarks, testers can assess its predictability in achieving certain performance levels.

Testers should monitor the training process and evaluate the system’s ability to converge to a stable and optimal state. “Convergence” here refers to the process by which an AI system iteratively refines its state towards a stable and desired outcome. So this includes observing the convergence speed, loss function trends, and other indicators of training progress. Predictability in training convergence means that the system consistently reaches a similar state when trained on the same data and configurations.

Testers should assess the AI system’s ability to generalize its knowledge beyond the training data. This involves evaluating its performance on unseen or test data that represents real-world scenarios. Predictability in generalization means that the system can consistently perform well on new, unseen examples, demonstrating its ability to apply learned knowledge to novel situations. This is highly relevant to our case study example where the feature specs will presumably be modified, added to, and so on over time.

Testers should conduct sensitivity analysis by varying different parameters, settings, or inputs to observe how the system’s performance changes. By systematically modifying these factors and observing their impact on performance, testers stand a good chance of assessing the predictability of the system’s behavior and are more likely to be able to identify its sensitivity to different conditions. Importantly, this is where you can start to see if the system’s predictions degrade and, if so, under what conditions.

Likewise, testers should evaluate the system’s robustness by subjecting it to variations in the input data, such as different tokenization techniques or different subsets of the source of record. This allows testers to assess the system’s predictability in maintaining performance across different data variations and ensures its reliability in diverse scenarios. So, for example, we had this:

a 200 pound person will weigh exactly 75.6 pounds on MercuryThe system should be able to generalize to something like this:

a person that weighs 200 pounds on Earth will weigh 75.6 pounds on MercuryAnd the above brings up a good point. As a tester, you may have noticed that nowhere in the previous examples did we specify that we meant a person weighed 200 pounds on Earth. Did you assume that? If you did, it’s highly unlikely the AI would have assumed something different. In this case, that assumption happens to be correct. But what if the assumption was wrong? Finally, testers should perform detailed error analysis to understand the patterns and types of errors made by the AI system. By identifying common failure modes, testers can assess the predictability of the system in handling specific types of challenges or edge cases. This analysis helps improve the system’s predictability by addressing known error patterns. Sometimes these error patterns occur due to faulty assumptions like I just talked about.