In part 1 of this post we talked about a human learning to play a game like Elden Ring to overcome its challenges. We looked at some AI concepts in that particular context. One thing we didn’t do though is talk about assessing any quality risks with testing based on that learning. So let’s do that here.

One of the key qualities of any game — just like any application — is trust. The player has to trust that the game is playing by a certain set of rules. And that the time invested in learning those rules will pay off because it will allow the player to get better at the game and overcome challenges. If players feel that the game is “rigged,” so to speak, or the deck is so stacked against them that no amount of learning will improve skill, players get demotivated and simply stop playing the game.

In any of the Soulsborne games, which are known for their overall difficulty, it’s critical to understand the experience that players are getting. Since so much of the game is predicated upon combat, a key quality to look at is the “fairness” of that combat.

But is fairness something that can be ferreted out by AI? Well, let’s take a look.

To spoil the ending a bit: yes, AI can determine that there are “fairness” problems. What it generally can’t determine, however, is why, except in the most obvious cases. Understanding why a quality like “fairness” may be compromised requires some interpretability and explainability, two key qualities that testers are going to have to get familiar with when working in an AI context.

The Game Uses AI

The combat in a game like Elden Ring is predicated upon enemy AI. Meaning, the game utilizes algorithms to create dynamic encounters. One very common example is the use of finite state machines. These are used to model the enemy’s behavior as a set of discrete states and transitions. Each state represents a specific behavior or action, such as idle, attacking, or dodging, and the transitions between states are triggered by certain conditions or events. You’ll see this a bit later on when I talk about what happens when you close the distance to certain boss enemies.
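To make the state machine idea concrete, here's a minimal sketch in Python. The state names, the distance thresholds, and the `next_state` function are all hypothetical and chosen only for illustration; none of them come from the game itself.

```python
from enum import Enum, auto


class BossState(Enum):
    IDLE = auto()
    APPROACHING = auto()
    ATTACKING = auto()
    DODGING = auto()


# Hypothetical thresholds, in arbitrary distance units.
MELEE_RANGE = 3.0
AGGRO_RANGE = 15.0


def next_state(state, distance_to_player, player_is_attacking):
    """Return the boss's next state given simple, made-up trigger conditions."""
    if state is BossState.IDLE:
        # The boss wakes up once the player is close enough.
        if distance_to_player <= AGGRO_RANGE:
            return BossState.APPROACHING
        return BossState.IDLE
    if state is BossState.APPROACHING:
        # Closing the distance triggers the transition into an attack.
        if distance_to_player <= MELEE_RANGE:
            return BossState.ATTACKING
        return BossState.APPROACHING
    if state is BossState.ATTACKING:
        if player_is_attacking:
            return BossState.DODGING
        if distance_to_player > MELEE_RANGE:
            return BossState.APPROACHING
        return BossState.ATTACKING
    # DODGING always resolves back to closing the distance.
    return BossState.APPROACHING
```

Each call is one transition, so a tester can replay a recorded sequence of distances and check that the observed states match what the rules predict.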

But how do you test for this? Do you just sit there and play the game, over and over, fighting the same boss and trying to see how “fair” it is? How do you, as a human tester, actually know that the boss is obeying the rules of the algorithm?

Well, that’s where you can leverage AI to augment (not replace!) your human testing. So let’s dig into this a bit.

An AI Tester

For those who aren’t familiar with the game at all, let me provide an example of an encounter.

This example shows a boss fight and, particularly, one where the boss has a so-called “Phase 2,” which means they transform into a more powerful version of themselves at some point during the fight. I kept the sound on in this video so you can get a feel for the full experience. Sounds often do play a part in the combat, such as indicating what type of attack an enemy is getting ready to unleash on you.

It’s a slightly over five minute video which shows that boss fights are not necessarily over quickly. (Well, when you’re under-leveled or unskilled, they can be over very quickly.) Even if you’ve never played the game, you can certainly see a lot of the mechanics in action. There’s a lot of learning that goes on there to get good at this encounter.

Incidentally, for those who are fans of the game, I should note that Boss Fight Database has put together a compilation of all boss fights in the game, if you want to see them.

Similar to my “Flappy Tester”, which showed how to write an AI to test a version of Flappy Bird, I wrote a tool to test the AI that the bosses use in Elden Ring. I’ll cover that a bit here and then talk about some details.

Due to non-disclosure agreements, I’m not allowed to release the full source code of this test harness but I can release enough to show the basic ideas. I should also note that the original tool was written in C++ but I’m not allowed to show the basic ideas in that language, so I’ll use Python instead.

More importantly, however, I can show footage of the bosses in action and use that footage to describe what an AI tester (a tool) can find and what an AI tester (a human) can find. So note what I did here: I wrote an AI test harness to test the AI of another program. But the analysis of what the “AI Tester” showed me required … well, me. It required a human.

I’m purposely using “AI Tester” to encompass both the tool and the human to show we don’t have to get stuck on coming up with different terms for each. In my experience, very few people actually get confused about this distinction in the real world.

Data and Structures

So, first, let’s consider a structure representing some of the data for the boss’s attacks. Pretty simple stuff:

```python
class BossAttack:
    def __init__(self, timing, animation, hitboxes, damage, effects, behavior):
        self.timing = timing
        self.animation = animation
        self.hitboxes = hitboxes
        self.damage = damage
        self.effects = effects
        self.behavior = behavior

...

class MargitAttack(BossAttack):
    ...

class GodrickAttack(BossAttack):
    ...
```

In this example, the BossAttack class represents a single attack of a given boss and then I can subclass that for individual bosses. This base class contains various attributes to store the attack’s characteristics.

- timing: A float value representing the timing of the attack.

- animation: A list of float values capturing animation parameters, such as attack type, trajectory, and speed.

- hitboxes: A list of tuples, where each tuple contains the (x, y, z) coordinates defining a hitbox for the attack.

- damage: A float value indicating the damage potential of the attack.

- effects: A list of integers representing the presence of specific effects associated with the attack.

- behavior: A list of integers encoding the sequence of actions or events that make up the attack pattern.

You might recognize those characteristics as what we ended the previous post with.

It’s useful to store multiple attacks and organize them within a structure as well. Again, pretty simple stuff:

```python
class BossAttacksCollection:
    def __init__(self):
        self.attacks = []

    def add_attack(self, attack):
        self.attacks.append(attack)

    def get_attack(self, index):
        return self.attacks[index]

    def get_num_attacks(self):
        return len(self.attacks)
```

The BossAttacksCollection class provides methods to add attacks to a list, retrieve those attacks by indexing into the list, and obtain the total number of attacks stored.

The idea here was that I could populate instances of BossAttack with the desired attack data and store them within a BossAttacksCollection object. This allowed me to manage and access the boss’s attack information effectively during training of the AI as well as being useful for analyzing the decision-making processes within the AI system. So how that might look for a given boss is:

```python
class GodrickAttack(BossAttack):
    def __init__(self):
        self.attacks = BossAttacksCollection()
```

Put another way, this was a very simple mechanism to provide some observability.
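As a usage sketch, here's how populating a collection might look. The two classes are repeated in compact form so the snippet runs on its own, and every attack value is a made-up placeholder rather than real game data. The names `slam` and `sweep` are likewise just illustrative.

```python
class BossAttack:
    def __init__(self, timing, animation, hitboxes, damage, effects, behavior):
        self.timing = timing
        self.animation = animation
        self.hitboxes = hitboxes
        self.damage = damage
        self.effects = effects
        self.behavior = behavior


class BossAttacksCollection:
    def __init__(self):
        self.attacks = []

    def add_attack(self, attack):
        self.attacks.append(attack)

    def get_attack(self, index):
        return self.attacks[index]

    def get_num_attacks(self):
        return len(self.attacks)


# Placeholder values only; these are not measurements from the game.
slam = BossAttack(
    timing=0.4,
    animation=[0.7, 0.2, 0.5],
    hitboxes=[(0.6, 0.8, 1.0)],
    damage=2.5,
    effects=[1, 0],
    behavior=[1, 1, 0, 1, 0],
)
sweep = BossAttack(
    timing=0.2,
    animation=[0.5, 0.3, 0.8],
    hitboxes=[(0.4, 0.6, 0.7)],
    damage=1.5,
    effects=[0, 1],
    behavior=[0, 1, 0, 0, 1],
)

collection = BossAttacksCollection()
collection.add_attack(slam)
collection.add_attack(sweep)

# Walk the collection to inspect what the harness would see.
for i in range(collection.get_num_attacks()):
    attack = collection.get_attack(i)
    print(i, attack.timing, attack.damage)
```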

Numerical Representations

Everything, of course, is numbers. So let’s consider the numerical representation of some boss’s attack attributes to be fed to the AI tester. It’s most useful to structure this as a matrix or tensor, where each row represents an attack and each column represents a specific attribute. Here’s an example:

```python
import numpy as np

# n and the *_value / hitbox_* / effect* / behavior* names below are
# placeholders standing in for real attack data.
num_attacks = n

timing_dim = 1
animation_dim = 3
hitboxes_dim = 3
damage_dim = 1
effects_dim = 2
behavior_dim = 5

attacks_data = np.zeros((num_attacks, timing_dim + animation_dim + hitboxes_dim + damage_dim + effects_dim + behavior_dim))

for i in range(num_attacks):
    attacks_data[i] = [
        timing_value,
        animation_value1, animation_value2, animation_value3,
        hitbox_x, hitbox_y, hitbox_z,
        damage_value,
        effect1_presence, effect2_presence,
        behavior1, behavior2, behavior3, behavior4, behavior5,
    ]
```

In this example, there are dimensions for each attribute.

- timing_dim: 1 (a single float representing timing)

- animation_dim: 3 (three floats for animation parameters)

- hitboxes_dim: 3 (three floats for hitbox coordinates)

- damage_dim: 1 (a single float representing damage)

- effects_dim: 2 (two integers for effect presence)

- behavior_dim: 5 (five integers for behavior sequence)

The attacks_data matrix is created as a NumPy array with the shape (num_attacks, total_dimensions), where total_dimensions is the sum of all the attribute dimensions. This creates a numerical representation for the attacks.

In C++, I used xtensor for this.
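To show the flattening step with concrete values, here's a small runnable sketch. The helper name `attack_to_row` is hypothetical, and the attack values are placeholders, not game data. It assumes one hitbox per attack so that each row matches the dimensions listed above.

```python
import numpy as np


def attack_to_row(timing, animation, hitbox, damage, effects, behavior):
    """Flatten one attack's attributes into a single 1-D feature row."""
    return np.concatenate([
        [timing],    # 1 value
        animation,   # 3 values
        hitbox,      # 3 values (x, y, z)
        [damage],    # 1 value
        effects,     # 2 values
        behavior,    # 5 values
    ]).astype(float)


# Placeholder attacks, purely illustrative.
rows = [
    attack_to_row(0.4, [0.7, 0.2, 0.5], (0.6, 0.8, 1.0), 2.5, [1, 0], [1, 1, 0, 1, 0]),
    attack_to_row(0.2, [0.5, 0.3, 0.8], (0.4, 0.6, 0.7), 1.5, [0, 1], [0, 1, 0, 0, 1]),
]

# Stack the rows so each attack is one row of the matrix.
attacks_data = np.vstack(rows)
print(attacks_data.shape)  # (2, 15)
```

The 15 columns are just 1 + 3 + 3 + 1 + 2 + 5, the sum of the attribute dimensions.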

In that video above, all that you saw taking place on the screen is essentially represented by these matrices. In fact, that example you saw in the video was used to generate training data.

What happens here is that the attacks_data matrix is populated with numerical values representing each attack’s attributes. Each row in the matrix corresponds to a separate attack, and each column represents a specific attribute value for that attack. This numerical representation could then be fed to the AI tester for training and prediction. Just to bring this home a little bit, here’s an example of an attacks_data matrix representing the numerical representation of a particular boss’s attack attributes:

```python
class GodrickAttacks(BossAttacks):
    ...

    def generate_data(self):
        self.attacks_data = np.array([
            [0.4, 0.7, 0.2, 0.5, 0.6, 0.8, 1.0, 2.5, 1.0, 0.0, 1.0, 1.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 1.0, 1.0],
            [0.2, 0.5, 0.3, 0.8, 0.4, 0.6, 0.7, 1.5, 0.8, 1.0, 0.0, 1.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0, 0.0],
            [0.6, 0.3, 0.9, 0.2, 0.2, 0.4, 0.5, 1.2, 0.5, 0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 1.0]
        ])
```

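In this example, there are three attacks, and each attack is represented by a row in the attacks_data matrix. The attribute dimensions defined earlier sum to 15 (1 + 3 + 3 + 1 + 2 + 5), and those are the 15 columns broken down below. Note that each sample row above actually carries 20 values, so the array as written has the shape (3, 20); the five trailing columns aren’t covered by the dimensions listed here. Here’s a breakdown of the first 15 columns.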

- Column 1: Timing values of each attack.

- Columns 2-4: Animation values (e.g., attack type, trajectory, speed) for each attack.

- Columns 5-7: Hitbox coordinates (x, y, z) for each attack.

- Column 8: Damage values for each attack.

- Columns 9-10: Effect presence indicators for each attack.

- Columns 11-15: Behavior sequence indicators for each attack.

The main point to take away from this is that the structure and content of the attacks_data matrix depends on the specific attributes and their values in the context of a given boss’s attacks. And, once again, what you saw in the above video is essentially what gets reduced to extremely large matrices like you see in the code above.

Preprocessing the Data

In my case study post, I mentioned how preprocessing happens on data. The context of that post was text tokenization but the idea of data preprocessing is just as relevant here. Case in point: the numbers in the above matrix can represent normalized or scaled values. And by this I’m referring to the process of adjusting the numerical values to a specific range or scale that’s appropriate for the attributes you’re using in your model.

Normalization is where the values of a variable are rescaled to a range between 0 and 1 or -1 and 1. What this does is make sure that the values fall within a consistent scale. This prevents certain attributes from dominating others due to their larger magnitude.

Scaling, on the other hand, involves transforming the values of a variable to a different range or scale. This is without necessarily restricting them to a specific range like normalization does. Scaling is useful when certain attributes have different scales or units.

In the context of the attacks_data matrix in my AI tester, scaling — specifically, feature scaling — was used. The specific method was called z-score scaling and what that does is transform the values of each feature to have a mean of 0 and a standard deviation of 1. You do that by subtracting the mean of the feature and dividing by the standard deviation. And when I say “feature” here just understand that as referring to an individual measurable property or characteristic of the data that’s used as an input to the model.
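Here's a minimal NumPy sketch of both ideas, applied column by column. The z-score for a value x in a feature with mean μ and standard deviation σ is (x − μ) / σ. The function names and the sample matrix are my own placeholders, and the guard for constant columns is an assumption about how you'd want to handle a zero standard deviation.

```python
import numpy as np


def min_max_normalize(data):
    """Rescale each column to the range [0, 1]."""
    col_min = data.min(axis=0)
    col_range = data.max(axis=0) - col_min
    # Constant columns would divide by zero; map them to 0 instead.
    col_range[col_range == 0] = 1.0
    return (data - col_min) / col_range


def z_score_scale(data):
    """Give each column a mean of 0 and a standard deviation of 1."""
    mean = data.mean(axis=0)
    std = data.std(axis=0)
    # Same guard as above for columns with no variation.
    std[std == 0] = 1.0
    return (data - mean) / std


# Placeholder features with very different magnitudes per column.
raw = np.array([
    [0.4, 2.5, 50.0],
    [0.2, 1.5, 60.0],
    [0.6, 1.2, 80.0],
])

normalized = min_max_normalize(raw)
scaled = z_score_scale(raw)
print(scaled.mean(axis=0))  # each entry is approximately 0
print(scaled.std(axis=0))   # each entry is approximately 1
```

After scaling, the third column no longer dominates the other two just because its raw values are larger.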

The AI Tester Code

Okay, so now let’s look at how some of this comes together. I’ll try to give you some idea of the structure of a simplified implementation for training an AI agent on a modified version of Elden Ring combat scenarios. First you need to create an instrumented example of the game itself:

```python
class EldenRing:
    def __init__(self):
        self.player_attributes = {}
        self.enemy_attributes = {}
        self.environment = {}
        self.training_data = []

    def get_training_data(self):
        return self.training_data

    def update_game_state(self):
        pass

    def simulate_combat(self):
        self.training_data.append({
            'player_attributes': self.player_attributes,
            'enemy_attributes': self.enemy_attributes,
            'environment': self.environment,
            # much other relevant data
        })

    def apply_ai_decision(self, decision):
        pass
```

This class represents the game environment and maintains the game state, including player attributes, enemy attributes, and various environmental factors. The update_game_state() method updates the game state based on actions and events.

The simulate_combat() method simulates the combat based on the current game state. This method also applies combat rules, damage calculations, and other mechanics relevant to combat in the game. The method also collects data on actions taken (“swing sword at enemy”), the outcomes from those actions (“sword intersects with enemy hitbox”), and any game state changes during combat (“enemy health reduced by x amount”).

How this works is that the get_training_data() method in the class returns the collected training data. The training data is stored in the training_data attribute, which is initially an empty list. During the combat simulation in the simulate_combat() method, all the relevant data I just talked about is collected and that data is then appended to the training_data list. Also appended to this training data are rewards (“attack led to the boss losing health”) and punishments (“boss lost no health due to attack” or “player lost health”).

The ability to simulate the combat, and attach positive rewards to it, is where a lot of the controllability comes in. This is, unfortunately, one part of the source that I can’t release even in broad details.

I’ll come back to that apply_ai_decision() method in a bit.

With the game class established, I now had to create the AI that would be used for playing the game for training and testing. The overall structure was this:

```python
class AI:
    def __init__(self):
        self.model = None

    def train(self, game_data):
        pass

    def make_decision(self, game_state):
        pass

    def save_model(self, filename):
        pass

    def load_model(self, filename):
        pass
```

This class essentially encapsulates the AI agent. It includes methods for training the AI model, making decisions based on the game state, as well as saving and loading the trained model. Those last parts are important because that’s how I can get reproducibility.

The train() method is what trains the AI model using game data collected during the combat encounters. The make_decision() method is how the AI model, during training, decides what actions to take, based on the current game state. It’s actually here that the rewards and punishments are established based on the simulated combat.

It’s the decisions made here that the AI is trying to optimize. Remember, this is about a boss encounter. So any decisions being made are in the context of the boss attacking the player. The specific decisions made are thus how to respond to those attacks. It’s not enough to just respond, of course. The desired outcome — the training objective — is to defeat the boss. Defeating the boss requires getting their health down to zero.

This is where we start to check for our predictability. Meaning, can the model start to predict how to better counter the attacks of a boss and, in turn, perform attacks on the boss such that the boss is defeated?

Finally, there’s a test harness that brings this all together and here I’ll just give a simplified runner for it:

```python
def main():
    er = EldenRing()
    ai = AI()

    for episode in range(num_episodes):
        er.reset()

        while not er.you_died():
            er.update_game_state()

            ai_decision = ai.make_decision(er.get_game_state())

            er.apply_ai_decision(ai_decision)

        game_data = er.get_training_data()

        ai.train(game_data)

    ai.save_model("trained_model.pth")
    ai.load_model("trained_model.pth")

if __name__ == "__main__":
    main()
```

What you essentially have here is a training loop that provides structure for training the AI agent on combat scenarios in the game. The main parts are really this:

```python
er.update_game_state()

ai_decision = ai.make_decision(er.get_game_state())

er.apply_ai_decision(ai_decision)
```

The game state is updated, a decision is made based on that game state, and then the decision is applied. Applying the decision updates the game state. And the loop continues.
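To see that loop run end to end, here's a toy sketch with stand-in classes. `ToyGame` and `RandomAgent` are hypothetical stubs with invented health and damage numbers. They share only the method names with the harness, and I've added a `boss_defeated()` check and a step cap (both my own assumptions) so the episode always terminates.

```python
import random


class ToyGame:
    """Stand-in for the instrumented game; all numbers are invented."""

    def __init__(self, rng):
        self.rng = rng
        self.reset()

    def reset(self):
        self.player_health = 100
        self.boss_health = 100
        self.incoming_damage = 0
        self.training_data = []

    def you_died(self):
        return self.player_health <= 0

    def boss_defeated(self):
        return self.boss_health <= 0

    def update_game_state(self):
        # The boss winds up an attack with random damage.
        self.incoming_damage = self.rng.randint(5, 20)

    def get_game_state(self):
        return {
            'player_health': self.player_health,
            'boss_health': self.boss_health,
            'incoming_damage': self.incoming_damage,
        }

    def apply_ai_decision(self, decision):
        state = self.get_game_state()
        if decision['action'] != 'dodge':
            # Trading blows: the player hits the boss but eats the attack.
            self.boss_health -= 15
            self.player_health -= self.incoming_damage
        # Record the state the decision was made in, plus the action taken.
        self.training_data.append((state, decision['action']))

    def get_training_data(self):
        return self.training_data


class RandomAgent:
    """Picks actions at random; a placeholder for a trained model."""

    def __init__(self, rng):
        self.rng = rng

    def make_decision(self, game_state):
        return {'action': self.rng.choice(['dodge', 'standard_attack'])}


def run_episode(game, agent, max_steps=200):
    game.reset()
    for _ in range(max_steps):
        if game.you_died() or game.boss_defeated():
            break
        game.update_game_state()
        decision = agent.make_decision(game.get_game_state())
        game.apply_ai_decision(decision)
    return game.get_training_data()


episode_data = run_episode(ToyGame(random.Random(0)), RandomAgent(random.Random(1)))
print(len(episode_data), "steps recorded")
```

Seeding both random generators makes the episode repeatable, which is the same reproducibility concern that saving and loading the model addresses.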

Instrumenting the Game

It’s crucial to note that the testing is done on an instrumented version of Elden Ring. You could apply a reinforcement learning algorithm to the actual gameplay, including the graphics. That is prohibitively expensive not to mention exceedingly difficult given the graphical fidelity of the game.

But you don’t need to do that. Remember, all of the graphical details, including the animations, are ultimately reduced to numbers. So the mechanics that play out are reduced to numbers and that’s what the AI learns from.

Ah, but that’s not what the human learns from, right? You, as a player, are not seeing all those numbers. You are seeing something like this:

That’s the boss Malenia coming to make your life difficult. If you want to see what Malenia looks like behind the scenes, here’s a C-based representation of what she “looks like” when she’s trained on. Note that’s a 16 megabyte C file!

But does this matter in terms of assessing how fair or not the boss is? Well, yes and no. Mechanically, it does not. Dynamically, however, it does. I’ll come back to that.

My main point here is that instead of rendering graphics for real-time gameplay, my AI tester interacts with a simulated environment where combat calculations and interactions occur. In a tester context, what you might say here is that we don’t have an end-to-end test but, rather, an edge-to-edge test. Or we’re using a form of integration testing that doesn’t require the full user interface.

The focus is on the underlying game mechanics and rules rather than visual rendering. This is very similar to what I had to do in my Pacumen example, where I built reinforcement learning around the game Pac-Man. Specifically, I used a series of instrumented maze layouts. For example, I have the Open Classic layout, which shows Pac-Man as “P” and ghosts as “G” and pellets as “.” characters. The maze boundary is made up of “%” characters.

The numerical representation is quite a bit more involved in a game like Elden Ring but essentially it’s just a very large matrix of those numbers I showed you earlier. You’ll have lots of matrices that look something like this:

Attack Data

[Slash, 2.5, 50, 0.8, 20]

[Thrust, 3.0, 60, 1.2, 25]

[Sorcery, 10.0, 80, 2.5, 50]

...

Environment Data

[100, 80, [10.2, 5.1, 2.0], [8.5, 4.5, 2.2], 3.5, 60]

[75, 65, [9.8, 4.9, 2.1], [8.3, 4.3, 2.1], 3.1, 50]

...

The attack data is made up of Attack Type, Attack Range, Attack Damage, Attack Speed, and Attack Stamina Cost. The environment data is made up of Player Health, Enemy Health, Player Position, Enemy Position, Distance to Enemy, and Player Stamina. Then you get the following:

Combat Data

[Slash, 2.5, 50, 0.8, 20, 100, 80, [10.2, 5.1, 2.0], [8.5, 4.5, 2.2], 3.5, 60]

[Thrust, 3.0, 60, 1.2, 25, 75, 65, [9.8, 4.9, 2.1], [8.3, 4.3, 2.1], 3.1, 50]

...

The overall takeaway here is that you’re using an instrumented and abstracted version of the game in order to train and test.
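As a rough sketch of how a combat row comes together, the snippet below joins the first attack row and the first environment row shown above, then flattens the result into plain numbers. The `ATTACK_TYPE_IDS` mapping and both helper functions are hypothetical; a real harness would likely use a richer encoding for the attack type.

```python
# Hypothetical integer encoding for the attack type label.
ATTACK_TYPE_IDS = {"Slash": 0, "Thrust": 1, "Sorcery": 2}


def flatten(row):
    """Expand nested position lists so the row is a flat sequence."""
    flat = []
    for item in row:
        if isinstance(item, (list, tuple)):
            flat.extend(item)
        else:
            flat.append(item)
    return flat


def to_numeric(combat_row):
    """Flatten a combat row and replace the attack label with its id."""
    flat = flatten(combat_row)
    flat[0] = ATTACK_TYPE_IDS[flat[0]]
    return [float(value) for value in flat]


attack_row = ["Slash", 2.5, 50, 0.8, 20]
environment_row = [100, 80, [10.2, 5.1, 2.0], [8.5, 4.5, 2.2], 3.5, 60]

# A combat row is the attack data followed by the environment data.
combat_row = attack_row + environment_row
numeric_row = to_numeric(combat_row)
print(len(numeric_row))  # 15
```

The length is 15 because the two three-component positions expand into six separate values.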

A Little More Code

Let’s get a feel for what some of the code would be in that test harness I showed you. The make_decision() method looks something like this:

```python
import random

def make_decision(self, game_state):
    player_attributes = game_state['player']
    enemy_attributes = game_state['enemy']
    environment = game_state['environment']

    if player_attributes['health'] < 0.5:
        decision = {'action': 'use_healing_item'}
    elif enemy_attributes['attack_type'] == 'powerful':
        decision = {'action': random.choice(['dodge', 'block'])}
    else:
        decision = {'action': 'standard_attack'}

    return decision
```

The method receives the current game state as an input. The game state is assumed to be a dictionary (in Python, although in C++ it was a map) containing information about the player, enemy, and environment. The method then extracts relevant information from the game state.

The decision-making process in the above example is based on some very simple conditions but I can assure you the real thing was a bit more complex. This should give you a general idea, however. For example, if the player’s health is below a certain threshold, the AI decides to use one of the player’s healing flasks. If the enemy’s attack type is deemed “powerful,” the AI randomly chooses between dodging or blocking. Otherwise, the AI decides to perform a “standard attack.”

Whatever decision is reached is returned as a dictionary (map), where the 'action' key represents the chosen action.

Again, the actual decision-making logic is much more complex and sophisticated. For example, the decision to “dodge or block” may be predicated upon various factors, such as whether the character has any stamina left. Or whether the shield the player is carrying is perceived to be strong enough to block the attack.
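To give a feel for that kind of refinement, here's a small sketch of a "dodge or block" choice that takes stamina and shield strength into account. The function name, its parameters, and the fallback of running away are all assumptions made for illustration. This is not the logic from the real harness.

```python
import random


def choose_defense(stamina, dodge_cost, shield_guard, attack_power, rng=random):
    """Pick a defensive action from whichever options are actually available."""
    # Dodging is only possible if there is enough stamina to pay for it.
    can_dodge = stamina >= dodge_cost
    # Blocking is only worthwhile if the shield can absorb the attack.
    can_block = shield_guard >= attack_power

    if can_dodge and can_block:
        return rng.choice(['dodge', 'block'])
    if can_dodge:
        return 'dodge'
    if can_block:
        return 'block'
    # Neither option is viable, so fall back to gaining distance.
    return 'run'


# Out of stamina but carrying a sturdy shield.
print(choose_defense(stamina=5, dodge_cost=10, shield_guard=50, attack_power=40))  # block
```

Passing in `rng` keeps the random branch controllable, which matters when you want a test run to be repeatable.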

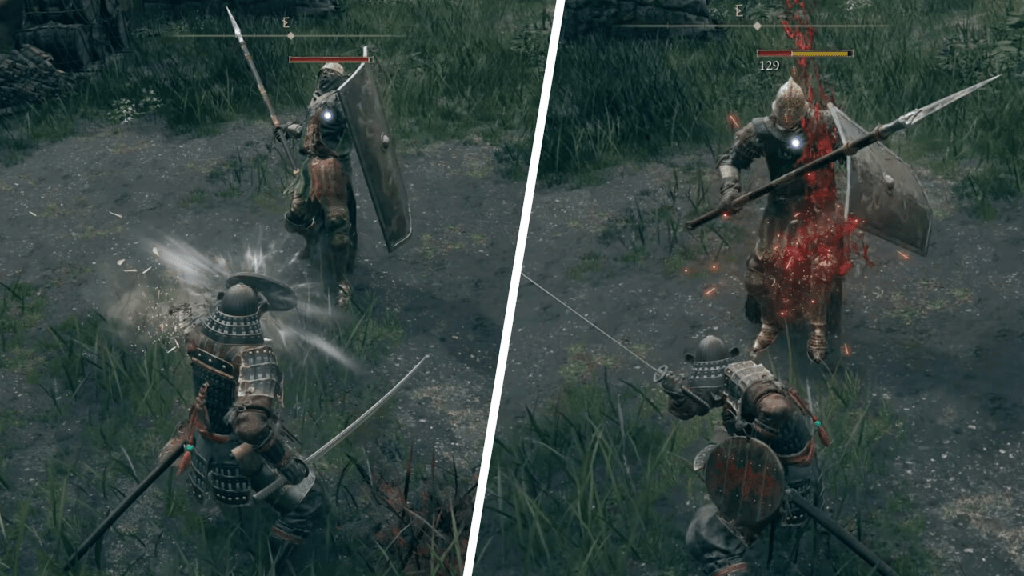

In the above visual the decision was made to perform what’s called a guard counter. The left part of the image shows the first part of that, which uses the shield to block and then perform an immediate standard attack. This is effective because, as the right part of the visual shows, this attack is essentially carried out immediately if the guard counter is timed appropriately.

This is helpful in this case where an enemy has their own quite large shield as well as a length-based weapon like the spear. The point here is that a dodge was deemed not effective because the spear would likely reach you even through your dodge. And the guard counter move resulted in a loss of poise for the enemy, so their own shield was not in place to block.

And even the dodge/block dilemma may be irrelevant if the immediate attack spewed a bunch of lava on the ground as here:

In that case, gaining distance by running is the better option than gaining distance by dodging. And blocking would do nothing for you except leave you standing in a pile of lava. I’ve never found that to be a very life-extending position to be in!

I said I would come back to that apply_ai_decision() method and here’s a rough idea of what that would look like.

```python
def apply_ai_decision(self, decision):
    action = decision['action']

    if action == 'use_healing_item':
        self.player_attributes['health'] += flask_value
    elif action == 'dodge':
        pass
    elif action == 'block':
        pass
    elif action == 'standard_attack':
        pass
    else:
        # took no action
        pass
```

Here the method takes an AI decision as input and extracts the action from it. Based on the extracted action from the AI decision, the method applies some logic to update the game state. The example shows a few hypothetical actions that could be taken. Notice that it’s also possible that no action at all was taken.

If you saw a lot of that “no action” situation happening as a tester, you would definitely want to find out why. Why is the AI taking no action at all? Even a human player will tend to do something, even if it’s just panic rolling around.
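One simple way to watch for this is to measure how often the recorded decisions fall through to the "no action" branch. The sketch below is hypothetical: the `KNOWN_ACTIONS` set mirrors the actions in the example above, and the sample decisions are invented.

```python
# Actions the example harness knows how to apply; anything else is "no action".
KNOWN_ACTIONS = {'use_healing_item', 'dodge', 'block', 'standard_attack'}


def no_action_rate(decisions):
    """Return the fraction of decisions that resulted in no recognized action."""
    if not decisions:
        return 0.0
    idle = sum(1 for d in decisions if d.get('action') not in KNOWN_ACTIONS)
    return idle / len(decisions)


# Invented sample: two of the five decisions do nothing.
sample = [
    {'action': 'dodge'},
    {'action': None},
    {'action': 'standard_attack'},
    {},
    {'action': 'block'},
]
print(no_action_rate(sample))  # 0.4
```

What counts as "a lot" is a judgment call, so the threshold for raising a flag is best left to the tester.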

Did This Actually Work?

Yes, the AI tester could train on Elden Ring and it could provide insights on the difficulty level of a given boss.

By training on a large dataset of boss combat experiences, the AI tester could learn patterns and correlations between player performance and boss difficulty. It could analyze many of those factors I mentioned — player attributes, enemy attributes, environmental conditions — as well as player outcomes (success or failure) to determine the difficulty — and thus presumed fairness — of a boss encounter.

But let’s dig into this a bit more.

Is the Boss Even Possible?

It was possible to discover that a certain boss encounter might require timings and reflexes that are extremely challenging for people to handle. Through extensive training and analysis of gameplay data, the AI tester was able to identify specific patterns, attack sequences, or mechanics that demanded exceptionally fast reaction times and precision.

That got into a quality: possibility. Meaning: was it possible to defeat the boss at all? Or did it require processing information and making decisions at speeds far beyond human capabilities?

Note that in these cases, it’s quite possible the AI would have been able to beat the boss while a human would not.

But what if it was just a case that the boss was exceedingly difficult but not impossible? Maybe what seemed “impossible” for a human was possible if you allowed for practice, skill improvement, or the development of specific strategies.

Help the AI Understand Humans

One thing it was possible to do was provide the AI tester with information about the limits of human reflexes and reaction times. The AI tester could then analyze the combat scenarios and compare its requirements to those limits.

Note that the training objective changed here a bit. It wasn’t just “defeat the boss.” It was “defeat the boss in a way that is feasible for humans.” And even then it was refined into “feasible for humans with no discernible motor skill or reaction timing problems.”

Suitably augmented, by evaluating the timings and mechanics of the boss encounters, the AI tester could determine if certain aspects of the game exceeded what was physically possible for human players. Let’s consider a few examples of what had to be encoded into the AI tester.

- The speed of nerve signal transmission and processing within the human nervous system affects reaction times. The time it takes for a sensory stimulus to be detected, transmitted to the brain, processed, and for the motor response to be initiated can introduce inherent delays.

- The speed at which humans perceive and recognize stimuli can vary. Different senses, such as visual, auditory, or tactile, have different processing speeds. For example, in simple reaction-time tasks, people tend to respond somewhat faster to auditory stimuli than to visual stimuli. (Consider that I said sounds in the combat can have a part to play in indicating what’s going on.)

- Human reaction times can be influenced by cognitive factors such as attention, decision-making, and motor planning. The time it takes to assess a situation, make a decision, and initiate the appropriate motor response can contribute to overall reaction times.

Obviously there’s lots of wiggle room here, right?

It’s challenging to provide precise values because all of this can vary depending on lots of factors. Now, that being said, it’s generally accepted that simple reaction times — response to a single stimulus — for visual stimuli can range from around 150 to 250 milliseconds in your average adult. Complex reaction times — response to a more complex or choice-based stimulus — can be longer, typically ranging from 250 to 500 milliseconds or more.

Even here we have to allow for the fact that skilled individuals can exhibit faster reaction times due to training, practice, or just plain old familiarity with specific tasks.

To incorporate information about human reflexes into the AI tester’s decision-making process for determining if boss encounters were potentially impossible based on human limitations, it was necessary to introduce a check within the ai.make_decision() method.

```python
class AI:
    def __init__(self):
        self.model = None

    def make_decision(self, game_state, human_reflexes):
        if self.model.is_boss_encounter(game_state):
            boss_timings = self.model.get_boss_timings(game_state)

            if boss_timings < human_reflexes:
                decision = 'Impossible'
            else:
                decision = self.model.choose_strategy(game_state)
        else:
            decision = self.model.choose_action(game_state)

        return decision
```

A parameter called human_reflexes was introduced to the method, which represented information about the limits of human reflexes. Then the AI tester could compare the timing or speed requirements of the boss encounter with the known human reflex limitations. The AI tester could retrieve the relevant boss timings using the get_boss_timings() method from the model.

If the boss timings fell below the limits set by the human reflexes data, the AI tester would determine that the boss encounter is potentially impossible for human players. Otherwise, it would proceed with the regular decision-making process based on the AI model’s strategies.
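Here's a runnable sketch of that comparison using the reaction-time ranges mentioned earlier (150 to 250 milliseconds for simple reactions, 250 to 500 for choice-based ones). The three-way classification and the `classify_window` name are my own assumptions for illustration. The real check, as shown above, was a single comparison.

```python
# (fastest plausible, typical upper bound) in milliseconds, from the ranges above.
SIMPLE_REACTION_MS = (150.0, 250.0)
CHOICE_REACTION_MS = (250.0, 500.0)


def classify_window(window_ms, floor_ms, typical_ms):
    """Compare the time a boss gives the player against human reaction limits."""
    if window_ms < floor_ms:
        # Faster than even a quick human can plausibly respond.
        return 'Impossible'
    if window_ms < typical_ms:
        # Within human range, but only for fast or well-practiced players.
        return 'Tight'
    return 'Feasible'


# Hypothetical reaction windows for three attacks.
print(classify_window(100.0, *SIMPLE_REACTION_MS))  # Impossible
print(classify_window(200.0, *SIMPLE_REACTION_MS))  # Tight
print(classify_window(300.0, *CHOICE_REACTION_MS))  # Tight
```

The middle category is useful because "exceedingly difficult but not impossible" is exactly the case a hard yes-or-no check would hide.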

Notice here how we have aspects of controllability and observability. And repeated trainings would help us with the predictability aspect of all of this. For example, if a boss was deemed as “potentially impossible” and we tweaked a few settings, could we predict if those tweaks would lead us back into “possible for humans” territory?

Value Judgments, Fairness and Quality

So let’s consider some examples of boss fights. In each of the videos below, I’ve kept the sound off so that you can just focus on what’s happening. I’ll call out a few things at specific timestamps.

Margit, The Fell Omen

Margit is a boss that players will most likely encounter early on in the game. He’s meant to be a good start to showcasing what the boss encounters will be like.

When applying the AI tester to Margit, it found some problems but did not relegate him to “potentially impossible.” However, what problems did the AI tester find? Applying an explainability process to the outputs, it essentially reported one particular finding:

Margit has an abundance of delayed attacks with very long windups leading to the instant appearance of a hitbox.

You can actually see that in the first 15 seconds of the above video. When I refer to “instant appearance of a hitbox,” I mean the chance where you can hit him or punish him for one of his moves. A “hitbox” is just the border or outline of a character or object that will invoke a collision of some kind, such as your sword with his body.

Why did the AI tester call out “delayed attacks” and “instant appearance of a hitbox”?

These delayed attacks are moves that you can only dodge by learning their strict timing. That’s important because this is as opposed to reacting naturally to an incoming attack. Consider this example of a natural reaction to an attack:

That’s to another boss named Rennala where the player’s own hitbox was missed due to natural reaction — jumping out of the way of a phantasmal beam of death!

Delayed attacks have a lot of advantages — from the boss’s perspective. As one example, it punishes players who “panic roll.” It also diverts the player away from purely reactive play. That’s all good!

The problem is when the delayed attacks are relied upon too much. And that is what the AI tester was cueing into, although that’s certainly not what it said. What it simply said was that it noticed a lot of attack patterns that suggested delayed attacks and the appearance of a hitbox as being an issue.

But what was the actual issue here? Well, it turns out that the AI tester found something interesting where you can use the delayed attacks to your advantage. Consider this example with a Margit fight:

Even just watching that, I bet you can see it looks … odd, right? The above situation can happen … yet only under specific conditions. Margit takes a swing and when he sees you roll close to him, he pulls out his dagger and then, at three seconds in, the dagger disappears. He gets ready to swing his club down on you by holding it over his head. This means he’s going to perform one of his delayed slam attacks, but only if you’re in front of him. So circling to his left (see 4 to 5 seconds in) makes him try to find you and he apparently holds the move until he sees you in front of him (see 7 seconds in).
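To make that "hold until you're in front" behavior concrete, here is a minimal sketch of it as a finite state machine. To be clear, this is not the game's actual code: the state names, the 60-degree front cone, and the `next_state` and `run` functions are all assumptions I'm making up for illustration.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    WINDUP = auto()  # club raised overhead
    HOLD = auto()    # still raised, waiting for the player to be in front
    SLAM = auto()


def next_state(state: State, player_angle_deg: float,
               front_cone_deg: float = 60.0) -> State:
    """Advance the boss one tick.

    player_angle_deg is the player's bearing relative to the boss's facing
    (0 means directly in front). The cone width is a hypothetical value.
    """
    in_front = abs(player_angle_deg) <= front_cone_deg / 2
    if state is State.IDLE:
        return State.WINDUP
    if state in (State.WINDUP, State.HOLD):
        # The slam only fires once the player is inside the front cone;
        # otherwise the boss keeps holding the raised club.
        return State.SLAM if in_front else State.HOLD
    return State.IDLE  # after SLAM, go back to idle


def run(angles):
    """Feed a sequence of player bearings through the machine."""
    state = State.IDLE
    trace = []
    for angle in angles:
        state = next_state(state, angle)
        trace.append(state.name)
    return trace
```

Calling `run([0, 90, 120, 150, 10])` models a player who circles away and then drifts back in front: the boss winds up, holds for three ticks, and only then slams. A transition that can be stalled indefinitely like this is exactly the sort of thing that looks odd to a human and exploitable to a tester.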

Arguably, this is actually a bug in the combat mechanics that the AI tester found.

What this does highlight is that, with Margit, positioning is often just as important for avoiding his attacks as rolling. Sticking close to him for most of the fight generally isn’t safe. Look at about 20 seconds in to the combat video that starts this section and watch up to 30 seconds. He wallops pretty good there! In fact, he kills us.

This same situation happens around 50 seconds in. He almost kills us with the same attacks again, although this time we barely survive and manage to heal up a bit.

From 1:24 to 1:27, notice that windup! And then the immediate attack with his blade at 1:29. This is a situation that the AI tester was cueing into as potentially problematic even though it was able to find a strategy above that seemed to exploit this delay.

The problem you might have trouble seeing here, but the AI tester did see, is that some of Margit’s attacks hit faster than you can roll. And you have to make sure you’re a safe enough distance away from him to roll before those attacks get used. His three-hit combo is a good example. Right at about 2:05 in the video, you can see some instrumented numbers that show the roll animation with that combo. If the number turns red, you took a hit.

It’s very hard to roll through that combo without getting hit at least once. The timing is either extremely tight or, worse, it might be downright impossible. The AI tester flagged that part as potentially impossible. What’s happening here is that the second or third hit always catches the tail end of your rolling animation before you have a chance to act again, either to roll or block or do anything.
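One way to think about what "potentially impossible" means here is as a search problem: is there any sequence of rolls that puts every hit of the combo inside an invincibility window? The sketch below is my own simplified model, not the AI tester's method. The frame numbers are hypothetical and the function name `can_dodge_all` is made up for illustration.

```python
def can_dodge_all(hit_frames, iframes, roll_total, free_at=0):
    """Return True if some sequence of rolls dodges every hit.

    hit_frames: sorted frame numbers at which each hit lands.
    iframes:    a roll started at frame s is invincible for s .. s+iframes-1.
    roll_total: the player cannot act again until frame s+roll_total.
    free_at:    earliest frame at which the player can start a roll.
    All values are illustrative, not measured from the game.
    """
    if not hit_frames:
        return True
    first = hit_frames[0]
    # Try every roll start that would cover the first remaining hit.
    for start in range(max(free_at, first - iframes + 1), first + 1):
        covered_until = start + iframes  # exclusive
        remaining = [h for h in hit_frames if h >= covered_until]
        # Hits landing during the roll's recovery fail in the recursive
        # call, because no new roll can start before start + roll_total.
        if can_dodge_all(remaining, iframes, roll_total, start + roll_total):
            return True
    return False
```

With an assumed 13 iframes and a 25-frame roll, a combo landing at frames 10, 30 and 45 comes back `False`: whichever way you time the second roll, the third hit lands in its recovery. Spread the same hits out to frames 10, 40 and 70 and the result is `True`. That is the "tail end of your rolling animation" problem expressed as arithmetic.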

Now, from a challenge standpoint, this would be okay … if the attack telegraph gave you time to position yourself properly before Margit’s hitbox became available. But the problem is that the first sword swing of this combo comes out so quickly that unless you’re already a safe distance away, there’s not much you can do. Look at the second case of this at around 2:15 in the video.

It’s one of many moves Margit makes that just doesn’t give you adequate time to adjust your positioning. You can see it yet again at around 2:28 and at 2:33. So it’s very consistent. And because it’s consistent the AI tester was able to flag this through a lot of training data.

But here’s what the AI tester couldn’t tell you. What all of this means is you aren’t rewarded for taking initiative in the fight and going on the offensive. Unless you use what might be considered an exploit in Margit’s attack pattern, as shown above. This isn’t helped by the fact that lots of Margit’s recovery windows aren’t very clear. A recovery window refers to a specific period of time during or after an action where a character — either you or the enemy — is vulnerable and unable to perform any actions.

Also check out around 2:45 where you’ll see a split screen dynamic. At the end of many combos, Margit makes it look like he’s done attacking but as soon as you get close he’ll pull out his knife and start another chain of attacks. That’s deceptive from the mechanic standpoint.

Now, you could argue that it’s realistic from an enemy standpoint, right? After all, a “real” enemy might not fight fair! But, from a gameplay perspective, it narrows your opportunity to engage with Margit and it funnels players into being a lot more defensive.

That last point is not something the AI tester could discern. That’s a human value judgment. What the AI tester could do is provide some context for understanding the rationale of that judgment.

What Did Margit Teach Us?

I spent a lot of time on Margit just to give you a feel for what the analysis part of all this looks like. And here’s where we get into what the AI tester could not analyze but a human could and thus leads us to some interpretability of what we’re seeing represented. This part was crucial for understanding a potential quality issue with Margit. What our analysis means is that your options for approaching Margit never seem to change with your skill level. You still have to engage with Margit the same way you did in your first few attempts. This means playing very passively and only waiting for the handful of openings that you know are safe. And this means all of your decisions are basically the same. You just sort of go through the same chart of actions while making fewer mistakes in timing.

So what can we learn from this from a quality perspective?

The AI tester gave us some numbers but what we can determine is that Margit’s recovery window is ambiguous. The AI tester didn’t say that but we can ferret it out from the data. A quality suggestion is that his animation should better communicate when he is and isn’t stun attacking us.

The other quality challenge goes back to that positioning. It’s considered bad game design to have a boss only follow up with attacks, like his knife attack, as soon as you get close. Why? Because it feels like you’re not being allowed to actually play the game. You’re essentially being goaded into making a mistake and you really can’t do anything to counter the move he makes.

So another quality aspect here would be those constant knife moves of his need to be scaled back a bit. Either give them a bit of a longer windup or telegraph better the conditions under which they might occur.

Godrick the Grafted

This is likely the second main boss you’ll encounter if you follow the game’s progression. Godrick has a combination of in-your-face melee attacks and mobility patterns, such as leaps and jumps. Thus he’s very similar to Margit in a lot of ways. Where Godrick differs is that he has some area-of-effect attacks as well.

Godrick does rely on delayed attacks quite a bit. This is particularly true of one of his basic axe swings, which you can see at around the 10 second mark in the video.

While the AI tester could determine that, what it couldn’t suggest is that a common problem with these moves is they’ll be delayed to a degree where it just looks and feels unnatural. In fact, notice how this sort of matches Margit’s “odd delay” that we looked at, but perhaps not as egregious.

Notice what I’m saying here. I’m more talking about a visual aspect of the fight and how it “looks.” Okay, yes, you’re fighting some weird larger-than-human demigod here that grafts body parts onto himself. Nevertheless as human players, we do apply a sort of real-world logic to these combat encounters. Game designers know this. And this is needed because this is how humans determine their actions.

When you see someone (or something) like Godrick raise his weapon, your instinct tends to be “I should do something!” but you also realize that when the weapon is at its apex, the next likely thing to happen is for it to come crashing down.

Good game design taps into those human instincts and that means the dynamics of the game — as distinct from the mechanics — rely on your human intuition. This intuition doesn’t have to rely solely on the rules of the game. What this does is get you into a state of what’s called “flow” and that translates to a feeling of naturalness, which is a quality.

Delayed attacks, when done poorly or done way too much, essentially force the player to go against that intuition. By itself, this doesn’t have to be a bad thing. It can be its own particular sort of challenge. In fact, to a certain extent, if you want a high difficulty to your game, you have to do this to some degree.

The goal is balance. You want the mechanics to punish bad habits from the player, not good habits. Margit, for example, punished panic rolling and that’s a good thing to get players out of the habit of. When you have balance, you can force the player to consider different responses and you lead them toward better rather than worse responses.

The problem is when this mechanic is overdone or used way too much, particularly in one combat scenario. What you end up doing is constantly punishing the player for acting on instinct. As a player, when a delayed attack comes up with Godrick, a part of your mind goes “Oh, okay. Here this creature is not acting the way a normal creature probably would, so I have to wait this extra bit because that’s how the game does it.” You’ve been taken out of the dynamics and forced to rely solely on the mechanics.

The above is not something the AI tester could discern. It is something you, as a human tester, could understand when the details of the outcome of the model are made explainable.

The AI tester thus judged that Godrick had a lot of balance in terms of how he presented his mechanics to the player. In particular, Godrick’s downtime between attacks was deemed quite reasonable. This was framed around various attack patterns. Godrick also has a little mini-earthquake he generates. You can see that at about 34 seconds in the video. At around 50 seconds in you can also see Godrick’s more powerful wide-arc swing of his axe. His jump attack is similar, which you can see around 1:07 in the video.

Much like Margit has a three-hit combo, Godrick has a five-hit combo. Look at around the 1:12 timestamp. That looks and feels pretty natural; he has momentum from his attacks and so he keeps trying to hit you with opposing swings. There’s not an extended delay to each swing. So it’s interesting to learn how to move through them but still difficult enough to avoid, thus requiring some skill.

What the AI tester was able to determine is the almost-guaranteed hit that we saw with Margit is not the case with Godrick.

Incidentally, contrast that five-hit combo animation with his delayed windup starting right at 1:22. It’s a good way to see the difference there in terms of the instinct for how to respond to attacks.

Godrick has what’s called a phase 2 and, as I mentioned earlier, this is where the boss somehow augments themselves and gets a bit more powerful in some way. This is usually triggered by reducing their health to some amount. This phase 2 transformation takes place at around 2:30 in the video.

In general, the AI tester found no major issues with Godrick’s attack patterns and, as a human tester looking at the game, we can see that’s because Godrick has very clear attack telegraphs. Margit didn’t always make it clear when his attacks were finished. Godrick is pretty much the polar opposite of that and his phase 2 makes that most clear. There’s adequate time to identify his attacks as they’re about to come out.

It’s also always clear when it’s safe for you to retaliate. Thus the fight feels more natural and has more flow from a human perspective. That’s not something the AI tester could discern but it’s something we could better demonstrate from the findings of the AI tester, which matched human experiences.

So, from a quality perspective, the AI tester helped us gather numbers but here’s how a human can interpret them: with Godrick, you don’t get punished for correctly identifying his behavior pattern. The dragon attacks he has in his phase 2 are a good example. You have to learn how to read the moves and actions of his new arm and figure out how to dodge them.

I mentioned earlier that there’s one exception to Godrick’s good attack patterns and this exception was found by the AI tester although a human tester should certainly find this as well. This has to do with his whirlwind attack.

This attack has an extremely short startup and he can apparently use it whenever he wants. It’s kind of like Margit’s three-hit combo. Look right about from 3:20 to 3:23 and you’ll see it in action. And again at around 3:40.

With Godrick, if you walk in to punish one of his moves, and thus exploit his hitbox, there’s a chance he’ll do the whirlwind before you have a chance to reposition safely. It comes out way too fast to reasonably react to with human reaction times. Thus positioning is not a reasonable strategy here because you aren’t left enough time to actually do that. Again, similar to the Margit situation.
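The "too fast to react to" claim boils down to comparing an attack's startup time against a human reaction time. Here is a rough sketch of that comparison. The 250 ms reaction time is a commonly quoted ballpark that I'm assuming, the frame counts in the usage note are hypothetical, and `is_reactable` is a name I made up.

```python
def is_reactable(startup_frames: int, fps: int = 30,
                 reaction_ms: float = 250.0) -> bool:
    """Return True if the attack's startup is at least as long as the
    assumed human reaction time.

    startup_frames: frames between the first visible tell and the hit.
    fps:            frame rate the frame count was measured at.
    reaction_ms:    assumed reaction time; a ballpark, not a measurement.
    """
    startup_ms = startup_frames / fps * 1000
    return startup_ms >= reaction_ms
```

As an example, a hypothetical 6-frame startup at 30 frames per second is 200 ms, so `is_reactable(6)` is `False`, while a 15-frame startup is 500 ms and `is_reactable(15)` is `True`. A slower startup, as suggested for the whirlwind, is just a way of moving an attack from the first bucket into the second.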

The AI tester was able to find that correlation between the two bosses. It didn’t make them “potentially impossible” as a whole but it did suggest some potential “fairness” issues.

From a quality design perspective, a way to handle this is to simply make the whirlwind have a slower startup. And, as with Margit, perhaps make a few of Godrick’s attacks less delayed.

Lichdragon Fortisaxx

Here’s an example where the AI tester was actually not all that helpful. And if you watch a bit of the video, see if you can intuit why before you read on.

What do you think? What stands out to you about that fight, particularly compared to what you see in the Margit and Godrick situations?

A problem here is the overwhelming visuals, right? They serve the aesthetic very well but they do harm to the mechanics. “Too visually busy” is a common quality complaint about this boss encounter.

You can have a hard time figuring out what attack you’re actually being hit with due to all the visuals. So it’s hard to learn how to counter him. Likewise, those same visuals can make it hard to determine if you’re hitting him, particularly when he gets near the walls. Fortisaxx kind of blends into the area, with the dark trees and his dark skin.

So before you can even evaluate your response to an action, you have to try and figure out what the action even was. That wasn’t a problem with Margit or Godrick given the battle arena they were in. Further, with all the lightning over his body, it can make it very hard to see where Fortisaxx’s hitboxes are coming out during his attacks.

None of this is anything that the AI tester could tell you because what I’m talking about here entirely relies on the human visual system and the graphical rendering of the environment and the boss.

From a quality perspective, here’s an example where the special effects could likely be toned down. But, again, this is not something that the AI tester could discern. Remember: the visual component wasn’t part of the training.

One thing the AI tester did notice, however, was that if you’re underneath Fortisaxx’s body for any length of time, external lightning bolts start to hit you. You have to be ready to dodge when that happens.

It’s a cool idea and it does keep players from just trying to “hide” beneath him to avoid his other attacks. But, from a game design perspective, it’s hard to tune this lightning properly. Why? Well, the game design idea was that they wanted the lightning hits to land only at moments when the player was not in the middle of dodging a regular attack. But since every move has different timing, there’s no guarantee that this timing will happen.

What this means is that you’re locked into a very risky position but you didn’t actually make any mistakes. Which, again, means your skill doesn’t really matter in this context. No matter how good you get, you are still at the whim of a timer that you can’t see.

The trick here is that it also impacts your ability to attack. Once you attack, you lock yourself into an attack animation which you can’t stop. What this timer does is punish aggression, much as with Margit and, to a lesser extent, Godrick, where being close to the boss was bad.

Thus no matter how good you get at the fight, Margit will still pull out his knife when you try punishing some attacks. Godrick will still pull out his whirlwind. Here, Fortisaxx’s lightning always has a chance to disrupt your attack opportunities.

These are aspects the AI tester found but it required a human to analyze the data and understand why it mattered and what it said for “fairness.”

Quality Notes

I want to look at two more boss encounters but let’s stop a second here and assess.

With the above three bosses, I showed you a common theme that hinges on limitations of what you can do against the boss. There’s no way to actually level your skill and get better in those particular situations. These are not obstacles that can be countered with some improvements to your playstyle based on what you learned. No amount of training will get you better.

Collectively this demotivates you to get better (at least at a certain point) and removes engagement with the boss. Thus replayability — a quality, in this context — takes a hit. Bosses become a slog you have to get through rather than an exciting challenge that you want to see if you’ve gotten better at.

So when we explain the outcomes of the AI tester, what we find is a potential issue: fighting bosses feels the same every time because there’s not a lot you can do to give yourself a true advantage in some of those conditions we just talked about.

More Boss Training

Let’s consider two more bosses.

Fire Giant

I think if you just watch the video before reading on you can probably intuit some of what a human tester would notice about this boss that an AI tester would not. As a tester, watch the video and just see what stands out about this particular boss.

Before we get to that possibly obvious point, one thing that’s a problem in general in games like these is enemies that have way too much health and provide way too much damage. I’ll come back to that in a moment.

In the case of this Fire Giant, you also have what’s called significant “empty time.” This is where you just have to close the distance to the boss and usually dodge attacks while running around. It’s thus not really combat; it’s just trying to get to the combat. You can see that at the start of the video.

From an AI testing standpoint, this is really challenging to train on and test because the AI has to learn to get close to the boss in the first place. Then it has to learn to avoid attacks while it’s getting close to the boss.

What the AI tester found was that these giants have very wide reaching attacks that don’t seem dodgeable, even on your horse. How was this conclusion reached? Mainly because there seemed to be a lack of “iframes” with these giant characters, which the AI tester did pick up on. An iframe means “invincibility frame” and it allows players to momentarily turn invincible during rolls and, in particular, when mounting or dismounting the horse.

But let’s go back to that obvious thing I mentioned. It is obvious, right?

As you can see, the Fire Giant is a BIG boss.

This means the camera causes problems. The Fire Giant’s attacks tend to come at you “off screen,” as it were. As you close on the boss to attack him, you can clearly see his foot but his attacks also come from his hands. He has a face in his stomach that can also attack. By contrast, think of Fortisaxx. He was big but you could at least see his body twisting or starting to move.

This can punish certain playstyles or builds. A melee build has to get close to the boss to exploit the hitboxes. A ranged class, however, has less of an issue in this regard. All this said, the AI tester wasn’t considering his visual size at all. Remember it was just going on the combat data. And the combat data says nothing about the boss’s size. So here’s an example, like with Fortisaxx’s visuals, where the AI tester’s ability to make certain determinations is compromised.

Now let’s add to this situation what I mentioned earlier: lots of health. The Fire Giant is a guy you just have to chip away at. And it takes a long time. And all that progress can be undone by a simple timing mistake where, in just one or two hits, he kills you. So that brings in the extreme damage aspect.

Either one of those on their own can be okay. But together, it’s a quality problem.

An enemy with such huge health is a bit of a mental stamina challenge and that can be a positive variation on combat. As a player, you have to settle in for a longer fight. But that’s undone when the enemy can do such egregious damage to you. The longer a fight lasts, the more likely someone is to make mistakes. But the boss, of course, never does.

So while the AI tester did help provide the numbers for the above findings, what it can’t really do is suggest what to do about it.

A better approach here, from a quality design perspective, would be that the Fire Giant perhaps only has devastating attacks if the player accumulates enough small mistakes over time. This is as opposed to the boss effectively being able to one-shot you due to one mistake.

So allowing the player to make mistakes while still providing a long, drawn out fight is a possible way to introduce “fairness” into this particular encounter.

You’ll also note from the video that the Fire Giant can summon fireballs and these things can track you for a very long time before exploding. Your only option is really to just kite them along, keep ahead of them, and let them explode out of range. Here, an example of a quality suggestion might be allowing the player to lead the fireballs back to the boss, essentially turning his own weapon against him.

Further, the fireballs do leave fire on the ground as well and if you run into that fire, you get stunlocked.

These kinds of things are disruptive to the pacing of the fight. Here we see a similar situation to Fortisaxx. Fortisaxx had his lightning that you had no choice but to flee from; here when these fireballs come out, you are similarly limited. And, again, this was like Godrick’s whirlwind and Margit’s knife.

The AI tester was able to draw these correlations from the encounters but it did require a human analyzing the data to understand the commonality from a player perspective.

Malenia, the Blade of Miquella

And here we come to perhaps the most notorious boss of the game.

Malenia is an overly aggressive boss that can unleash some pretty long combo strings of attacks. This is before even giving you a chance to respond. She is, however, very vulnerable to hit stun. Meaning you hit her hard enough, and she gets stunned out of her current actions.

This is a way to counter her behavior directly. The idea here is favoring aggression: the player has to be allowed to knock her out of her combos. And that can happen. It’s being rewarded for taking the initiative. But with Malenia, it’s not consistently viable.

That was something the AI tester determined. But how?

Well, some of Malenia’s moves have what’s called “hyper armor.” There’s a chance — like Godrick’s whirlwind, Margit’s knife, Fortisaxx’s lightning, Fire Giant’s fireballs — that she can initiate this armor during the attack engagement window.

Thus she can only be put into hit stun sometimes. It’s not reliable and you can’t really predict when those times are. This is partly because her attacks come out so fast but also because it’s entirely random. So if you’re mid animation in your attack and she gets hyper armor, there’s not enough time for you to exit the combat animation and dodge or run.

She does have a way to back herself off by leaping backward. This allows you, as the player, to not simply spam the hit stun technique. She can also then use gap closers to prevent the leap back from having too much empty time. You can see a lot of this happening in the first minute of the video, where she can jump far back out of range and then close the gap to the player very rapidly.

But her dance moves and hyper armor potentially negate those great aspects of the fight. You always have to hesitate before you fight her because there’s this chance she might just armor through whatever it is you’re trying to do.

She also has this thing called “waterfowl dance.” If you’re trying to hit stun her when she does her dance, dodging was initially considered by the AI tester to be potentially impossible. You can see an example of this at 1:06 in the video. You can see a particularly devastating version of this at around 1:45. In fact, she kills us. I’ll come back to this dance in a moment.

There’s also a life steal mechanic. She can essentially get her health back. Unless you dodge. And it’s a cool mechanic because if you bring summons with you — like spirit ashes — she can steal their health. See around 3:15 in the video where a spirit ash summon is used. And those computer controlled ashes can never dodge as well as a human. So what you bring in to help you in the fight, can actually turn against you. Look at around 3:31 to 3:36 and you’ll see her health bar go back up as she steals life.

That’s a cool mechanic!

And in those cases, it would make most sense to try and attack her relentlessly to prevent her life stealing. But that’s not something you can even think about with those hyper armor issues.

There’s even another problem: she heals on block hits. What this means is if you use a shield and block her attacks, she heals a bit from that. That discourages you being defensive. But, as we just saw, being aggressive punishes you for reasons that improving your skill won’t help because that hyper armor is not something you can counter or defend against.

It’s crucial to see that point here with Malenia because it was a common theme of literally every boss we looked at here. They have some mechanic that you can’t determine when it will occur (it’s not telegraphed) and you can’t counter (when it happens, it happens).

Let’s go back to that waterfowl dance because I think it shows something interesting. There are strategies that players have suggested for her waterfowl dance. You can see those listed in the video at 5:40.

The idea here is that “bloodhound step” will avoid the attack more easily. This is a skill that allows the user to become temporarily invisible while dodging at high speed. A single use of this ability provides sixteen invulnerability frames at 30 frames-per-second. If you use the ability multiple times in a row, uses after the first provide fourteen iframes. This can drop to as low as five iframes.
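Frame counts are easier to reason about once you turn them into time. Here's a tiny sketch that does the conversion for the numbers above. The function name `iframe_window_ms` is my own; the frame counts and the 30 frames-per-second rate are the ones just quoted.

```python
def iframe_window_ms(iframes: int, fps: int = 30) -> float:
    """Convert a count of invulnerability frames into milliseconds."""
    return iframes / fps * 1000


# Frame counts quoted above for bloodhound step at 30 frames-per-second.
for label, frames in [("first use", 16), ("repeated use", 14), ("lowest", 5)]:
    print(f"{label}: {frames} iframes = {iframe_window_ms(frames):.0f} ms")
```

That works out to roughly 533 ms of invulnerability on the first use, 467 ms on repeated uses, and only about 167 ms at the low end. So spamming the ability shrinks your safety window to less than a third of what the first use gives you.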

A challenge here is that this means one particular option is encouraged above all others. This would mean this fight, and only this fight, demands a very specific playstyle. Having players radically adapt their build and playstyle is considered a bad game design practice.

What about freezing pots? These are a consumable item in the game. This category of items is utilized for their immediate or temporary effects. A freezing pot can be thrown at enemies to inflict frostbite damage. The idea is you throw this at Malenia right as she’s about to do her waterfowl dance. A challenge here is that Malenia can gain a status resistance the more you proc her. “Proc” here means when she feels the effect of the status element (in this case, frost) given how much buildup of it there is.

Let’s consider this in another context. Say you have a sword that does 40 bleed damage. You’re fighting something with 400 bleed resistance. This means you would have to hit them ten times and then the bleed would proc. A challenge here is that status effects dissipate over time. So depending on how fast you hit the enemy, it could take 11 to 15 hits for a single proc.

The freezing pot causes 380 frost buildup and it always inflicts the same amount of frost buildup regardless of your attributes. Malenia’s frost resistance is 306. So one hit of a freezing pot will cause her to proc.
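The buildup arithmetic can be sketched in a few lines. This is a simplified model I'm assuming for illustration: buildup accumulates per hit, a fixed amount dissipates between hits, and a proc happens once the total reaches the resistance. The `decay_per_hit` parameter and the function name `hits_to_proc` are hypothetical; the game's real decay is time-based rather than per-hit.

```python
def hits_to_proc(buildup_per_hit: float, resistance: float,
                 decay_per_hit: float = 0.0, max_hits: int = 1000):
    """Count hits until accumulated buildup reaches the resistance.

    decay_per_hit is the buildup assumed to dissipate between two
    consecutive hits. Returns None if the status never procs.
    """
    total = 0.0
    for hit in range(1, max_hits + 1):
        total += buildup_per_hit
        if total >= resistance:
            return hit
        # Some buildup dissipates before the next hit lands.
        total = max(0.0, total - decay_per_hit)
    return None
```

With no decay, `hits_to_proc(40, 400)` gives the ten hits from the sword example. Lose 5 buildup between hits and it becomes 12, which is how you end up in that 11 to 15 range. And `hits_to_proc(380, 306)` is 1, which is the freezing pot against Malenia.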

Finally, the whole “get good” thing is focusing on the margin of error, particularly for dodge. The AI tester deemed that this window was just too small, particularly when you are up close with her. But let’s break this down a little more.

If you watch that closely, there are actually two hitboxes. Malenia’s sword has its own hitbox and that’s where the majority of her damage comes from. The second hitbox is actually the globe — which represents the spins of her sword as part of wind damage — and that’s what hits players with what’s called chip damage. Chip damage refers to the small amount of damage delivered to a player who is blocking an opponent’s special move.

What the AI tester found is that you could reliably block the timing with the sword (at the risk of giving her more health), but this wind damage effect would punish rolling to the point that any rolling strategy was “potentially impossible.” The problem here isn’t the hitbox, though; rather, it’s the amount of distance Malenia can cover. Even if you dodge through her on the first flurry of attacks she will immediately track a 180 degree turn for the second flurry.

The AI tester was able to cue into an interesting fact, though. Malenia’s second flurry attack range will be significantly shorter and this is because she doesn’t entirely turn a whole 180 degrees — if, that is, you manage to roll towards her back. This is a little bit like what was found with Margit where you could sort of exploit that he wanted you in front of him before he slammed his club. So the most consistent strategy the AI tester found for Malenia is to avoid the first flurry by running away as soon as she winds up, rolling towards the second flurry, then do a 180 for the third flurry while also rolling towards the attack. The data seemed to suggest that rolling towards her attacks meant she would move right past you both times.

So what the AI tester was cueing into is that Malenia’s ability to cover a good amount of distance could actually be used against her.

The first part of the above video, up to 15 seconds, shows a waterfowl dance counter-strategy. Then starting at 15 seconds in, you can see the video slows down to show you the adopted strategy. What this breaks down to is:

- The moment you see her raise into the air, gain some distance from her. (See 22 second mark.)

- This allows you to dodge the first flurry of the attack. (See 26 to 29 mark.)

- As soon as her second flurry starts, roll towards her. (See 30 to 32 mark.)

- Roll towards her again to dodge the third flurry. (See 33 to 36 mark.)

The dodge timing of this, however, is very precise. But the AI tester did determine this as fair. The interesting thing here is that this strategy is not really intuitive at all. Which goes back to that human instinct issue and how much and to what extent to reinforce those as part of gameplay dynamics.

So while Malenia gets a lot of hate online for being “unfair,” what the AI tester is suggesting is that she is fair but you do have to use one of her abilities — covering great distance — against her by doing what might seem counter-intuitive: roll towards her as she attacks you because her speed covering that distance means she can’t land a hit on you.

One other thing, however, that the AI tester found that it did render “unfair” was that Malenia’s waterfowl dance move has no recovery. What that means is you can study this move for as long as you want and eventually learn to dodge it perfectly — but you still get no chance to punish. There is no instant appearance of a hitbox and thus even your perfect dodge does not set you up to deliver an attack.

Results?

So what was the conclusion? Was the game “fair” or not, at least in terms of boss encounters?

It’s complicated! Overall, the AI tester judged most of the bosses “fair” in that they were not “potentially impossible.” But the AI tester did find aspects of their combat that were deemed to be “potentially impossible.”

What did that mean, though?

That actually led to an interesting bias that was inserted into the data. It turned out that enough of the training data was from extremely good players — the developers — who often took no damage at all during the fights. In other cases, it became known that some of the training data included combat scenarios where “developer cheats” were turned on that made the player take no damage.

What this meant is that the AI tester was learning based on the idea that “fairness” was predicated upon being able to fight a boss and take no damage. If it was impossible to take no damage, then some aspect of the boss was deemed potentially impossible and thus unfair. In other words, we had a bias in the data and that had to be accounted for.
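A simple audit of the training data can surface this kind of bias before it shapes what the model learns. The sketch below is only an illustration: the `Run` record, its field names, and the sample values are all hypothetical, not the actual data format that was used.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """One recorded boss attempt. All fields are hypothetical."""
    boss: str
    damage_taken: int
    cheats_enabled: bool


def zero_damage_share(runs):
    """Fraction of runs in which the player took no damage at all."""
    if not runs:
        return 0.0
    return sum(r.damage_taken == 0 for r in runs) / len(runs)


def without_cheats(runs):
    """Drop runs recorded with developer cheats turned on."""
    return [r for r in runs if not r.cheats_enabled]


# Made-up sample data for illustration only.
sample = [
    Run("Margit", 0, True),
    Run("Margit", 0, False),
    Run("Margit", 350, False),
    Run("Margit", 0, True),
]
```

In this made-up sample, `zero_damage_share(sample)` is 0.75, and it drops to 0.5 once the cheat-enabled runs are filtered out with `without_cheats`. If most of what the model sees is flawless play, "taking no damage" quietly becomes its definition of a fair fight.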

As a bit of an aside, a guy named Joseph Anderson became a bit famous for his Shattered Masterpiece video where he really critiques the boss design in the game. This was countered in What Joseph Anderson Got Wrong About Elden Ring and In Defense of Elden Ring Boss Design.

I bring that up because what it shows is a lot of subjectivity around the quality of the boss design in terms of their mechanics and the perceived “fairness” quality.

Generalize All of This

As a final note, while I gave you a lot of detail for humans and AI working together to test an AI in one particular context, you can certainly apply the broad strokes of the thinking and analysis here to AI with any context. AI giving an analysis that requires interpretability and explainability is not limited to games. It applies to any AI-enabled product where you have an AI that is operating behind the scenes and providing something to users.

You also have to be very careful on what data that AI-enabled product is trained on. The AI you are testing should be looked at for fairness (it’s not negatively biased), accountability (for its decisions), security (from malevolent hacking or co-opting) and transparency (on its internal operations). These are both internal and external qualities when you have a product that is AI-enabled to some degree. This is ultimately what allows for trustability, which is going to continue to be a key quality attribute in an increasingly AI-enabled world.

These were two long posts that did quite the deep dive but I hope this was a fun and interesting example to showcase some of these ideas!