I’ve posted quite a bit on game testing here, from being exploratory with Star Wars: The Old Republic, to bumping the lamp with Grand Theft Auto V, to ludonarrative in Elden Ring. I’ve also shown how comparative performance testing is done with games like Horizon Zero Dawn. These articles offered a bit of depth. What I want to do here is show the breadth of game testing and some of the dynamics involved since it’s quite a specialized sub-discipline within testing.

What I’m going to do here is present a lot of information but there is going to be a throughline to each element. By the end of this post, I hope you can see how this breadth of testing in a game context is a very discernible spectrum. So let’s dig in. First, let’s set up some context.

Game Quality Can Be (Initially) Problematic

If you’re a gamer, you certainly know the story of Cyberpunk 2077. It released in December 2020 and had numerous bugs and was virtually unplayable on last-gen consoles. It was touted as being playable on them, however, which was a major sore point with the gaming community since the claim was obviously and demonstrably false. There were a lot of stories about the quality assurance on this game and the politics thereof. A lot of that was more noise than signal but, for testers, probably an interesting episode to watch.

More recently we just had The Callisto Protocol (December 2022), which was not quite as unplayable but had massive and regular stuttering issues on the PC, although apparently not on consoles. Many of these have been fixed at the time of writing.

The common thread here is that games release and, on day one, often have very obvious bugs that make people wonder: how could this possibly not have been seen in testing?

The simple answer is: the problems probably were seen in testing, at least to some extent. In the case of Cyberpunk 2077, we know that they were. Often there is hope that a so-called “Day 1 Patch” will resolve many things, which, when looked at from the outside, does seem to argue for just pushing the release one more day. Other times, the sheer combination of hardware and software makes it much less feasible to just “check for quality.” Games have to run under a wide variety of conditions and are extremely demanding of computer resources, both CPU and GPU, which introduces a whole series of, quite literally, varying variables.

Consider the release of Assassin’s Creed Valhalla, where numerous analyses used the PlayStation 5 graphics settings as a barometer for the PC graphics settings and, in doing so, surfaced a variety of possible bugs in the PC implementation. Probably one of the better analyses I’ve seen of this is this video from Digital Foundry.

Yet I’m actually not here to talk about these points. How games release in the industry is often timeline based and that’s often based on game development studios working with commitments that are made to their publishers.

Game Testing Can Be Difficult

What I do want to re-emphasize here, particularly in relation to the previous posts I mentioned above, is how difficult game testing can be. I’ve talked about this specific point before in a few articles: “Testing Games is Hard,” “Testing Like a Gamer,” and “Gaming Like a Tester.”

Saying “testing games is hard” isn’t meant to excuse the shoddy performance of games, particularly as game publishers increase prices and try to entice buyers with pre-order bonuses of some sort. Rather, it’s simply to show that being a game tester, particularly one who works closely with the design and development process, can be a very challenging, but extremely rewarding, way of specializing in testing. That specializing part is critical, and it’s why this post has “Testing Specialty” in its title. I feel like I can best show this through the breadth of game testing.

In what follows I’m going to use a lot of terminology from the Unreal Engine, simply because it’s so widely used and easy to reference if you want to look up terms. Just know that the terms I mention here have parallels in quite literally all other major game engines, whether that’s CryENGINE, Frostbite, RAGE, Dunia, Havok, Infernal Engine, and so on.

Tester Insights Into Game Fidelity

Here I lump a lot of things under “game fidelity.” What I mean by that are the possible experiences — visual, auditory, tactile, interactive — that a gamer can have and the underlying mechanisms that amplify or degrade the quality around those experiences. I find way too many game testers are not engaged at these multiple levels of abstraction.

Fidelity Around Positioning

One obvious challenge is the positioning of characters. This is a bit harder than it seems. Consider this:

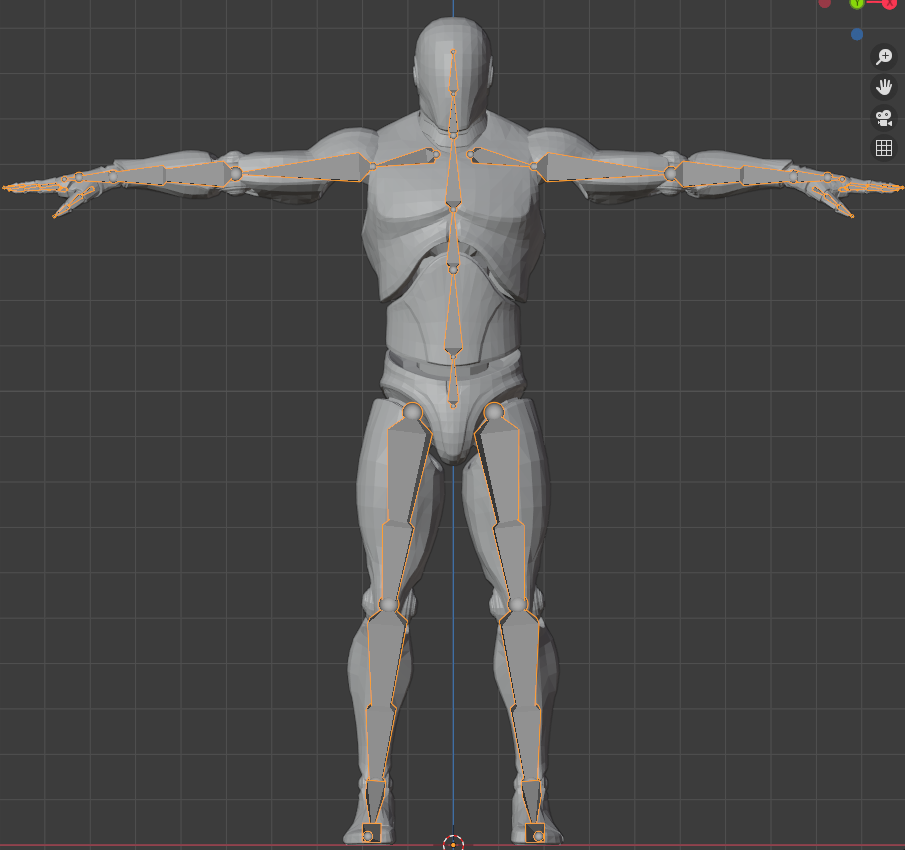

Clearly that’s not looking too good, right? What we want is this:

That seems so simple, and yet to make it work developers use what are called control rigs, which help implement inverse kinematics, in this case applied to the character’s legs.

This is done so that, in this particular case, the character’s feet can look natural on sloped surfaces. But this applies to any situation where the character’s body has to naturally conform to aspects of the environment.

Now here’s a possibly interesting thing: I’ll often have game testers tell me: “I don’t need to really know all that, though. I’m just doing play-testing.” But, as I’m arguing, testing in a game context is not (or at least should not be) just about “play-testing.” It should be about making determinations around the quality of experience. That matches what testing should be about in any context, not just games. Testers always need to be active participants, engaging with developers about how game engines work, how those engines enable game experiences, and how those experiences can have varying levels of quality. Those varying levels of quality are starting to cost game developers, and their publishers, in terms of reputation as well as sales. So there is a real-world economic incentive here as well, if you need a way to make the argument.

Assuming you’ll give me the benefit of the doubt here, let’s say a tester needs to know about this. What do they need to know? Well, in this context, a game tester should know that a control rig is basically a construct that controls properties to drive animation. This gives developers the ability to animate what are called skeletal meshes. Think of a skeletal mesh, quite literally, as a mesh made up of a bunch of polygons with a skeleton inside it. That skeleton is a hierarchical set of interconnected “bones” (joints, really) which can be used to animate the vertices of those polygons.

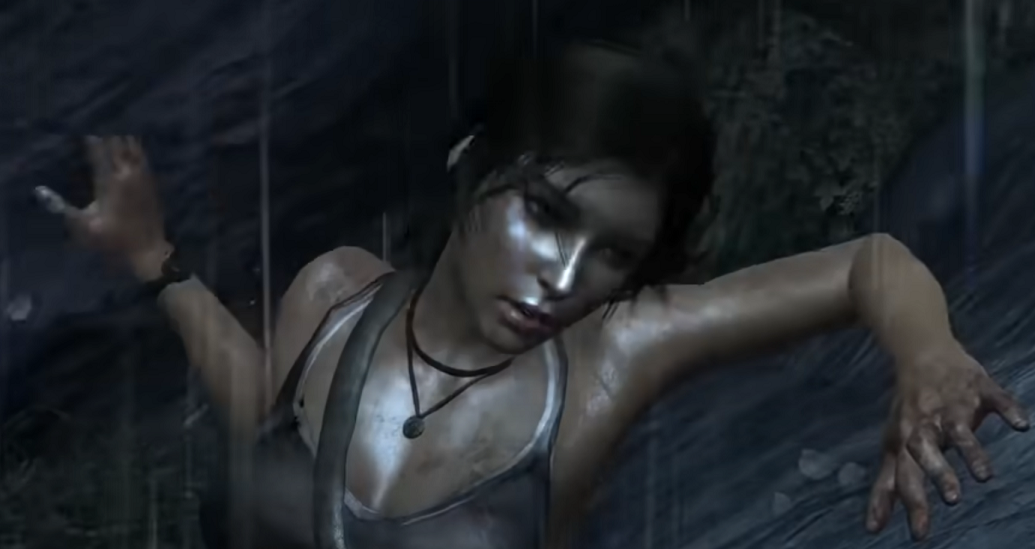

Inverse kinematics, put simply, is the use of kinematic equations to work backward from where something needs to end up (say, where a foot has to land or a hand has to rest) to the joint rotations needed to get it there. In the context of game engines, you might have what’s called an “inverse kinematic rig,” and what this does is handle not just movement but also joint rotation. If you play any of the rebooted Lara Croft games, starting with 2013’s Tomb Raider, you’ll see this play out as Lara moves through tight spaces and puts her hand up against walls.
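To make the idea concrete, here is a minimal sketch of the classic two-bone case (think thigh and shin, or upper arm and forearm), solved in 2D with the law of cosines. It rests on my own simplifying assumptions: the root joint sits at the origin, and the function names are hypothetical. Real engine solvers work in 3D and add pole vectors (to choose which way a knee or elbow bends) and joint limits; this only shows the core "work backward from the target" step.

```python
import math


def _clamp(value, low, high):
    return max(low, min(high, value))


def two_bone_ik(upper_len, lower_len, target_x, target_y):
    """Solve a 2D two-bone chain rooted at (0, 0).

    Returns (root_angle, bend) in radians: the world angle of the upper bone and
    how far the middle joint bends away from a straight limb (0 = fully straight).
    """
    dist = math.hypot(target_x, target_y)
    # Clamp unreachable targets so the limb fully extends (or folds) toward them
    # instead of failing; the small floor avoids dividing by zero at the root.
    dist = _clamp(dist, abs(upper_len - lower_len), upper_len + lower_len)
    dist = max(dist, 1e-9)

    # Law of cosines: interior angle at the middle joint (knee or elbow).
    cos_mid = (upper_len**2 + lower_len**2 - dist**2) / (2 * upper_len * lower_len)
    bend = math.pi - math.acos(_clamp(cos_mid, -1.0, 1.0))

    # Law of cosines again: angle between the upper bone and the root-to-target line.
    cos_root = (upper_len**2 + dist**2 - lower_len**2) / (2 * upper_len * dist)
    root_angle = math.atan2(target_y, target_x) + math.acos(_clamp(cos_root, -1.0, 1.0))
    return root_angle, bend


def end_effector(upper_len, lower_len, root_angle, bend):
    """Forward kinematics: where the foot or hand ends up for the given angles."""
    mid_x = upper_len * math.cos(root_angle)
    mid_y = upper_len * math.sin(root_angle)
    lower_angle = root_angle - bend
    return (mid_x + lower_len * math.cos(lower_angle),
            mid_y + lower_len * math.sin(lower_angle))


# Plant a foot on a sloped surface below and slightly in front of the hip.
angles = two_bone_ik(0.45, 0.45, 0.2, -0.8)
print(end_effector(0.45, 0.45, *angles))  # approximately (0.2, -0.8)
```

Running the solved angles back through forward kinematics is also a handy self-check: if the foot doesn't land where it was asked to, something in the chain is off.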

Notice here how one of Lara’s hands (the upper one) is clipping through the rocks but the other is not.

Okay, so maybe with that example, now, as a tester, you see how the underlying mechanics manifest. But … so what, right? After all, how do you test for all such conditions like this? Or do you?

The answer is: you don’t. You really can’t. At least not in any systematic, repeatable way. But you do look for the general verisimilitude of those mechanics in action. Here’s one of many, many examples:

Watching that snapshot play out in action, you see how Lara moves and how her movements are constrained by the environment around her. This isn’t simple! This takes a lot of dedication to trying out Lara’s movements in various ways in the environment and noting broad classes of problems where her joints don’t rotate in quite the correct way or her body clearly has to contort in a way that no human body could.

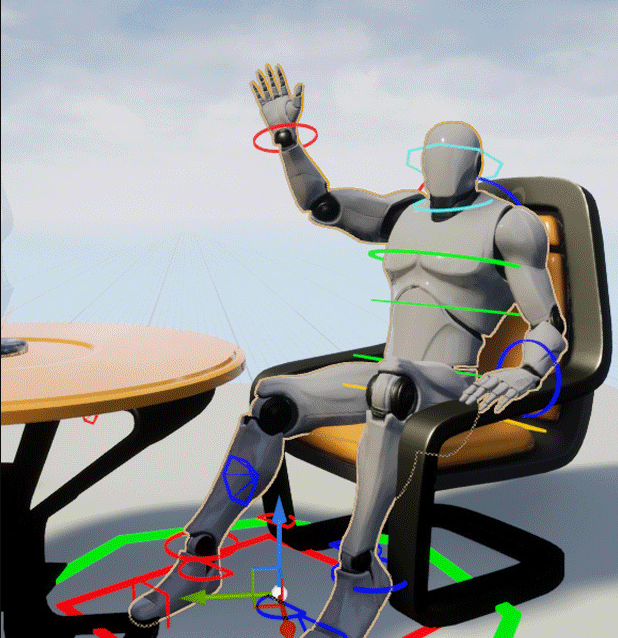

From a testing standpoint, we can look broadly for clipping issues or odd bone kinematics and then have developers examine that scene or level, investigating the priority lines and control bands that dictate where the character can place their body and how their body movement conforms to the surrounding environment, such as ground and walls. We can try things like: “Hey, I’m going to sit in the chair and see how this looks.” And if that looks terrible, developers may start looking at something like this:

Now, let’s consider an example here that I’m going to ask you to think quite a bit about. Consider the game Jedi: Fallen Order (November 2019), where your character, Cal Kestis, spends a great deal of his time running around, particularly doing wall-running.

Not only is this visually important, in terms of the character actually looking like they’re running against a wall, but mismatches in the geometry and how your character’s body responds to that geometry can make environment puzzles like this very frustrating for the player. For example, it can lead to cases where the player is visually on the wall, but control structures in the geometry don’t recognize that and so the player falls because the game thinks the player has undershot or overshot the wall.

I want to provide an extended example, so I went through one sequence that shows a lot of this in action. Consider the following from the game:

Consider all of the possible geometry issues being dealt with there! I want you to think about what it means to test this sequence, given all the timing-related aspects inherent in what’s going on and the environmental physics being modeled, such as ice, air updrafts, and rope physics.

Here’s where this becomes tractable. Many of the issues that would or could occur are part of what we might call equivalence classes. It’s like if we had a bunch of styled buttons on a web page. We can either individually style them or we can use a CSS class to style them as a group. If we can style those buttons as a group by applying a class attribute, we have a better chance of all the buttons reflecting the styling we want (including updates to the styling) and thus we, ideally, have an equivalence class for testing. If one button is styled incorrectly, they probably all are.

The same applies with gaming engines. We can apply physics and control bands and priority lines across the range of “runnable walls” or “ice slides” or “hanging ropes” and that dramatically simplifies the testing experience and what we have to look for.
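The grouping idea can be sketched as plain equivalence-class partitioning. This is a minimal sketch assuming a hypothetical inventory of traversal surfaces, where each surface records which shared setup (its “class,” in the CSS sense) it uses. In a real project that data would come from the level or the engine’s tooling; the field names and IDs here are made up.

```python
from collections import defaultdict

# Hypothetical level inventory: each surface points at the shared setup it uses.
SURFACES = [
    {"id": "wall_01", "kind": "runnable_wall", "profile": "standard"},
    {"id": "wall_02", "kind": "runnable_wall", "profile": "standard"},
    {"id": "wall_03", "kind": "runnable_wall", "profile": "icy"},
    {"id": "slide_01", "kind": "ice_slide", "profile": "standard"},
    {"id": "slide_02", "kind": "ice_slide", "profile": "standard"},
    {"id": "rope_01", "kind": "hanging_rope", "profile": "standard"},
]


def partition(surfaces):
    """Group surfaces that share the same kind and physics profile."""
    classes = defaultdict(list)
    for surface in surfaces:
        classes[(surface["kind"], surface["profile"])].append(surface["id"])
    return dict(classes)


def representatives(classes):
    """Pick one surface per class to test in depth."""
    return {key: ids[0] for key, ids in classes.items()}


for key, surface_id in representatives(partition(SURFACES)).items():
    print(key, "->", surface_id)
```

The assumption doing all the work is that everything in a class really does share one implementation. If a second member of a class behaves differently from the first, that’s a finding in its own right: the partition, or the game’s setup, isn’t what everyone thought it was.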

Also, something I want to note from a personal standpoint: some parts of both Tomb Raider and Jedi: Fallen Order were very frustrating for me. That said, it was not frustration born of distrusting the game or how its environment was modeled. Rather, it was just the timing that I knew I had to get right. By which I mean I trusted the game to be getting the details right, so any failings were likely mine as I learned how to time my movements.

And that’s critical! Gamers being able to trust the experience is something that testers can apply as a guiding heuristic. Actually, that’s the case whether the application you’re testing is a game or not. But it’s crucial in games where players will invest many hours — sometimes hundreds of hours — in the game.

Let’s consider that character movement for a bit. Key to all of that realistic looking movement is that developers can use specific skeletons around the various characters.

Here you can see that blur in the image which shows the rig is in motion and how that rig — acting as a skeleton for the actual character — moves. So developers can put different skeletons onto characters or alter the existing skeleton. But then testing has to make sure that animations still line up correctly, that weapons or other items appear in the character’s hands correctly, and so on. A simple change can lead to something like this:

And keep in mind, this isn’t just a case of positioning the weapon in the hand for one pose; it’s a case of making sure the weapon appears in the character’s hand through all possible motions of the underlying skeleton. So when the player decides to perform an attack, the animation must reliably show the weapon in their hand.
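Why a weapon can look fine in one pose and wrong in another comes down to forward kinematics: a socket’s world position is the result of every parent bone’s transform down the chain. Here is a minimal 2D sketch under my own assumptions (a hypothetical three-bone arm, transforms as `(x, y, angle)`, made-up bone names and offsets). It compares a weapon attached to the hand with one accidentally attached to the shoulder, say after a skeleton change, using an offset that happens to match in the rest pose.

```python
import math

# Each bone: (parent, local transform relative to the parent as (x, y, angle)).
SKELETON = {
    "shoulder": (None, (0.0, 1.5, 0.0)),
    "elbow": ("shoulder", (0.3, 0.0, 0.0)),
    "hand": ("elbow", (0.3, 0.0, 0.0)),
}


def compose(parent, local):
    """Apply a local 2D rigid transform inside a parent's frame."""
    px, py, pa = parent
    lx, ly, la = local
    c, s = math.cos(pa), math.sin(pa)
    return (px + c * lx - s * ly, py + s * lx + c * ly, pa + la)


def bone_world(bone, pose):
    """World transform of a bone; pose maps bone name -> extra animated rotation."""
    parent, (x, y, angle) = SKELETON[bone]
    local = (x, y, angle + pose.get(bone, 0.0))
    return local if parent is None else compose(bone_world(parent, pose), local)


def socket_world(bone, offset, pose):
    return compose(bone_world(bone, pose), offset)


def grip_drift(pose):
    """Distance between the correct grip (on the hand) and the buggy attachment."""
    correct = socket_world("hand", (0.05, 0.0, 0.0), pose)
    buggy = socket_world("shoulder", (0.65, 0.0, 0.0), pose)
    return math.dist(correct[:2], buggy[:2])


print(grip_drift({}))                      # essentially 0: looks fine at rest
print(grip_drift({"elbow": math.pi / 2}))  # about 0.495: sword floats mid-swing
```

This is the reason for sweeping through many poses rather than checking one: the buggy attachment is indistinguishable from the correct one until a parent joint between them moves.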

Fidelity Around Mechanics

Developers often use hit interfaces so that game characters and game objects can implement hit functionality.

So a given weapon or item has a certain boundary around it which dictates when a “hit” occurs between that item and some other item. This, by itself, is pretty simple. A weapon, or item, has a hit interface that allows the developer to establish a boundary within which collisions with other objects will make sense. That can go badly awry, though, when the hit areas are not modeled well. It can show up as an enemy who is clearly well out of weapon range still being able to inflict damage on the player. Consider this from Dark Souls (September 2011):

Here I’m dodging some enemies who are both swinging at me. Their swords have defined hit interfaces around them. In this case my dodge, combined with their distance and the type of weapon, means there should be no hit registered against me. Let’s check out what this looks like in action with Dark Souls 3 (March 2016), with a little test instrumentation added.

Here you can see the game in action, but with skeletal mesh instrumentation as well as what are called “hitboxes” shown. If you’re seeing potentially odd things there, that’s a good thing. There are a lot of hit-detection issues in the game, but they are not so much about the hitboxes themselves as about frame timing. What you see, when testing the game, is that the hitbox for the player is generally good. The weapon hitboxes are also generally good, although they could probably stand to be flattened a bit. The problem in the game is the timing of when those weapon hitboxes become active, measured in frames. Two-handing the greatsword, which is part of what you’re seeing in the video, can hit half an animation earlier if characters are too close to each other.
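A hit, in other words, has a spatial condition (does the weapon’s hit volume overlap the target?) and a temporal one (is the weapon’s hitbox active on this frame?). Here is a minimal sketch of both, assuming a capsule around the blade and a sphere around the target. Engines run these checks through their collision systems with richer shapes, and the frame numbers below are invented for illustration, not taken from Dark Souls 3.

```python
import math
from dataclasses import dataclass


def closest_point_on_segment(p, a, b):
    """Closest point to p on the segment a-b (3D tuples)."""
    ab = [b[i] - a[i] for i in range(3)]
    ap = [p[i] - a[i] for i in range(3)]
    length_sq = sum(c * c for c in ab)
    t = 0.0 if length_sq == 0 else sum(ap[i] * ab[i] for i in range(3)) / length_sq
    t = max(0.0, min(1.0, t))
    return tuple(a[i] + t * ab[i] for i in range(3))


def capsule_overlaps_sphere(blade_start, blade_end, blade_radius, center, radius):
    """Spatial test: does the blade's capsule touch the target's sphere?"""
    nearest = closest_point_on_segment(center, blade_start, blade_end)
    return math.dist(nearest, center) <= blade_radius + radius


@dataclass
class AttackTiming:
    startup: int  # frames before the weapon hitbox switches on
    active: int   # frames the hitbox stays on

    def is_active(self, frame):
        """Temporal test: is the hitbox live on this frame?"""
        return self.startup <= frame < self.startup + self.active


def first_hit_frame(timing, overlaps_on_frame, total_frames):
    """Earliest frame on which both tests pass, or None if the attack whiffs."""
    for frame in range(total_frames):
        if timing.is_active(frame) and overlaps_on_frame(frame):
            return frame
    return None


def point_blank(frame):
    """Target is already inside the swing on every frame (characters too close)."""
    return True


designed = AttackTiming(startup=12, active=6)
shipped = AttackTiming(startup=6, active=12)  # hypothetical frame-data mistake
print(first_hit_frame(designed, point_blank, 40), first_hit_frame(shipped, point_blank, 40))  # 12 6
```

A tester-friendly check falls out of this: at any spacing, the first hit should never land before the designed startup frame.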

There’s a ton we could talk about and investigate here but let’s keep moving along.

From a testing standpoint, a lot of what I just described gets tied into what Unreal calls Chaos Destruction. Chaos is the name of Unreal Engine’s physics engine, and Chaos Destruction is its system for breaking objects apart. Some games are striving for fully destructible environments.

That scene is from Battlefield 2042 (November 2021) and shows some of the destructibility of environment meshes. Battlefield runs on Frostbite rather than Unreal, but in Unreal terms this is the kind of effect you’d build by using Chaos Destruction to fracture meshes.

As a note, I’ve said “mesh” a lot in this post. Just know that a mesh, in this context, is a piece of geometry that consists of a set of polygons. When you fracture a mesh, you essentially define how, and along which lines, it breaks apart. Physics fields are then used to apply that destruction to specific objects, and you can use various levels of fracturing. Here’s an example of a character in action, shattering some pots:

So that’s one thing for testers to be aware of: destructible environments, and making sure that destruction physics works in a way that makes sense. “Makes sense” here means consistent with how you are hitting the objects and with what’s around them, since the fragments may bounce off walls or fall off cliffs.
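One common way to fracture a mesh, and one I believe Unreal’s fracture tools offer alongside other patterns, is Voronoi fracturing: scatter seed points through the object, and every bit of the object belongs to whichever seed is nearest. Here is a toy sketch of that idea on sample points rather than real geometry, including “levels” of fracturing by re-fracturing each fragment. Everything here, including the function names and the pot’s dimensions, is illustrative.

```python
import math
import random


def voronoi_split(points, seeds):
    """Assign every point to its nearest seed; each non-empty group is one fragment."""
    fragments = [[] for _ in seeds]
    for p in points:
        nearest = min(range(len(seeds)), key=lambda i: math.dist(p, seeds[i]))
        fragments[nearest].append(p)
    return [f for f in fragments if f]


def fracture(points, seeds_per_level, rng, level=0):
    """Recursively fracture: level 0 splits the object, level 1 splits each piece, etc."""
    if level == len(seeds_per_level) or len(points) < 2:
        return [points]
    seeds = rng.sample(points, min(seeds_per_level[level], len(points)))
    pieces = []
    for fragment in voronoi_split(points, seeds):
        pieces.extend(fracture(fragment, seeds_per_level, rng, level + 1))
    return pieces


rng = random.Random(7)
pot = [(rng.uniform(-1, 1), rng.uniform(-1, 1), rng.uniform(0, 2)) for _ in range(500)]
pieces = fracture(pot, [5, 3], rng)
print(len(pieces), "fragments")
```

From a testing angle, the invariants are the interesting part: no piece of the object should vanish or be duplicated by fracturing, and deeper levels should only ever produce smaller pieces.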

This idea of hit interfaces and even destructible meshes applies also in combat where we have what are called directional hit reactions. Here you can see that in action a bit as a character engages with an enemy.

Visual elements there are showing how the game engine is helping us understand how the directional hit mechanics work. This leads to a lot of other equivalence classes that allow you to reduce the overall testing effort but still apply it to specific situations. For example, you might try applying this against human-sized opponents, as in the visual, but also against much smaller opponents and much larger opponents.

Behind the scenes, developers use vector operations to calculate the hit direction relative to the way the enemy is facing, and those vectors feed directly into how the interaction plays out.
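Here is a minimal sketch of that vector math. It assumes a top-down 2D view with a right-handed convention (positive angles are counterclockwise, so toward the enemy’s left) and 45-degree quadrants I picked for illustration. The dot product says how much the hit came from the front, the cross product says which side, and `atan2` of the two gives a signed angle. Engines differ in axis conventions (Unreal, for instance, is left-handed), so the sign of the left/right test flips accordingly. The animation names are hypothetical.

```python
import math


def hit_direction(facing, enemy_pos, impact_pos):
    """Classify where a hit came from relative to the way the enemy faces.

    All arguments are 2D (x, y) tuples on the ground plane.
    """
    to_hit = (impact_pos[0] - enemy_pos[0], impact_pos[1] - enemy_pos[1])
    dot = facing[0] * to_hit[0] + facing[1] * to_hit[1]      # > 0: in front
    cross = facing[0] * to_hit[1] - facing[1] * to_hit[0]    # > 0: to the left
    angle = math.degrees(math.atan2(cross, dot))             # -180..180
    if -45 <= angle <= 45:
        return "front"
    if 45 < angle < 135:
        return "left"
    if -135 < angle < -45:
        return "right"
    return "back"


# Hypothetical mapping from hit direction to a stumble animation.
STUMBLE = {"front": "stagger_backward", "back": "stagger_forward",
           "left": "stagger_right", "right": "stagger_left"}

print(STUMBLE[hit_direction((1, 0), (0, 0), (0, 2))])  # stagger_right
```

The four quadrants are themselves equivalence classes: one well-chosen hit per quadrant, per opponent size, covers a lot of ground.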

Developers use root motion animations so that enemies, when hit, stumble in the right direction.

Root motion refers to character motion that is driven by the animation of what’s called the root bone of the skeleton. The “root bone” is a reference to a defined origin point (0,0,0) with no rotation applied. So what ends up happening is we look at our root motion:

There’s a lot to be said here but what’s crucial to understand is how all of the above really swirls around the mechanics of how characters move or animate and the dynamics that this allows in the game, such as interaction with the environment. In the above visual, you have armature bones that are used to move the body, which has its root bone in the hip, and the inverse kinematic controllers around the arms and legs perform the movement.
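A minimal sketch of the root-motion idea, under simplifying assumptions of my own (2D, positions only, a made-up stumble clip): the root bone’s frame-to-frame movement is pulled out of the animation and applied to the character’s actual position, rotated into the direction the character faces. That keeps where the character visibly stumbles and where the game thinks it is in sync. Real engines also extract rotation and blend between animations.

```python
import math


def root_deltas(root_track):
    """Per-frame root bone movement, in the animation's own space (+x = forward)."""
    return [(b[0] - a[0], b[1] - a[1]) for a, b in zip(root_track, root_track[1:])]


def apply_root_motion(start, yaw, deltas):
    """Move the character by each delta, rotated by its facing yaw (radians)."""
    c, s = math.cos(yaw), math.sin(yaw)
    x, y = start
    path = [(x, y)]
    for dx, dy in deltas:
        x += c * dx - s * dy
        y += s * dx + c * dy
        path.append((x, y))
    return path


# Hypothetical hit-stumble clip: the root slides backward over three frames.
stumble = [(0.0, 0.0), (-0.1, 0.0), (-0.25, 0.0), (-0.3, 0.0)]
path = apply_root_motion((5.0, 5.0), math.pi / 2, root_deltas(stumble))
print(path[-1])  # roughly (5.0, 4.7): pushed back along the character's facing
```

If the character faces +y and the clip moves the root backward, the character should end up further along -y; a mismatch there is exactly the kind of “stumbled the wrong way” bug testers can spot by eye.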

All of what I just described was certainly modelable within the Dark Souls context I showed earlier, but this also brings up a good point: turning on all possible instrumentation to view what’s happening can actually compromise testing quite a bit, because it can make it harder to reason about what’s going on. As with any tooling used to support testing, you have to be careful about how much of it you use.

A bit off-topic and yet certainly related, let’s consider client and server discrepancies. Consider this:

What that visual is showing you is a case where the client (left part of image) shows that the player picked up an object, in this case a sword, but that’s not being reflected on the server (right part of image), where the sword is clearly still on the ground. This means the server is not registering damage correctly because, as far as it’s concerned, the player has not picked up the weapon that would cause the damage. This can obviously be very important in any multiplayer context, such as PvP. In the Dark Souls context, situations like this would be even more rage-inducing than the game itself is for many players.
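That kind of desync is why many multiplayer games make the server authoritative: the server only applies damage from a weapon it agrees the attacker is holding. Here is a minimal sketch of that rule plus a tester-side helper that diffs the client’s and server’s views of who holds what. The class, method names, and IDs are hypothetical, not any engine’s networking API.

```python
from dataclasses import dataclass, field


@dataclass
class ServerState:
    equipped: dict = field(default_factory=dict)  # player -> weapon (server's view)
    health: dict = field(default_factory=dict)

    def handle_pickup(self, player, weapon):
        self.equipped[player] = weapon

    def handle_hit(self, attacker, target, weapon, damage):
        """Apply damage only if the server agrees the attacker holds the weapon."""
        if self.equipped.get(attacker) != weapon:
            return False  # the client thinks it's armed; the server doesn't
        self.health[target] -= damage
        return True


def find_desyncs(client_equipped, server_equipped):
    """Players whose equipped weapon differs between client and server views."""
    players = set(client_equipped) | set(server_equipped)
    return sorted(p for p in players
                  if client_equipped.get(p) != server_equipped.get(p))


server = ServerState(health={"enemy": 100})
client_view = {"player": "sword"}  # the client already shows the pickup
print(find_desyncs(client_view, server.equipped))         # ['player']
print(server.handle_hit("player", "enemy", "sword", 25))  # False: no damage
server.handle_pickup("player", "sword")                   # the pickup finally arrives
print(server.handle_hit("player", "enemy", "sword", 25))  # True
```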

Fidelity Around Dynamics

The very same type of math and logic talked about above can also handle another aspect of game dynamics. Many games give enemies the ability to see the player’s character if it moves into their line of sight, which is usually framed as a “vision cone.”

This kind of thing is really important in stealth-based games that rely on you navigating your way around patrolling enemies. Consider games like Dishonored (October 2012), Shadwen (May 2016), or Styx: Master of Shadows (October 2014), where being stealthy is a core mechanic. At its simplest level, this means enemies may get hostile and chase you but then lose interest if you run far enough away or break their line of sight.

What you’re seeing in that visual is that the character has moved into an enemy’s cone of vision (represented on the ground by the red sphere between them), and now the two characters are linked by a line of sight, represented by red spheres around their midsections. This can now be used as an input to other game mechanisms, such as pathfinding that allows the enemy to pursue the character.
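A minimal sketch of a vision-cone check plus the “lose interest” behavior described above. It assumes a 2D ground plane, a view distance and half-angle I’ve made up, and a `blocked` callable standing in for the engine’s line trace (raycast) against level geometry; the state names are hypothetical too.

```python
import math


def in_vision_cone(eye, facing, target, view_distance, half_angle_deg):
    """True if the target is within range and inside the cone around facing."""
    dx, dy = target[0] - eye[0], target[1] - eye[1]
    dist = math.hypot(dx, dy)
    if dist > view_distance:
        return False
    if dist == 0:
        return True
    cos_angle = (dx * facing[0] + dy * facing[1]) / (dist * math.hypot(*facing))
    return cos_angle >= math.cos(math.radians(half_angle_deg))


def can_see(eye, facing, target, blocked, view_distance=15.0, half_angle_deg=40.0):
    """Cheap cone check first, then line of sight (a raycast in a real engine)."""
    return (in_vision_cone(eye, facing, target, view_distance, half_angle_deg)
            and not blocked(eye, target))


def update_alert(state, sees_player, unseen_ticks, give_up_after=3):
    """Chase while the player is visible; give up after enough unseen ticks."""
    if sees_player:
        return "chase", 0
    if state == "chase":
        unseen_ticks += 1
        if unseen_ticks >= give_up_after:
            return "patrol", 0
        return "chase", unseen_ticks
    return state, 0


def no_walls(a, b):
    return False


print(can_see((0, 0), (1, 0), (10, 3), no_walls))  # True: in range, ~17 degrees off-axis
print(can_see((0, 0), (1, 0), (-5, 0), no_walls))  # False: behind the enemy
```

Each boundary here (cone edge, view distance, wall occlusion, the give-up timer) is a natural place for a tester to probe: stand just inside, just outside, and right on the line.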

Now I’ll ask you to consider a game series like Hitman. Specifically, consider the following sequence:

This is a mission in Marrakesh where the idea is you want to get the cameraman’s outfit as a disguise. There are various characters in the game, some of whom will entirely ignore you and others who will actively challenge you if they spot you. Sometimes they challenge you only if you are in an area that you are not supposed to be, given your choice of disguise.

Consider the sequence you see in that video. Don’t look at it as a game player. Look at it as a game tester. Think about what it means to test just this particular sequence in a game that is filled with many such sequences. And as you look at that example, realize that it — like the Jedi: Fallen Order example shown earlier — shows all of what I talked about above. So in these snippets, you are seeing the breadth of testing, or at least what testing can be, in this context.

Fidelity Around Appearance

Let’s look at one more area. A lot of what I mentioned above is about graphics and geometry and how mathematics works alongside them. One of the killer quality issues for games is frame rate. Frame rate is the number of frames the game renders per second, and it largely determines how smooth, or how jerky, the experience feels to the player.

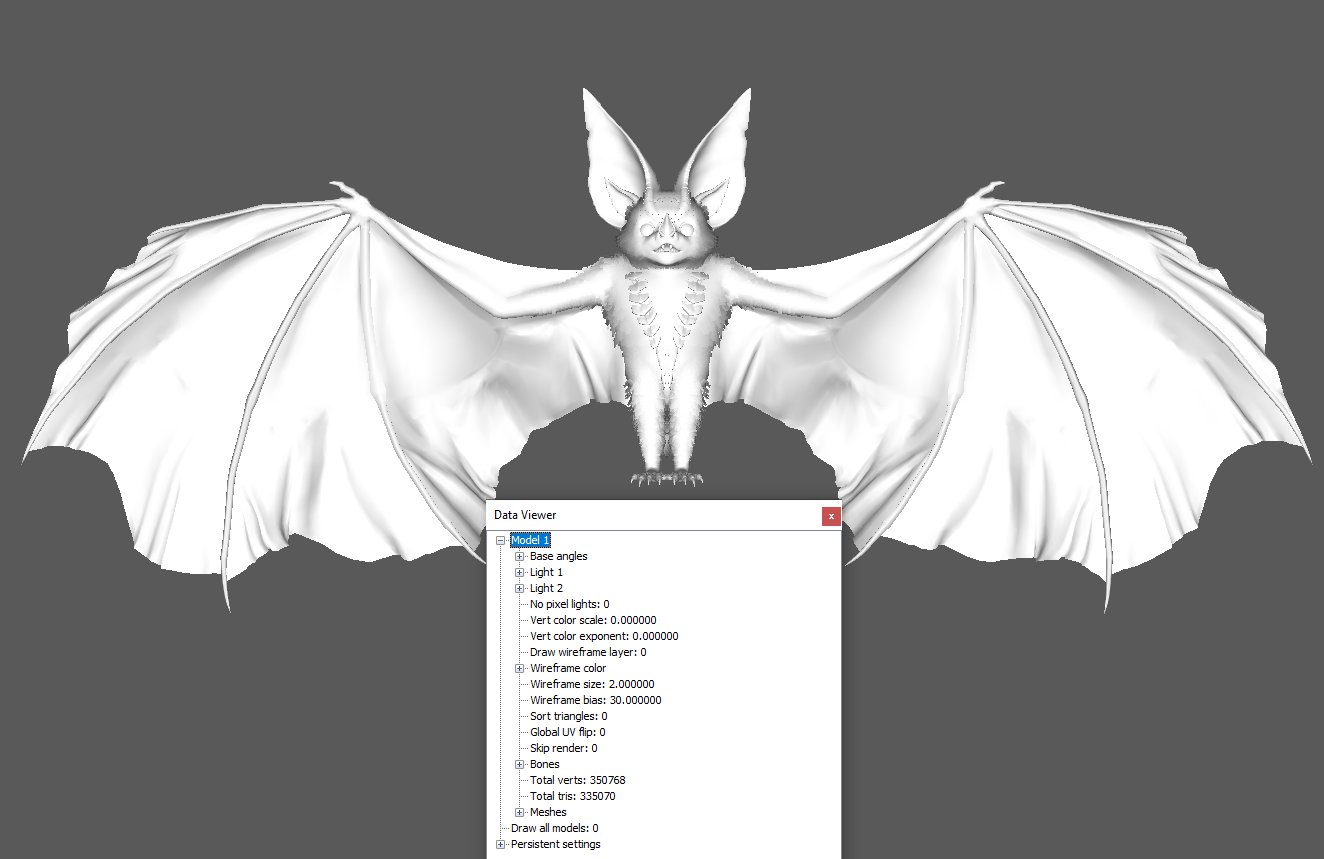

Consider that Stranger of Paradise: Final Fantasy Origin (March 2022) had a whole lot of frame rate issues, not to mention resolution and visual ones. This, like Cyberpunk 2077, seemed to manifest more on consoles than it did on PC. Testing actually showed that this was largely because the models the developers were using were extremely unoptimized. One example of this was thirty-megabyte geometry for some of the most common enemies. Consider this:


That’s the game’s bat model. If you saw this as a tester, what might you think? Perhaps nothing — if you don’t know what to look for. A key thing would be those “total verts” and “total tris”. Here these are 350,768 and 335,070, respectively. Is that bad or good? As a tester, even if you’re not responsible for answering that, you should want to be able to answer it. That bat model serves as a specification for that particular enemy. And that specification is showing something way out of whack.

But let’s break this down a little bit. Verts is short for vertices. A tri (triangle) is the simplest form of polygon. Put very simplistically, when neighboring triangles share edges, 1 tri = 3 verts, 2 tris = 4 verts, and 3 tris = 5 verts.
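Those numbers follow the pattern of a triangle strip, where each new triangle shares an edge with the previous one and so needs only one new vertex. Written out with $T$ for triangles and $V$ for vertices, alongside two reference cases I’m adding for comparison (triangles that share nothing, and a closed, hole-free surface where every vertex is stored once, which follows from Euler’s formula with each edge shared by two triangles):

$$
\begin{aligned}
V_{\text{strip}} &= T + 2 && (T = 1, 2, 3 \;\Rightarrow\; V = 3, 4, 5)\\
V_{\text{unshared}} &= 3T\\
V_{\text{closed}} &= \tfrac{T}{2} + 2 && \left(V - E + T = 2,\; E = \tfrac{3T}{2}\right)
\end{aligned}
$$

Which relation applies depends on how the mesh is built, which is why the two counts move together but aren’t interchangeable.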

A vertex at its most basic is simply a point in space: an X, Y, and Z coordinate that gives a position. A triangle is the flat shape you get when you join up three vertex positions. Triangles form the shell of the three-dimensional model that players will see and interact with in game.

In any given game, each vertex traditionally has a lot more information attributed to it, such as color, normal (the direction that it points in, for lighting), tangents and sometimes binormals (for normal mapping) and various UV coordinates (for texture mapping).

What this means is that vertex count is one of the most precise ways to convey mesh complexity. For game optimization, the smaller the triangle or vertex count, the better. That said, the fewer you have, the lower the quality of the visual. So you want to optimize while maintaining acceptable visual fidelity.
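To put the bat’s numbers in memory terms, here is a back-of-the-envelope sketch. It assumes an uncompressed layout of my own choosing: 32-bit floats for position, normal, a four-component tangent, and one UV set, plus 32-bit indices. Real engines typically compress several of these attributes, so treat the result as an order-of-magnitude estimate, not the game’s actual footprint.

```python
# Bytes per vertex attribute, assuming uncompressed 32-bit floats.
ATTRIBUTE_BYTES = {
    "position": 3 * 4,  # x, y, z
    "normal": 3 * 4,    # direction used for lighting
    "tangent": 4 * 4,   # xyz plus a sign commonly used to rebuild the binormal
    "uv0": 2 * 4,       # one set of texture coordinates
}


def vertex_stride(attributes):
    return sum(ATTRIBUTE_BYTES[name] for name in attributes)


def mesh_buffer_bytes(verts, tris, attributes, index_bytes=4):
    """Return (vertex buffer bytes, index buffer bytes) for an indexed mesh."""
    vertex_buffer = verts * vertex_stride(attributes)
    index_buffer = tris * 3 * index_bytes  # three indices per triangle
    return vertex_buffer, index_buffer


# The bat model's counts as quoted above.
vb, ib = mesh_buffer_bytes(350_768, 335_070, list(ATTRIBUTE_BYTES))
print(f"vertex buffer ~{vb / 1e6:.1f} MB, index buffer ~{ib / 1e6:.1f} MB")
```

Under these assumptions that prints roughly 16.8 MB of vertex data and 4.0 MB of index data for a single common enemy. With more than 65,536 vertices, the bat also can’t use 16-bit indices, so the 32-bit index size here isn’t optional.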

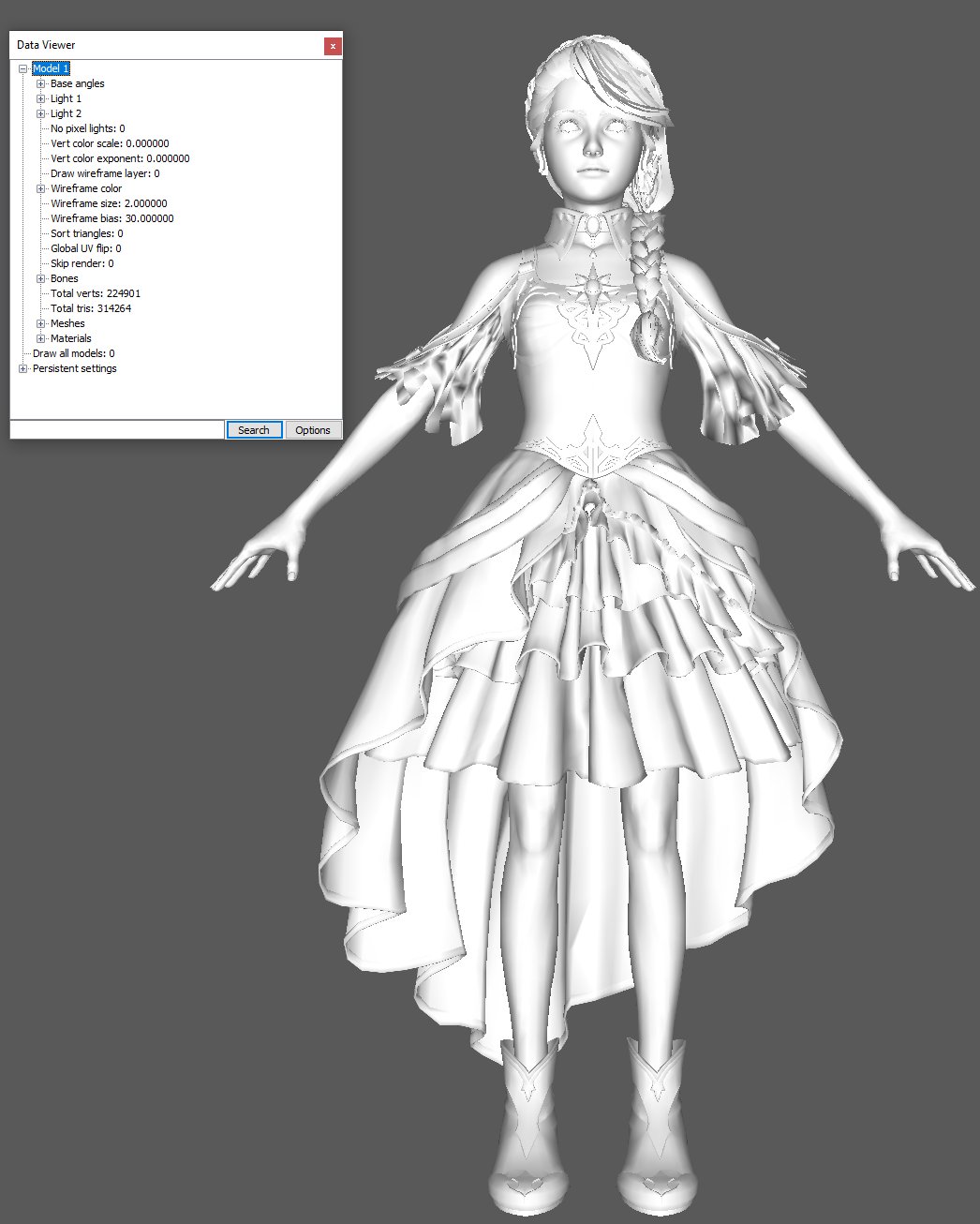

Let’s consider another model from the game:

Here the verts are 224,901 and the tris are 314,264 which, even for a seemingly more complex overall model, is simpler than our bat. How about this one:

Verts are 273,623 and tris are 144,537. One more, which is a more complete model:

Here our verts are 234,263 and the tris are 393,105.

Now, given all the numbers you just looked at, what stands out to you? What stands out is that the bat, a very common enemy with many instances in the game, has more verts than any of the other, ostensibly more complex, models and more tris than all but one of them. That points to a very poorly optimized model. And since that model is serving as a specification, and since testers should be good at finding gaps in specifications, this is something testers should be able to help developers spot.
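The comparison itself is easy to mechanize once the numbers are written down. This sketch uses the counts quoted above; the labels for the three non-bat models are my own, since those models aren’t named here.

```python
# (verts, tris) as quoted above for each model.
MODELS = {
    "bat": (350_768, 335_070),
    "second model": (224_901, 314_264),
    "third model": (273_623, 144_537),
    "fourth model (more complete)": (234_263, 393_105),
}


def ranked(models, column):
    """Model names sorted from heaviest to lightest by verts (0) or tris (1)."""
    return sorted(models, key=lambda name: models[name][column], reverse=True)


for label, column in (("verts", 0), ("tris", 1)):
    print(label, ranked(MODELS, column))
```

A ranking alone doesn’t prove a model is too heavy; the judgment comes from pairing it with how often the model appears. A bat that shows up many times on screen multiplies whatever its mesh costs.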

The bat isn’t even the worst offender. There’s a boss model in the game that’s ninety megabytes — and that’s the geometry alone, with no textures or anything applied to it — and it has 1.8 million tris.

From a pure resource standpoint, the number of vertices affects the memory size of your mesh in RAM but is rather negligible in terms of GPU speed. The number of triangles, by contrast, affects GPU utilization more than it affects memory size. On the experience side, this can give us clues as to how players will experience the game on certain graphics cards or at certain resolutions.

Now, how does this actually manifest? Well, a character called The Minister in the game drops an NVIDIA GeForce RTX 3090 — no slouch of a graphics card — to twenty-five frames per second and this is largely because of the character’s fur coat. You can see that bit of testing by the following:

Consider another scene from the game where the graphics settings were specifically put on minimum with no shadows. (Shadows are one of the known performance degraders.) Here is how that scene plays out:

The frames per second drop terribly, going to 8 at one point which, needless to say, is pretty awful. If you watch the video, you can likely discern exactly what’s causing the performance hit there.
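Frame rates are easier to reason about as frame times, since that’s what the player actually feels. Here is a minimal sketch that converts FPS to milliseconds per frame and flags frames that blow well past a target budget. The 60 FPS target, the 1.5x spike threshold, and the sample frame times are arbitrary choices of mine.

```python
def frame_time_ms(fps):
    """Milliseconds each frame takes at a steady frame rate."""
    return 1000.0 / fps


def stutter_frames(frame_times_ms, target_fps=60, spike_factor=1.5):
    """Indices of frames that took much longer than the target budget."""
    budget = frame_time_ms(target_fps)
    return [i for i, t in enumerate(frame_times_ms) if t > budget * spike_factor]


print(frame_time_ms(60), frame_time_ms(25), frame_time_ms(8))
print(stutter_frames([16.6, 16.7, 40.0, 16.5, 125.0, 16.6]))  # [2, 4]
```

Twenty-five FPS means every frame takes 40 ms, and eight FPS means 125 ms, against roughly 16.7 ms at 60 FPS. A stutter is a single long frame in an otherwise normal run, which is why a per-frame view catches what an average FPS counter can hide.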

The Breadth is Large

What I hope you saw here is that the breadth of game testing alone, never mind the depth, makes for an exciting field. How exciting it is, however, depends in my experience on whether you, as a tester, are fostering testing as a specialty within the environment, such that you are engaging in design and development, or are just doing so-called “play-testing.”

Games, just like any applications, have many amplifiers and degraders of quality that testers have to be looking for. Likewise, just as in any testing, testers want to be looking for how much testability is a part of the process. Testability, at minimum, means controllability and observability. So consider that in relation to some of what I showed you above. Developers may look at all of that in the context of their game engine, but that game engine is still an abstracted interface from what the player will actually be experiencing.

Let’s even approach this breadth from yet one more angle.

Player Emotion and Trust

Game mechanics interact to generate events, which in turn provoke emotions in players. To cause emotion, an event must signal a meaningful change in the world. In fact, to provoke emotion, an event must change some human value. A human value, for our context, is anything that is important to people that can shift through multiple states.

So consider how this might play out in a given game. Here I’ll point you to a review of Dishonored 2. A key part from that review is:

I’m surprised when a guard is immolated after I shoot him with a sleep dart. But it happens for a reason. In Dishonored 2, certain bottles of alcohol burst into flame when smashed—a trick useful for burning down the nests of Karnaca’s parasitic bloodflies. This is a universal rule that exists outside of the player’s direct involvement—a rule that can trigger when, for instance, a recently tranquilized guard drops their drinking glass onto a bottle. It’s not about realism—this is a game in which one of the main characters has a parkour tentacle—but it works, and feels immersive, because everything has its own defining laws within the fiction. The biggest joy of Dishonored 2 is in discovering these systems, and manipulating them to your own ends. That wouldn’t work if you couldn’t trust in its simulation of the world.

This is another area of trust. In this case, the player is trusting in the simulation of the world and not just the geometry that underlies that world. See, once again, how the breadth works? The geometry of the world morphs into the simulation of the world! This is a case where testers can act as great user advocates. You try things out and see what experiences you have and you can encourage the design and development towards that “bumping the lamp” experience I mentioned in my above-referenced post.

As another bit from that review:

The first time I play the opening mission, I kill my target. Later, a guard announces to his men that their leader is dead. The second time through, I again kill my target, but hide his body in a secret room, locking the door behind me. This time, the guard announces that their leader is missing. With no way to access the room containing his corpse, his fate remains a mystery. It’s a tiny thing—a single voice line—but it builds that trust. It would make sense for the game to treat dead or alive as a binary state, but Dishonored 2 knows that these details are important. It respects your ingenuity, acknowledging when you’ve done something clever.

This is a game that was very well tested. You can see an article — “Dishonored 2 QA testers are doing things devs didn’t even think of” — that amplifies that very point.

This, my friends, is the fun and the challenge of testing in a game context.

But, crucially, we need more test specialists in this context, not just “play-testers.”
