What I want to show in this post is a history where “teaching” and “tutoring” became linked with “testing” which became linked with “programmed instruction” which became linked with “automatic teaching” and thus “automatic testing.” The goal is to show the origins of the idea of “automating testing” in a broad context. Fair warning: this is going to be a bit of a deep dive.

I would also ask readers to note that this post is titled A history as opposed to THE history.

The Pedigree of Replacing Humans

Perhaps worth noting here is that “replacing humans,” or at least replacing human activities, has, as one of its focal points, an emphasis on computers — and computing — in general.

Before electronics, before calculating machines, and even before the Industrial Revolution kicked off, there were computers. However, the term didn’t mean the same thing it does today. A “computer” was a person! Specifically, it was a person who performed calculations, generally for a specialized purpose.

The computer profession (and here I mean people) originated after the development of the first mathematical tables in the sixteenth and seventeenth centuries. Some of these were logarithmic tables, designed to perform complex mathematical operations through addition and subtraction. Others were trigonometric tables, designed to simplify the calculation of angles for disciplines like surveying and astronomy.

Computers were the people who would perform the calculations necessary to produce these tables.

The first permanent table-making project was established way back in 1766 by a guy named Nevil Maskelyne. His goal was to produce navigational tables that were updated and published annually in the Nautical Almanac and Astronomical Ephemeris.

Maskelyne relied on (human) computers to perform his calculations, but as the Industrial Revolution, which started around 1750, rolled on, a French mathematician named Gaspard Riche de Prony established what was essentially a computing factory in 1791. This “factory” was made up of human computers, most of whom had lost their previous jobs in the wake of the 1789 French Revolution. These computers were tasked with compiling accurate logarithmic and trigonometric tables to aid in performing a new survey of France as part of a property tax reform.

De Prony relied on a small number of skilled mathematicians to define the mathematical formulas and a group of “instructors” to organize the tables and create the instructions for doing the calculations. With all that in place, his ninety or so (human) computers needed only a knowledge of basic addition and subtraction to do their work. This reduced the (human) computer to an unskilled laborer.

Okay, great, so why am I going through all this? Well, this trend of the so-called “unskilled laborer” moved up into the twentieth century in various guises. Consider the Comptometer room at the Stratford Co-operative Society.

This shows young girls and boys working as human computers on model ‘E’ comptometers in 1914. Or consider the Computing Division of the United States Veterans Bureau in 1924 with human computers.

As the First Industrial Revolution progressed past 1840, we got to the Second Industrial Revolution, starting in 1870 and ending around 1914. Along the way, the “unskilled workers” in many fields moved from using simple tools to mechanical factory machinery to do their work. This takes us to the Third Industrial Revolution, starting in 1947. Here people would attempt to bring such mechanical tooling to computing as well.

I won’t talk about that history any further here, as it isn’t the focus. Suffice it to say that most of the early computers (and here I mean the machines) were created specifically to perform calculations. So as those machines evolved to function with less need for human intervention, they naturally came to be called “computers” themselves, after the profession they eventually replaced.

Tooling (Ideally) Supports Humans

All this history can sound dire. We have a catalog of humans replacing themselves. But we don’t, really. We have a history of humans augmenting themselves.

Humanity as a whole has progressed with advancements in knowledge and in technology, with the two driving each other. This happens in testing as well. All of the experimentation, and thus testing, in our sciences was based on the ability of humans to produce tooling, like microscopes and telescopes. In fact, while researching this post, I came across a book written by Herbert Hall Turner back in 1901. It’s called Modern Astronomy: Being Some Account of the Revolution of the Last Quarter of a Century.

In this book, Turner says:

Before 1875 (the date must not be regarded too precisely), there was a vague feeling that the methods of astronomical work had reached something like a finality: since that time there is scarcely one of them that has not been considerably altered.

Here Turner was referring to the invention of the photographic plate in relation to the telescope. Scientists were no longer sketching what they saw through telescopes but recording exactly what was seen onto huge metal plates coated in a chemical that reacted to light.

In addition to that, telescopes were getting larger, meaning they could collect more light to see fainter and smaller things. Turner presents a diagram showing how telescope diameters had increased from a measly ten inches in the 1830s to a whopping forty inches by the end of the nineteenth century.

And that evolution has continued. The Gran Telescopio Canarias is an optical telescope with an aperture of 10.4 meters or almost 410 inches. There’s even a planned 30 meter (about 1,181 inch) telescope that has been halted in construction for some time.

Turner’s point there was that tooling has certainly changed quite a bit. Another point Turner was making is that tooling had worked to support a discipline.

But … Did We Replace Astronomers?

Historically we can realize that both then and now, no one confused “doing astronomy” with the simple act of “using a telescope” any more than many people would confuse “doing mathematics” with “using a calculator.” This, I would argue, is in the same way that many don’t truly confuse “doing testing” with “using automation.” That being said, many testers would actually contest that claim and suggest there is exactly such confusion in the industry.

A key point to my example above from Turner’s book is that a strong desire for more knowledge about the size and contents of the universe, to see further and fainter things, drove the advancement of telescopes. Astronomers became really tired of using cumbersome photographic plates and so the invention of digital light detectors was pioneered mainly by astronomers.

Note: by astronomers! There’s a corollary here. How much automated tooling is actually constructed and designed by testers, as opposed to developers?

Such tooling tends to beget more tooling. For example, the invention of digital light detectors saw improvements to image analysis techniques, which were needed to understand the more detailed digital observations. Those techniques then fed into medical imaging, such as magnetic resonance and computerized tomography scanners, now used to diagnose a whole continuum of ailments.

My point here: tooling, working in concert with a discipline and supporting it, is not a new thing.

So, no, we did not replace astronomers with tooling. But then did we replace “manual astronomy”? And, if so, what even is such a thing?

The “Manual” Qualifier

Now we come to a phrase that a lot of modern testers like to trot out.

“THERE IS NO MANUAL TESTING”

I already talked about this a bit in my post on manual testing deniers. Framed in terms of what I just talked about here, a tester might say we don’t refer to “manual astronomy” (done by human eye through telescope) and “automated astronomy” (done by analysis tools working with telescopes).

And that’s true! Thus the contention becomes: so why do we say “manual testing”?

The same sort of argument can be made to say that we don’t speak of “manual programmers” or “manual developers.” That, too, is very true.

So how did we get here?

How did we get to a point where one discipline — testing — got a qualifier attached to it — “manual” — where other disciplines did not? A lot of testers ask this question but almost as if there’s no real answer to it beyond some obstinate industry that simply devalues human testing.

Yet it’s really not that simple. So let’s talk about that history a bit because the history shows us some very strong currents, including how to combat those currents. Sometimes to fight a current trend that you believe is harmful, it helps to understand the historical forces that led to it.

Machines for Teaching

For the record, we’ll exclude something like 1954’s Auto Test here!

There actually is some relevance to the assumption that this automated simulation of driving is, as the ad states, a “REAL TEST of Driving Skill behind the wheel.” But clearly there’s a play on meaning here with “automobile” and we have to recognize that. That said, the time frame is spot on. We are going to head back to the 1950s and 1960s.

As computers (and not the human ones) advanced in their capabilities, there was a push to apply technology in the classrooms. The history of this impetus is very well understood: the launch of the Sputnik satellite on 4 October 1957 by the Soviet Union. It was felt a “space race” was coming — really a technology race — and thus American education had to prepare its youth for that. Better schooling was required in math and science.

In September 1958, the United States Congress passed the National Defense Education Act. This provided funds to improve teaching, particularly of science and mathematics. The federal government thus made money available for innovative approaches, like using technology in the classroom. This was mirrored by money supplied by private institutions like the Carnegie and Ford Foundations.

Some of these innovative approaches were to see if it was possible to improve the efficiency of instruction, which was referred to as “manual instruction” and understood to mean “instruction provided ‘manually’ by a human teacher.” Guiding this was a combination of psychological ideas around behavioristic strategies and technological ideas around so-called computer-aided instruction.

Of relevance here is an article from George A.W. Boehm entitled “Can People Be Taught Like Pigeons?” This appeared in the October 1960 issue of Fortune.

This article talked about an allegedly revolutionary new method called “programmed instruction.” Such instruction was delivered via purpose-built devices known as “teaching machines.” The method’s inventor and principal advocate was a Harvard professor named Burrhus Frederick Skinner. According to Skinner:

The project had forced us to consider the education of large numbers of pigeons . . . . One great lesson of our experimental work seemed clear: it is unthinkable to try to arrange by hand the subtle contingencies of reinforcement that shape behavior. Instrumentation is essential. Why not teach students by machine also?

That quote comes from B.F. Skinner’s article “Teaching Machines” in the November 1961 issue of Scientific American. The project he refers to was, quite literally, called “Project Pigeon” (from 1944) which morphed into “Project Orcon” (from 1948 to 1951). I’ll let you investigate whatever parts of the history you might be interested in here but the key question on some people’s minds was exactly what Skinner asked: “Why not teach students by machine also?”

Certainly testers can see an echo of that today in: “Why not test software by machine also?”

The Pedigree of Machine Teaching

Let’s actually take a step back from that and consider the work of Sidney Pressey back in the 1920s. Pressey was a psychology professor at Ohio State University. He created a machine that was designed to test his students’ knowledge by presenting them with multiple-choice answers to questions.

How this worked is that the student responded by pressing one of five keys or knobs. If that choice was correct, the machine moved on to the next question. If incorrect, the student would try again. The machine kept track of errors so that if the student took the test a second time, the machine would only stop on questions that the student had previously got wrong.
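The behavior described above can be sketched in a few lines of Python. This is only an illustrative model under stated assumptions, not a reconstruction of the actual device: the class name `PresseyMachine`, the `run` method, the `choose` callback, and the sample answer key are all hypothetical names invented for this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class PresseyMachine:
    """Hypothetical model of the multiple-choice machine described above."""

    answer_key: list[int]  # the correct key (1 to 5) for each question
    missed: set[int] = field(default_factory=set)  # questions got wrong on the last pass

    def run(self, choose, only_missed=False):
        """Run one pass and return the number of wrong key presses.

        `choose(question, attempt)` stands in for the student and returns
        the key pressed. The machine only advances on a correct press.
        """
        if only_missed:
            to_ask = sorted(self.missed)  # second pass: stop only on earlier errors
        else:
            to_ask = range(len(self.answer_key))
        errors = 0
        newly_missed = set()
        for question in to_ask:
            attempt = 0
            while choose(question, attempt) != self.answer_key[question]:
                errors += 1
                newly_missed.add(question)
                attempt += 1
        self.missed = newly_missed
        return errors


# Invented answer key and an invented student who always presses key 1 first.
key = [2, 5, 1]
machine = PresseyMachine(key)
first_errors = machine.run(lambda q, attempt: 1 if attempt == 0 else key[q])
missed_after_first = set(machine.missed)

# Second pass: the machine stops only on the questions missed before.
second_errors = machine.run(lambda q, attempt: key[q], only_missed=True)
```

Note what the sketch does not contain: any notion of whether the student understood anything. It only compares a key press against a stored answer, which is the distinction the rest of this post keeps coming back to.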

Look at the wording on the ad at the bottom here.

This machine was functioning somewhat as a “teacher” (note the quotes) and a bit as a “tester” (again, note the quotes). This notion of “teaching” and “testing” was intertwined for the obvious reason: teachers administer tests.

This starts us on the path to introducing the term “automation” or “automated” in the context of “testing.” There was a desire to go from “manual instruction” to “programmed instruction” and here we can read “programmed” as “automated.”

Worth reading here is a particular publication by Sidney Pressey called “A Third and Fourth Contribution Toward the Coming ‘Industrial Revolution’ in Education.” This is from School and Society, volume 36, published in 1932. Note the reference to an “industrial revolution” which, as we know, was based in large part on mechanization that eventually led to automation.

Certainly testers could argue that the software industry has put an emphasis on an “industrial revolution in testing.”

Programmed (Not Manual?) Instruction

Okay, so let’s hop forward in time again.

In 1958, and before he wrote the previously mentioned articles, Skinner started using his new machines, which were an update on Pressey’s concept.

Instead of choosing from prepared answers, however, students using Skinner’s machine would write their own replies.

The programs Skinner used consisted of a sequence of up to several thousand small steps, or “frames” as they were called. These were printed on fan-folded paper and loaded into the machine. Rolling the paper forward exposed a single frame in a window. Each frame consisted of a sentence with one or more missing words. Basically something like this:

Realistically, you would get frames that were likely linked in concept or content to some degree. For example:

The student wrote their response, in pencil, on a separate roll of paper located in a smaller window to the right of the text. Pulling a knob on a slider located above the windows rolled both frame and response forward, uncovering the correct answer while at the same time covering the response with a transparent shield, to prevent the student from cheating by changing their answer.

The students graded their answers as correct or incorrect by inserting a pencil point in one of two holes and making a mark.

An interesting thing to note here is that feedback on the efficacy of the reinforcement was really important. That’s because the question being asked of this approach was: “Are students learning better?” Testers: think about whether people ask if automation means we are “testing better.”

With the Skinner frames, it was felt that too many errors by students — around, say, five to ten percent — would indicate that the programmed instruction being executed by the machine needed its own improvements. Why?

Well, the idea here was that so-called “Skinnerian programming” required instructional sequences so simple that the learner would hardly ever make a mistake. To write such programs that would accommodate that idea meant atomizing the subject matter into very tiny pieces. The idea behind making each step as small as possible was that it would maximize the frequency of reinforcement.

I’ll ask the testers in the crowd: what does that sound like?

Today, if we want a tool to “do testing” we have to essentially break down how to do something in a very atomic way. Let’s leave aside whether a tool can actually “do testing” for a moment. Even if you frame this as checks rather than tests, that’s fine. Either way you have to break down very atomically what the tool has to do.

Skinner considered his programs acting in, or rather through, the machine as a “tutor.”

Like a good tutor, the machine insists that a given point be understood before moving on.

His use of “understood” here is interesting, right? Is the machine really “insisting” that something be “understood”? Would the machine even recognize if it was? How would it distinguish understanding from a lucky guess? Or from a student who was simply given the answer?

This is what we have to deal with in terms of tooling that we say executes tests or checks. We have to tell it exactly what to look for. The tool certainly doesn’t understand a bug on its own. The tool certainly doesn’t understand what it’s actually doing.

Yet undeniably, historically speaking, this idea of a machine being some barometer that can utilize a human pattern of behavior has a pedigree from the earliest times that humans started to interact with computing technology, in particular around the ideas of “tutoring” or “testing.”

In Skinner’s aforementioned article, “Teaching Machines”, he said that for the first time we had a “true technology of education.” Notice the wording: a technology of education. Skinner further asserted:

Machines such as those we use at Harvard could be programmed to teach, in whole and in part, all the subjects taught in elementary and high school and many taught in college.

Wow! That’s a pretty grand vision, to say the least. And this vision stayed with him for quite some time. Consider that in Skinner’s “Programmed Instruction Revisited” (published in the Phi Delta Kappan in October 1986), he says:

With the help of teaching machines and programmed instruction, students could learn twice as much in the same time and with the same effort as in a standard classroom.

What is this starting to sound like, if you’re a tester? Automation, right? “Let’s automate! We can test twice as much in the same time and with the same effort. In fact, probably even less effort! It’s the ‘technology of testing’!”

And The Teachers … ?

What about the teachers in all this? Was everyone just asserting they had reached their shelf life and it was time to be consigned to the dust bin of history?

Well, according to proponents of programmed instruction, there would be benefits for teachers, too. Programmed instruction would “liberate” them from the drudgery of what Skinner referred to as “white-collar ditch-digging.”

We can almost imagine the argument being framed against “manual teaching” here, right? And, in fact, that terminology was used. Do away with all that manual labor! Freed from the likes of drilling (one form of testing in a teaching context), the handing out of routine information, and the correction of homework, the teacher could emerge, according to Skinner, “in his proper role as an indispensable human being.”

So the notion of “manual teaching” versus “programmed instruction,” which was framed as “automated teaching,” was a very real thing. Yet, supposedly, this automation would free up the human. Yet, of course, it rests on the assumption that any of the human part could be automated in the first place.

Now, to be sure, educators did sound off. The concern was that administrators would, in fact, use so-called “teaching machines” to replace teachers. After all, isn’t that what history showed happened in these contexts? Beyond that, there was a fear that the machines would reduce education itself to a rote, mechanical — dare we say algorithmic? — process. Schools could essentially become factories, just churning out some notion of “teaching” without any understanding of the nuance of teaching. And if that was the case, what did it say about the education of the kids coming out of those “factories”?

For their part, parents worried that all this “programmed instruction” was something that felt just a little bit like an animal psychology experiment. It didn’t help that a lot of the work went back to the training of pigeons. Sentiments of “I don’t want my child taught like a pigeon” were heard often.

The momentum was set, however.

Notice the wording there? “Robot teachers,” indeed.

The Automation Hype Accelerated

As the momentum increased, quite a few United States equipment manufacturers were designing teaching machines or actively drawing up plans to do so. Meanwhile, educational publishers became very worried that their textbooks would become obsolete and so they ramped up on the production of “programmed texts.” There are a lot of estimates you’ll find out there depending on what sources you reference, but by the end of 1962 it seems that at least five hundred or so programmed courses were available, and this was across a range of elementary, secondary, and college curriculum subjects.

Think about how many companies started to try and produce automated testing software as software test automation became the rage in the late 1980s and early 1990s. Think of how that amplified in the open source community. More tooling! Liberate the human! “Robot testers,” indeed.

And Yet … The Automation Hype Fizzled

The problem was: this programmed instruction turned out to be the equivalent of a fad. Interest died out. There’s a lot of history as to why and the above cited concerns were only some of the reasons but there was actually another one that was much more important.

One of the biggest problems with programmed instruction was simply that kids hated it. Think of the kids here as testers in our context for a second. What did they hate? Well, they felt the instruction was too rigid. There were too many tiny, elementary steps often leading to one — and only one — word answers. The kids were bored! They felt they weren’t actually learning anything.

Educators often found the students were good at memorization as a result of the approach, since memorization (read: scripted activity) was reinforced, but not as good at generalization, lateral thinking, and abductive inference.

How those kids felt is much like how perhaps a modern tester might feel if their only job is to write automated scripts that do rote and algorithmic checks of an application. Granted, there might be some thought in coming up with the scripts. But we know that’s not all testing is, right? We know we can’t rely on the equivalent of “one-word answers” when it comes to the assessment of what we’re evaluating. We can’t just write a script that “remembers” (read: regression test) what was the case previously, to the exclusion of so many other possible issues right now.

Jerome Bruner, who in 1960 co-founded the Center for Cognitive Studies at Harvard, wrote a hugely influential book that year called The Process of Education. On the outcome of the man-versus-machine debate, he says:

Clearly, the machine is not going to replace the teacher — indeed, it may create a demand for more and better teachers if the more onerous part of the teaching can be relegated to automatic devices.

Now that’s an interesting statement. Read “onerous part” as the part that is entirely algorithmic, such as checking answers on a given quiz or repeating drills.

This would perhaps be the equivalent today of saying “We may create a demand for more and better testers if the more onerous part of the testing can be relegated to automated tooling” where the “onerous part” might be the rote, algorithmic work that testers label under, say, regression testing.

Machine Instruction Moves to Tutoring

Still with me?

Okay, so, at around the same time Skinner was promoting his ideas but before they were implemented in schools, a guy named Norman Crowder embodied the idea of “branching” in a form of instructional books called TutorTexts.

Crowder invented a stand-alone desktop machine called an AutoTutor to deliver these programmed texts.

Since “tutoring” was largely focused on “testing”, you can almost read that as “AutoTester.”

The main distinction from Skinner’s approach is that Crowder’s allowed the aforementioned “branching.” Skinner machines were entirely linear; but Crowder’s would allow the response at one step to dictate what the next step should be, which might not be the “next” one necessarily.
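The difference between the two styles is easy to see in code. What follows is a minimal sketch, assuming a made-up frame format: the names `LINEAR`, `BRANCHING`, `run_linear`, and `run_branching`, along with the frame contents, are hypothetical and are not taken from any actual Skinner program or TutorText.

```python
# Hypothetical frame data, invented purely for illustration.
LINEAR = ["frame-1", "frame-2", "frame-3"]

BRANCHING = {
    "q1": {"prompt": "2 + 2 = ?", "next": {"4": "q2", "22": "remedial-1"}},
    "remedial-1": {"prompt": "Adding is not joining digits.", "next": {"ok": "q1"}},
    "q2": {"prompt": "Done.", "next": {}},
}


def run_linear(frames, responses):
    """Linear style: whatever the response, the next frame in order follows."""
    return [frame for frame, _response in zip(frames, responses)]


def run_branching(frames, start, responses):
    """Branching style: the response at each step selects the next frame."""
    path = [start]
    current = start
    for response in responses:
        following = frames[current]["next"].get(response)
        if following is None:  # no branch defined for this response, so stop
            break
        path.append(following)
        current = following
    return path


linear_path = run_linear(LINEAR, ["a", "b", "c"])
detour_path = run_branching(BRANCHING, "q1", ["22", "ok", "4"])
direct_path = run_branching(BRANCHING, "q1", ["4"])
```

In the linear run the responses never change the route. In the branching run, the wrong answer "22" sends the learner through the remedial frame and back to the question before moving on. Either way, every possible route had to be written out in advance by a human.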

Do you see how the interplay of “teaching” and “tutoring” and “testing” was aligning with this idea of automation? Are you starting to see where “automated testing” first had its life as a term and as a concept?

Harvey Long, at IBM, bought many of those Crowder-style machines for the company for a research lab they hosted internally. Long wrote a programmed text to teach FORTRAN, which was a relatively new language at the time and was making inroads. In July 1961, Long published his findings in a confidential in-house technical report (referred to as IBM Technical Report 00.817), which was called “Should the Digital Computer be a Teacher?”

Long’s report concluded that “automated teaching,” contrary to perhaps some viewpoints, was more than a passing fad and that, yes, the digital computer should be looked at as a teacher.

Incidentally, given the language mentioned there, it’s worth noting a 1962 FORTRAN manual. It was called … wait for it … FORTRAN Autotester.

Here we got the explicit notion of an “autotester” and thus some “automated testing,” in concept. The authors were Robert E. Smith and Dora E. Johnson and they said their work was:

Designed to emancipate the scientist and engineer from the need for the professional programmer.

Notice: “emancipate.” Just like we had “liberate” before.

But this is interesting, right? This was an idea of not even needing a programmer. The context of the book is a bit fascinating in its own right and I recommend folks check it out if curious. If you read the book, you’ll actually note the context is quite a bit different than what you might assume but I highlight it to show how the phrase “autotester” — and thus the idea of “automated testing” — was oddly situated in this time frame; it morphed quite a bit.

Incidentally, around the time of that book we also got G.F. Renfer’s “Automatic Program Testing” from the 1962 Proceedings of the 3rd Conference of the Computing and Data. Renfer’s work was mostly focused around attempts to automate the generation of test data through various forms of selection. As another historical note, it was in the previous year that the book Computer Programming Fundamentals came out, written by Herbert Leeds and Gerald Weinberg. This is one of the first known books to provide a chapter specifically on “Program Testing.”

So what I hope you see here is that there were convergences between “program testing,” as it started to be thought about, and the ideas of automation that I’ve been talking about here. The key thing that aligned it all was the idea of humans intersecting with technology.

Tutoring and Testing Become Automated

In 1966, Patrick Suppes (philosopher of science) and Richard Atkinson (professor of psychology) also worked on computer-aided instruction. Suppes did this with mathematics; Atkinson with basic reading. They, like others before them, created personal tutors. Suppes published an article in the September 1966 Scientific American called “The Uses of Computers in Education” and there he said:

The computer makes the individualization of instruction easier because it can be programmed to follow each student’s history of learning successes and failures and to use his past performance as a basis for selecting the new problems and new concepts to which he should be exposed next.

Also worth reading is the article “The Teacher and Computer-assisted Instruction.” This was from 1967 but was reprinted, in 1980, in “The Computer in the School: Tutor, Tool, Tutee”, which was edited by Robert Taylor as part of the Teachers College Press. There we learn that Suppes saw three levels to this program concept:

- Drill and practice programs. (Suppes: “merely supplements to a regular curriculum taught by a teacher”)

- Tutorial system. (Suppes: “to take over from the classroom teacher the main responsibility for instruction.”) Meaning teachers were still there to respond to questions but were mainly supervisors.

- Dialog system. Here the idea was the computer responds to questions by the students, doing away with the teacher altogether.

So we go from supplementing, to taking over, to replacing.

And Yet … All This Died Out Again

Much like the previous “autotutors” and “autotesters” and “programmed instruction”, this fad too passed. The reasons are many and historically interesting. But one part was interesting on its own. Suppes found that groups not using the “automated” approach did as well as or better than the groups using it. But why?

We learn about this from Andrew Molnar who wrote “Computers in Education: A Historical Perspective of the Unfinished Task”, published in THE Journal (volume 18, number 4) in November 1990. Molnar wrote:

What [Suppes] found was teachers who said, ‘We aren’t going to let those computers beat us, kids — we’re going to work like heck day and night, and we’re gonna do as much as we can to beat them.’ And they did as well, and that became a component of the sales pitch — that is, the idea was that, yes, teachers and students could do well, but they had to include special pressure, and in those cases where you didn’t have the teachers and you didn’t have that motivation, the technology did do it well.

Ah, now think about that mentality there, folks. That’s an interesting parallel with modern automation and how some conceive of it. Let’s reframe Molnar’s point for our context. Yes, if there is pressure to include and incorporate specialist testing, it will potentially do much better than any tooling you care to bring in. But if such motivation for specialist testing is lacking, or such specialist testers are absent, the tooling can do at least some things well; the algorithmic bits anyway.

And, crucially, if you think that testing is only about the “algorithmic bits” then, you might think: why do I need specialist testers at all? The “automated tester” (the tool) can do the job just as well.

That very distinction right there was the battleground, speaking a bit hyperbolically, between those who thought “manual teaching” could be fully automated and those who did not. And the reason the approaches failed to take hold was how people framed a counter-narrative.

So, again, by the mid 1970s all of the above — programmed instruction, autotesters, autotutors, computer-aided instruction — were seen, largely, as failures. The book Never Mind the Laptops by Bob Johnstone says:

Part of CAI’s mystique was based upon the idea that teaching could become scientific. But learning — even such an apparently simple subject as elementary mathematics — turned out to be much more complex than anyone had anticipated.

Indeed! And the same could be argued for testing. It’s often more complex than people who are not specialist testers might at first think.

One interesting note about the failures of all the systems was the often poor quality of the programming texts that were created. James Martin and Adrian Norman in “The Computerized Society” (published in 1970) said:

Programmers, hurriedly attempting to demonstrate the new machines and infatuated with the ease with which they can make their own words appear on the screen, are producing programs as bad as the home movies of an amateur.

Testers? What does that sound like? People who get so caught up in the automation that they just create scripts without necessarily a consideration to the qualities of those scripts.

John Kemeny, one of the inventors of the Dartmouth Time-Sharing System and the BASIC programming language, was one of the more vocal to speak out against computer-aided instruction as somehow replacing the teaching function. In his 1972 book Man and Computer he says:

There are very few CAI applications in which a computer can give the same quality of education that a human teacher can provide …. In writing a CAI program the instructor must anticipate every possible response to a long series of questions. The more freedom provided for the student, the harder it is to anticipate the responses. Therefore there is an almost irresistible temptation to straight-jacket the student.

Yes, much as, we might argue, an automated tool that “does testing” really straight-jackets the act of testing. Why? Because it’s not really capable of “doing testing” any more than those “programmed instructions” were capable of “doing teaching.”

Kemeny felt that computer-aided instruction might be good for “rote learning” and “mechanical drill” but, even then, there were limitations, since students could be poor spellers or poor typists. But he did note one thing of interest: writing programs turned out to be a very good way to help develop thinking skills. As he said:

The student learns an enormous amount by being forced to teach the computer how to solve a given problem.

And, see, that’s an interesting point and it’s one that could have been used previously with programmed texts to frame the idea of supporting teachers but never replacing them. Consider this in our technology context. Do we ever teach the automation tool how to solve a problem? No! We don’t. But the very act of automating, if sufficiently guided, should help instruct people on the differences between reflective human beings and unreflective tooling.

This is one of the ways testers should be combatting the technocracy of automation in the specialist testing field rather than worrying about “tests and checks” or focusing on how there is no such thing as “manual testing.”

Notice how historically such arguments of “There is no manual teaching!” would have been ineffective. If teachers said: “Those tools don’t test, they check”, it would have been a fruitless exercise.

Programmatic Testing Started To Look At Automation

On the programmatic side of this history, in 1972, we got Program Test Methods, edited by William Hetzel. The book came out of a Chapel Hill Symposium, organized by the University of North Carolina and held in June of that year. It focuses on the problems in testing and validation.

In August of 1975, we got Donald Reifer’s “Automated Aids for Reliable Software”, the synopsis of which was:

Recent investigations on the use of automation to realize the twin objectives of cost reduction and reliability improvement for computer programs developed for the U. S. Air Force are reported. The concepts of reliability and automation as they pertain to software are explained. Then, over twenty automated tools and techniques aids identified by this investigation are described and categorized.

Worth noting here is the economy-of-scale argument: improving reliability while lowering the cost of doing so. This will come up in the teaching context in a bit.

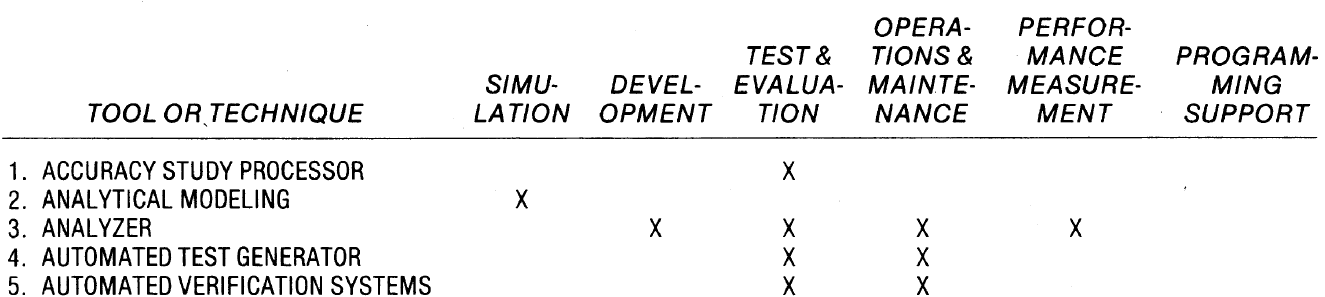

Also, in 1977, we got “A Glossary of Software Tools & Techniques” written by Donald Reifer along with Stephen Trattner. They talk about “automated aids.”

They also break down various tools and techniques.

So, for example, they speak of the following:

- “Automated test generator. A computer program that accepts inputs specifying a test scenario in some special language, generates the exact computer inputs, and determines the expected results.”

- “Automated verification systems. Computer programs that instrument the source code by generating and inserting counters at strategic points to provide measures of test effectiveness.”
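To make those two glossary entries a bit more concrete, here is a minimal Python sketch. It is purely my own illustration and not anything from Reifer and Trattner: the `classify` function, the `hits` counters, and the scenario format are all hypothetical. It also simplifies things, since the scenario supplies the expected results directly rather than the generator working them out.

```python
from collections import Counter

# Hypothetical probe counters, standing in for "counters at strategic points".
hits = Counter()

def classify(n):
    """Toy function under test, hand-instrumented with one probe per branch."""
    if n < 0:
        hits["negative"] += 1
        return "negative"
    if n == 0:
        hits["zero"] += 1
        return "zero"
    hits["positive"] += 1
    return "positive"

def generate_tests(scenario):
    """Expand a small scenario description into (input, expected result) pairs."""
    return [(value, label) for label, values in scenario.items() for value in values]

def run(scenario):
    """Run the generated tests; return the failures and the fraction of branches hit."""
    hits.clear()
    failures = [(v, exp) for v, exp in generate_tests(scenario) if classify(v) != exp]
    branches = ("negative", "zero", "positive")
    covered = sum(1 for b in branches if hits[b] > 0)
    return failures, covered / len(branches)
```

Calling `run({"negative": [-5, -1], "positive": [3]})` reports no failures but only two of the three branches exercised. That coverage figure is the "measure of test effectiveness" idea in miniature: the counters say what the tests touched, not whether the tests were worth running.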

These two areas — the purely programmatic and the teaching/education context — were intersecting in pretty fascinating ways as the technology industry started to accelerate.

Automation Didn’t Go Away

While the focus on computer-aided instruction and thus a particular form of automated testing started to wane a bit, the idea of “automation” replacing “manual” aspects of human activity was still very much present.

Consider Douglas Parkhill, who published The Challenge of the Computer Utility in 1966. There he talks about “automatic publishing” by which he meant an author “writing at the keyboard of his personal utility console.” This was distinct from “manual publishing,” which was literally writing something out without the aid of a machine.

John Kemeny talked about how “before very long dictated letters will be automatically transcribed by computer.” Thus did we have the idea of “automated transcription” to replace “manual transcription.”

As yet another thing to consider, the Interuniversity Communications Council, known as EDUCOM and launched in 1965, aimed for collaboration in, as they put it:

All information processing activities . . . as examples, computerized programmed instruction, library automation, educational television and radio, and the use of computers in university administration and in clinical practice.

You can read more about this in the article “EDUCOM Means Communication” in the January 1966 EDUCOM: Bulletin of the Interuniversity Communications Council, edited by Carle Hodge. The key thing to note there is the wording of “library automation,” which would replace “manual librarian” activities.

Consider, as another example, a report published by the U.S. Department of Health, Education, and Welfare in June of 1967. This report was titled “The Production and Evaluation of Three Computer-based Economics Games for the Sixth Grade, Final Report” by Richard L. Wing. The report shows a lot of the efforts and research into, as the report puts it, “automation in education.”

In fact, we can even step back once more to the late 1950s. Specifically, Don Bitzer, in 1959, came up with PLATO (Programmed Logic for Automatic Teaching Operations).

Obviously the “Automatic Teaching” part stands out, right? But this was actually quite different than the Skinnerian stuff we looked at earlier, at least as it evolved. Remember one of the complaints about computer-aided instruction was the writing of the scripts or the programmed texts, the quality of which was often perceived to be lacking.

In the PLATO context, a graduate biologist named Paul Tenczar realized that writing what was coming to be called “courseware” was still a terrible process, hence the low quality of the outputs. So he designed a new language for creating courseware and called it TUTOR. As the “Mark IV” iteration of PLATO was being considered for schools in 1972, the so-called return-on-investment argument kicked into gear.

Bitzer said that to “compete” with “manual (human) instruction,” you had to deliver the automation at a dollar per student-hour. Thus was an economy-of-scale argument put in place similar to what we saw in the programmatic context. As Bitzer and some of his associates worked on this idea with the Control Data Corporation, it was never billed as a replacement for teachers, and yet it sort of was. One executive said:

If you want to improve youngsters one grade level in reading, our PLATO program with teacher supervision can do it up to four times faster and for 40 percent less expense than teachers alone.

You can see the article “Machine of the Year: The Computer Moves In” in Time from January 1983 for details on that. Note that he is mentioning “teacher supervision” at least, so the teacher wasn’t quite replaced.

But educators were worried because this was still billed as a form of “automatic teaching,” and that idea had been fought successfully enough in the past that getting traction on it was very difficult. Testers should take note of that. It’s quite the opposite of the situation we face with automation in our context. I’ll come back to that near the end of this post.

Yet Tenczar and others kept insisting that the goal was never to replace teachers but rather to augment them and/or support them. The idea was to allow the unique abilities of a digital tool to support what the teachers were doing. Tenczar said:

We saw this as a great boon for teachers. It could free up teachers from the mundane repetitive process of much of teaching to address single students for their unique problems.

Again note the wording: “free up.” Just as before we had “liberate” and “emancipate.” And note how these are always framed around negatives: “mundane”, “repetitive”, “onerous”, “white-collar ditch-digging” and so on.

History Repeated A Bit

These discussions, in the 1970s into the 1980s, were the same discussions that happened in the 1950s and 1960s. It’s almost like perhaps people hadn’t learned from history how to frame arguments around automation in terms of how to sell it as a viable concept. Teachers and educators, on the other hand, had learned exactly how to frame arguments to refute the unreflective acceptance of the “automation of education.”

And I would argue that is exactly the problem I see with many testers today. They, too, haven’t learned from history. Thus they fight the same battles from history without really moving the needle forward. Had teachers acted then like testers do today, the education context of our society, at least in the United States, would probably be radically different — and not for the better.

Eventually we, as a society, largely got past the ideas of programmed instruction and computer-aided instruction — and thus “automated teaching” — but we still do leverage technology (like iPads and focused educational apps) in the context of education. The teaching discipline has a whole lot of struggles, to be sure, but having to defend itself against being “automated away” is not really one of them at this point.

Personal aside: teachers are still, I believe, devalued much as testers believe they are devalued. Testers, however, seem to be aligning around the idea that the use of “manual” in the phrase “manual testing” is a large part of why that is, whereas teachers do not have that same type of concern.

There’s a metric ton of history here, as you can probably see. I’ll have to leave it to you, dear reader, to investigate, should you find it interesting. As a starting point, perhaps look at the evolution beyond the history I left off with here, starting around 1986 or so and into the 1990s.

And then notice that the late 1980s through 1990s are when we started to get an influx of “automated testing” tooling, like 1985’s AutoTester tool by Randy and Linda Hayes or 1986’s preVue from the Performance Awareness Corporation. The tooling kept on coming with Compuware’s MVS PLAYBACK and TestPartner, Segue Software’s QA Partner (eventually becoming SilkTest), IBM’s Rational Robot, Mercury Interactive’s WinRunner (eventually becoming QuickTest Professional) and so on.

So … What Happened?

The test specialty as a whole, and I most certainly lump myself in with this, did not respond well to the rise of “automated testing.” There was not enough caution, and there was not enough of a framing narrative to combat the apparent ease of replacing “manual testing” with a tool.

This lack of a good narrative, combined with the presence of a strongly persuasive economy-of-scale argument, only accelerated as the open source movement gained traction and such tooling moved into that venue, with Selenium being a watershed moment in 2004.

We’re facing another version of this challenge with artificial intelligence and machine learning solutions framed around so-called “testing tools.” I wrote about that before when I asked “Will AI perform testing?”. I also posed an AI test challenge and a follow-up suggesting the challenge was not met. I should note I raised the ire of many an AI tool evangelist with this, many of whom still won’t speak to me.

Learn From History; Frame Narratives Accordingly

On the one hand there’s comfort here. There’s a relatively unbroken line, stretching from the sixteenth century to now, that has worked to replace human activity and eventually attach the word “automation” or “automated” to that.

On the other hand, there’s discomfort here in that there’s a lot of history to learn from in terms of how to frame counter-arguments to a technocracy. But my strong opinion is that testers seem to have abandoned just about all of those historical lessons and have returned to arguments that have rarely, if ever, worked.

So I return to the point I made in calling for testers to craft a new narrative. Testers have abdicated the discussion to others — mostly developers — on the ambit of testing. I have watched an industry shift, particularly since around 2009, to thinking that many testers are needless contrarians (at best), fractious sophists (as a “charitable” middle ground), or borderline delusional (at worst).

Even worse, these testers are simply repeating their talking points only to each other, providing a wonderful echo chamber, but not doing much to convince the people they presumably most want to convince.

If you look at the history I describe above — and I tried to provide as many broad references as I could to get you started — and if you compare that with the history we’re putting in place now, the parallels are undeniable. The technological context is different, for sure. But the way to frame a narrative to combat it is exactly the same.

So my call to fellow specialist testers: let’s understand history. Let’s learn from history. And let’s apply the lessons of history. Let’s not get stuck in a prison of our own making.

Whew! This was a long read, folks, and I hope, if you stuck it out, that it provided you with some interesting tidbits to think about.