This post will be a bit of a departure from my normal posting style, although it's very much in line with the idea of stories that take place in my testing career.

In fact, this post marks the start of a series of posts I’ll be putting up called “Thinking About AI.” These will be an evolution of my recent “AI Testing” posts.

My goal with this new series will be to provide a basis for how to reason about the systems that are likely to pervade even more aspects of our lives than they already do. While “Thinking About AI” will serve as a thematic throughline, any given post will either stand alone or be part of a series within that broad theme.

But before getting to those posts, I’ll focus here on the broader backdrop that underlies them.

All of this has parallels in various aspects of my personal and professional life. And while I realize that aspect is likely interesting to no one except me, it might help those who wrestle with questions like “Am I in the right career? Am I working on things that truly matter to me? What if the things that truly matter to me are somewhat orthogonal to what my ‘career’ seems to be?”

If you want to see one place where I tried to frame some of this, feel free to check out my post “Why Have You Stayed in Testing?”

A Basis in Experimentation …

Regular readers may know that I talk about games and gaming quite a bit. I do that because I believe, based on a whole lot of empirical evidence, that much good test thinking comes out of considering the design and play of games.

By “test thinking,” I mean “thinking and acting experimentally.”

Learning how to test games is often not very distinct from learning how to play them, because both involve a heavy amount of experimentation. I find that the same dynamic plays out when thinking about, and engaging with, artificial intelligence.

… But A Focus on Computation

The focus here in this post, and the “Thinking About AI” series, is really more on computation.

Arguably computer science should have been called computing science. Or, at the very least, perhaps there should have been a shift to that terminology as the technology evolved beyond the hardware itself and toward what the software running on that hardware actually did.

When I say “computation,” please understand that this isn’t just about the mechanics of programming or coding. It’s about computational design, whose latest incarnation is most visible in applications of artificial intelligence, of which machine learning is one subset.

Computation is what’s transforming the design of our products and services. This is the intersection of humans and technology, an area that’s always fascinated me. Design is what will determine how humanizing or dehumanizing that intersection is.

So, by that logic, my following post series should perhaps be called “Thinking About Computation.”

Yet I put the focus on artificial intelligence because that’s where a lot of current interest is. It’s certainly the area where we have the most hyperbole, both for and against. It’s also the area where people are most likely to be displaced in the not-too-distant future.

When Computing Was Human

It’s probably worth keeping in mind that the first computers weren’t machines. They were instead human beings. They were humans who computed, by which was meant “worked with numbers.”

Consider that way back in 1613 a poet named Richard Brathwait, in his The Yong Mans Gleanings, said:

What art thou (O Man) and from whence hadst thou thy beginning? What matter art thou made of, that thou promisest to thy selfe length of daies: or to thy posterity continuance. I haue read the truest computer of Times, and the best Arithmetician that euer breathed, and he reduceth thy dayes into a short number: The daies of Man are threescore and ten.

Did you spot that interesting word in there? Computer. I’ll say again: this passage was from 1613.

So who is this “best Arithmetician” that Brathwait is referring to here? Some have argued that he was conceiving of the “computer of Times” as a divine being that would be able to calculate the exact length of a person’s life. Others suggest that Brathwait was literally referring to a person who’s very good at arithmetic.

As an interesting related note, the mathematician Gottfried Leibniz said this:

If controversies were to arise, there would be no more need of disputation between two philosophers than between two calculators. For it would suffice them to take their pencils in their hands and to sit down at the abacus, and to say to each other . . . Let us calculate.

That was in his 1685 book The Art of Discovery. When he says “calculators,” he’s referring to people, and “calculate” is an action they perform. Note that he didn’t use the term “computers” or “compute.” This makes it all the more interesting that Brathwait seemingly did hit upon that term.

As far as we know, Brathwait was the first to write the word “computer,” even if we’re not quite sure what he meant by it. The Oxford English Dictionary does note an earlier usage: apparently the term was first used verbally back in 1579. No details are provided, however, so, at least to my knowledge, there’s no way to corroborate that.

That said, the Oxford English Dictionary links that verbal use from 1579 to “arithmetical or mathematical reckoning,” which was certainly a task done by people. That sense of the word appears in book six of Sir Thomas Browne’s Pseudodoxia Epidemica from 1646 as well as in Jonathan Swift’s A Tale of a Tub from 1704.

In 1895, the Century Dictionary defined a “computer” as:

One who computes; a reckoner; a calculator.

If we take a side trip into etymology, the prefix com- comes from Latin and means “together.” The rest of the word derives from the Latin verb putare, which means “to reckon,” “to think,” or “to trim.” Some have proposed that computer thus carried the sense of a “setting to rights” or a “reckoning up.”

If you’re curious, I talked quite a bit about human computers and the context of automation in my history of automated testing post.

What that post showed, and what’s important here, is that eventually computing machines came along to replace the human computers. A relevant idea that really resonated with me comes from John Maeda in his book How To Speak Machine: Computational Thinking for the Rest of Us:

To remain connected to the humanity that can easily be rendered invisible when typing away, expressionless, in front of a metallic box, I try to keep in mind the many people who first served the role of computing “machinery” … It reminds us of the intrinsically human past we share with the machines of today.

Indeed! And as we try to build computing solutions that “act more like us,” “think more like us,” or “learn more like us,” I think Maeda’s reminder takes on added significance.

Computing Eras

For the rest of this post, I want to talk a little about the idea of specific computing eras. Even more specifically, I want to bring up the idea of a particular bridging “era” that separates two of what are broadly recognized as the primary eras of computing.

Now, perhaps oddly enough, this all stems from a lot of research I’ve been doing into ludology and narratology in terms of how gaming evolved in the context of computing. The bridging “era” that I refer to provided a bit of a crucible. In my research I found that this crucible dramatically shaped the future development of simulations and games and thus had an outsized impact on ludic and narrative aspects, which have long fascinated me.

That matters here because a lot of this work intersected with research into artificial intelligence, such as algorithms being able to play games as well as a human could. This was, in fact, one of the early barometers that people proposed to understand if we had truly created an artificial intelligence.

Here I recommend the book Deep Thinking: Where Machine Intelligence Ends and Human Creativity Begins by Garry Kasparov. Yes, that Kasparov: the one who was defeated by IBM’s Deep Blue computer in a 1997 chess match.

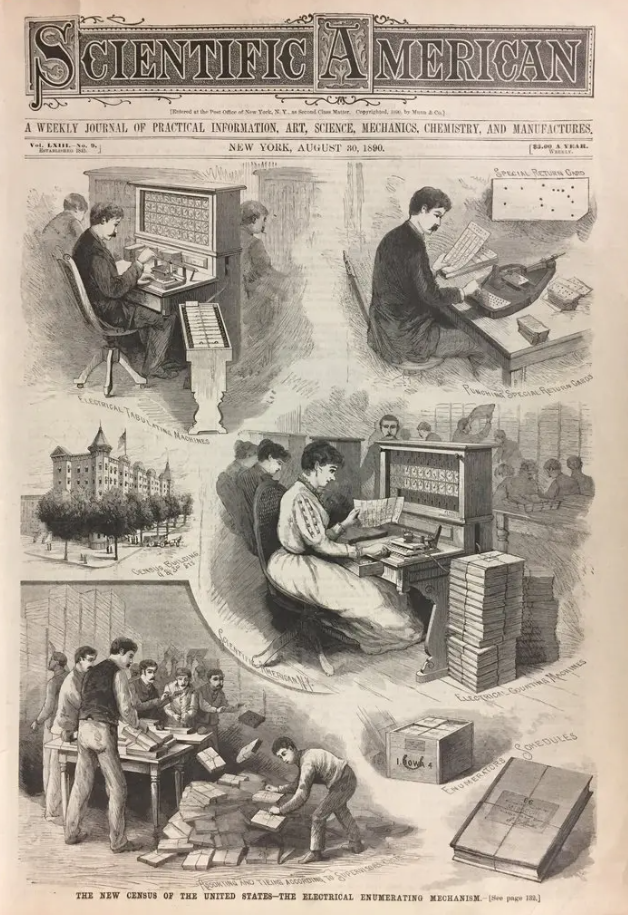

The book Computer: A History of the Information Machine presents an interesting way to frame some history around the computer itself and I’ll expand on that here a bit. In August of 1890, the magazine Scientific American put on its cover a montage of the equipment constituting the new punched-card tabulating system for processing the United States Census.

In January of 1950, the cover of Time Magazine showed an anthropomorphized image of a computer wearing a Navy captain’s hat. This was part of a story about a calculator built at Harvard University for the United States Navy.

In January of 1983, Time Magazine, instead of choosing its usual “Man of the Year,” interestingly named the personal computer its “Machine of the Year.”

Following those threads leads you on an interesting journey through how the computer was viewed during a certain period of history. Those covers also reflected the growing perceived importance of computing.

Going through that history, you see there was a time when large organizations began adopting computing technology at an ever-increasing rate. This was done as they saw such technology as the solution to their information- and data-processing needs.

The Computer Context

By the end of the nineteenth century, desk calculators were being mass-produced and installed as standard office equipment. This took place first in large corporations, then later in progressively smaller offices and, finally, in retail contexts.

While this was going on, the punched-card tabulating system developed to let the United States government cope with its 1890 census data gained wide commercial use in the first half of the twentieth century.

Analog Computers

Another thread winding through the late nineteenth century into the early twentieth was the emergence of a particular form of computing, known as analog computing. This reached its peak in the 1920s and 1930s.

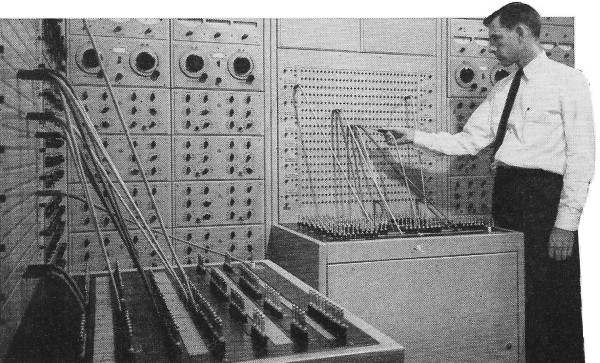

Analog computing was a method of computation that involved using physical models and devices to perform calculations by directly manipulating continuous signals. Engineers would build electrical or mechanical systems that represented the mathematical equations involved in a particular problem. Once that was done, they would then use these systems to measure the values required to perform the desired calculations.

Analog computers were utilized in various fields, including the design of emerging electric power networks, where the nature of this kind of computing could help analyze power flow and stability.

Yet, there was a downside. The analog technologies were characterized by slow computation times. This was partly due to the physical nature of the devices and the fact that they operated on continuous signals, which required time for various processes to start and finish.

Adding to that problem, analog computers often required skilled operators or engineers to set up and calibrate the devices for specific tasks.

Adjusting the physical components to represent the desired mathematical model was a manual process that demanded expertise. This human involvement obviously made analog computing less automated and more labor-intensive although, on the plus side, it did mean that at least someone had a pretty good idea of how the thing was actually working.

Another big challenge, however, was that analog computers were typically designed for specialized tasks. Or, rather, one particular specialized task. Engineers would construct analog systems tailored to solve particular problems or handle specific types of calculations. While the machines were really good at those dedicated tasks, they weren’t well-suited for general-purpose computing or solving a wide range of diverse problems.

Imagine if you had to wire up your computer a particular way to read your email. Then you had to rewire it entirely differently to browse the web. And rewire everything yet again to watch a video. Or you just had to get three different computers that did each of those things.

As a consequence of being designed for specific purposes, an analog computer that was optimized for one type of computation might not be suitable for other types of computations. If you needed to perform a different kind of computation, you were pretty much stuck with getting an entirely different analog technology with a different configuration.

Stored-Program Computers

During the Second World War, there was a significant demand for advanced computing capabilities to support various military efforts. The limitations of analog computing, such as those slow computation times and the need for human intervention, lit a fire under some researchers, often encouraged and supported by the government, to explore new approaches for more powerful and versatile computing technologies.

The breakthrough came with the creation of the first electronic, stored-program computers, which allowed for the storage of instructions and data in electronic memory.

This innovation paved the way for automatic and programmable computing, eliminating the need for human intervention during calculations and enabling computers to execute a variety of tasks.
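To make the stored-program idea a little more concrete, here’s a minimal sketch in Python of a toy machine whose single memory holds both instructions and data. The instruction set (LOAD, ADD, SUB, STORE, JUMP_IF_POS, HALT) and the function name run are my own hypothetical inventions for illustration; they aren’t modeled on the actual instruction set of any historical machine. The point is simply that changing what the machine does means loading different contents into memory, not rewiring anything.

```python
from typing import List, Tuple, Union

# Hypothetical toy instruction set, invented for illustration only.
Instruction = Tuple[str, int]   # (opcode, memory address)
Cell = Union[Instruction, int]  # a memory cell holds an instruction or a number


def run(program: List[Cell], max_steps: int = 1000) -> List[Cell]:
    """Run a fetch-decode-execute loop over one memory that holds code and data.

    Returns the final state of memory; the input list is left unchanged.
    """
    memory = list(program)  # work on a copy so the stored program can be rerun
    acc = 0  # accumulator register
    pc = 0   # program counter: address of the next instruction
    for _ in range(max_steps):
        op, addr = memory[pc]  # fetch and decode the next instruction
        pc += 1
        if op == "LOAD":
            acc = memory[addr]
        elif op == "ADD":
            acc += memory[addr]
        elif op == "SUB":
            acc -= memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "JUMP_IF_POS":
            if acc > 0:
                pc = addr
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r}")
    raise RuntimeError("step limit reached without HALT")


# Program 1: add the numbers stored at addresses 4 and 5, store the sum at 6.
add_program: List[Cell] = [
    ("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", 0),
    2, 3, 0,
]

# Program 2: multiply 4 by 3 through repeated addition, result at address 8.
multiply_program: List[Cell] = [
    ("LOAD", 8), ("ADD", 9), ("STORE", 8),       # result += x
    ("LOAD", 10), ("SUB", 11), ("STORE", 10),    # counter -= 1
    ("JUMP_IF_POS", 0),                          # loop while counter > 0
    ("HALT", 0),
    0, 4, 3, 1,                                  # result, x, counter, one
]

print(run(add_program)[6])       # prints 5
print(run(multiply_program)[8])  # prints 12
```

Both programs run on the same, unchanged run loop; only the contents of memory differ. That’s the contrast with the analog machines described earlier, where a different problem often meant a different configuration or an entirely different machine.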

The development of the first electronic computers gained momentum during and after the war. By the early 1950s, several of these machines had been successfully built and put into use.

Those machines found applications in various fields, including military facilities for cryptography and code-breaking, atomic energy laboratories for simulations, aerospace manufacturers for engineering calculations, and universities for scientific research.

Well-known early examples of electronic computers from this era include ENIAC (1945), which was originally programmed by setting switches and plugging cables rather than by a stored program, and the stored-program machines EDSAC (1949) and UNIVAC I (1951). Crude as they may seem today, these machines were the pioneers of digital computing and established the foundation for the rapid advancement of computing technology in the years to come. They provided the basis for general-purpose computing.

General Computers

Over this same period, businesses emerged that specialized in the construction of relevant computers. Here by “relevant” I mean computers that were designed to cater to the needs of the engineering, scientific and, eventually, commercial markets. As a result, the application of computers transitioned from performing complex calculations to encompassing data-processing and accounting as well.

These businesses that were creating computing technology focused on government agencies, insurance companies, and large manufacturers as their initial primary target markets.

Thus the early development of the computer industry marked a significant transformation in the function of computers. From being solely a scientific instrument used for mathematical computation, computers evolved into machines that could process and manage business data as well as other forms of information. We started to see a democratization of the computer.

This democratization encouraged experimentation and companies began to create innovations focused on improving the various components of these machines. The result was the creation of technologies that allowed for faster processing speeds, increased information-storage capacities, better price/performance ratios, higher reliability, and a reduced need for maintenance.

So that was the computer context. This is what enabled a computation context, so let’s consider that next.

The Computing Context

As a result of the above dissemination of computers to a wider range of contexts, and thus skill sets, innovation began to take place in the operational modes of these machines. This included the creation of high-level programming languages, real-time computing, time-sharing, wide-area networking, and various types of human-computer user interfaces.

These interfaces ranged from text-based terminals to graphics-based screens, enabling individuals to interact with computers in a more intuitive and user-friendly manner.

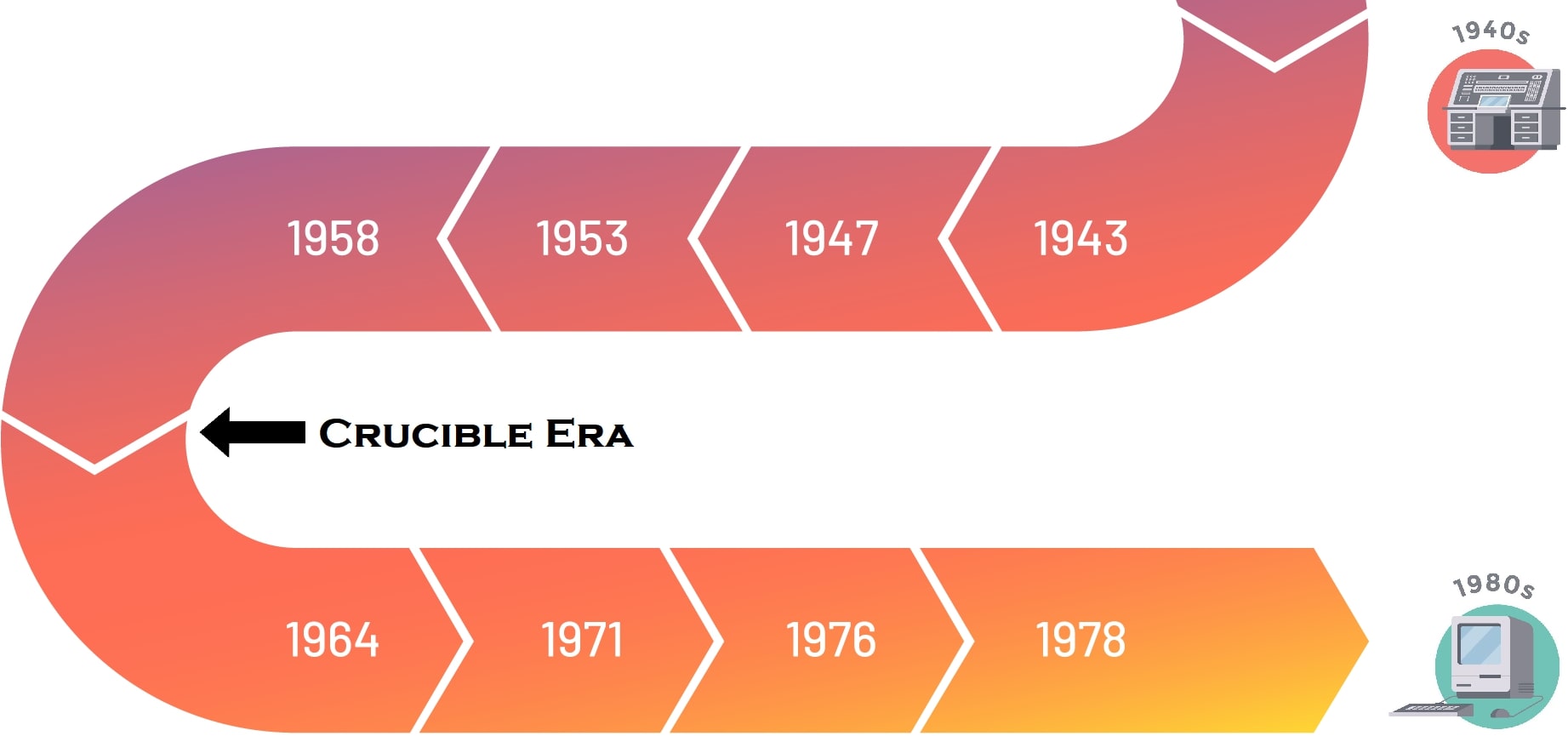

So, bringing all this together, we had what we might call the mainframe era, which lasted from about 1945 to 1980. The personal computer era began tentatively around 1975, ramped up in 1977, and has never stopped.

Between the mainframe and personal computer eras, however, was the transformational period of the mid-1960s and early 1970s. This was the period that introduced time-sharing, minicomputers, and microelectronics, as well as the BASIC programming language. The latter was important because it democratized computing across a wide range of age groups and skill levels.

This, I believe, was a crucible era.

The Crucible Era

I take this term from an interesting book called Crucible of Faith by Philip Jenkins.

Just to provide some context, in his book Jenkins is talking about the religious heritage of much of the Western world, which has to do with binaries like angels and demons, Messiah and Satan, hell and judgment, afterlife and resurrection, as well as ultimate rewards and punishments.

During the period covered by the Hebrew scriptures — up to around 400 BCE or so — few of those ideas existed in what we would now call “Jewish” thought and those ideas that did exist were not prominent at all. Yet by the start of the Common Era, these concepts were thoroughly integrated and acclimatized into what I’ll call the Judahist religious worldview.

It’s historical parallax to use the term “Jewish” for much of religious history. Here, in contrast to Jenkins, I use the terminology of Judahist and Judahism to make that distinction clear. Not as relevant for this post, to be sure, but a matter of historical accuracy that I refuse to abandon any time I talk about this.

Judaism as we know it historically is the complex of religious beliefs and practices that were formulated and proclaimed by rabbinic scholars in the early centuries of the Common Era and developed over a long period in the Talmud.

Those scholars were working well after the revolt against Rome and the loss of the Jerusalem Temple in 70 CE. By that time the binaries were well in place and settled. But they became settled as Judahism was morphing into the early forms of Judaism.

In fact, Judahism was actually a complex tessellation of many beliefs and viewpoints. While there was a common spiritual leitmotif — Yahweh-only in belief, Temple-centered in liturgy, Judah-dominated in ethnicity — there were vast differences in detail. Yet, after the destruction in 70 CE, only two variants of those beliefs survived in some form: a particular variant of the Yeshua (Jesus) faith that became Christianity and a particular form of Pharisaism that became Rabbinic (Talmudic) Judaism.

What’s historically interesting is that virtually every component of those binaries entered the Judahist world only in the two or three centuries before the Common Era. This was just as the “Judahist” could be said to be shifting to the “Jewish.” In fact, according to Jenkins, we can identify a critical moment of transformation around the year 250 BCE.

Specifically, there were two very active centuries, from 250 through 50 BCE, when all those binaries I mentioned came to be front-and-center and when the universe came to be thought of as a battleground between the cosmic forces of Good and Evil, a binary that encompassed all the others.

In his book, Jenkins describes this period as a “fiery crucible of values, faiths, and ideas, from which emerged wholly new religious syntheses.” And he refers to this as the “Crucible era.” The pace and the intensity of change were at their height during a generation or so right in the middle of this period, which would mean roughly between 170 and 140 BCE.

It was absolutely fascinating to me to see that we could lock down such a specific time frame for the emergence of so many ideas. At some point I realized that this was very much a parallel to what happened in computing.

The Computing Crucible

The crucible period of roughly 1965 to 1975 not only allowed the two eras of “mainframe” and “personal” computing to be bridged but also provided the basis for the development of software technology, the professionalization of programming, and the emergence of a commercial software industry.

The personal computer era itself can be broken down into hobby computers, home computers, and the broadly named personal computer. But before even that, there was the interesting rise of what we might call “people’s computing.” This was an era that began to overlap with the personal computer era but certainly saw its genesis before that, as computing moved from specialized practitioners to the more general public.

Unfortunately, this isn’t the place, and probably not even the blog, to be talking about the fascinating era of people’s computing. So I’ll have to abandon that thread here. Rest assured, however, I could talk (or post!) for days on end about this. In fact, I’ll do one follow-on post to this one to cover this idea briefly, because there actually is some connection here to testing and artificial intelligence.

And that brings up a good point. Has any of this really had anything to do with testing? You know, since this is, ostensibly, a blog about testing?

Testing: The Social and the Human Aspect

Our technologies have social implications. The impact of our technologies on society never could be, and still can’t be, evaluated independently of the technologies themselves. Nor can the mechanisms underlying these technologies be fully analyzed without comprehensively considering their social implications.

Here I’ll quote Greg Egan from his book Distress:

Once people ceased to understand how the machines around them actually functioned, the world they inhabited began to dissolve into an incomprehensible dreamscape. Technology moved beyond control, beyond discussion, evoking only worship or loathing, dependence or alienation.

Part of what testing does is help people understand how things are actually functioning. One focal point of testing is helping us make sure that this functioning doesn’t move beyond our control or, at the very least, reducing the contexts in which that can happen.

That’s a large part of why I’ve chosen to be a quality and test specialist in my career: to make sure, to the extent that I can, that technology provides as humanizing an interface as possible, that technology does not move beyond our control, and that we consider the social implications and ramifications of the technology we intersect with.

This is very much in line with what “people’s computing” in the 1960s and into the 1970s was all about. I suppose you could argue that’s a professional philosophy of mine rooted in a particular ethic: making sure the technology we adopt augments us and doesn’t replace us. And while augmenting us, it does so with as much of a humanizing interface as we can manage.

My Personal Ethos

This also leads into some personal statements of belief.

I’ve already talked about my view of the ethos of testing as well as my perceived ethical mandate for what I termed “mistake specialists” in this context.

That latter term is not mine. Daniel Dennett refers to philosophers as “mistake specialists” in his book Intuition Pumps and Other Tools for Thinking.

Along the same lines as my referenced posts, but speaking quite a bit more broadly, I’ve come to believe that the practical “meaning of life” may be no more than this: develop a livelihood that gives us shelter from the elements, easy access to sustenance, a means of transportation, and whatever creature comforts may be available to us.

As part of my youth ministry, I talk about such ideas and how maybe the “meaning” really comes in when we conduct our lives in such a way that will allow others to do the exact same things.

However, there’s a wider ambit to this for me. Doing the above allows something else to happen.

I believe part of our spiritual “meaning” — note: spiritual, not necessarily religious — can come from the satisfaction of innate human curiosity and the attendant appreciation and awe of anything that’s bigger than us. That can be the nature of divinity; that can be the workings of nature or the universe; that can be the warp and weft of history; or that can be something like the rise of technology.

A book that drew a lot of this together for me is TechGnosis: Myth, Magic, and Mysticism in the Age of Information by Erik Davis. I highly recommend this book. Another book I highly recommend is Authors of the Impossible: The Paranormal and the Sacred by Jeffrey Kripal.

I very strongly believe that when curiosity and appreciation work together, they form a feedback loop in which the very act of understanding and of coming to a belief is a large part of our meaning.

Two more books that guided my focus on this are Surpassing Wonder: The Invention of the Bible and the Talmuds by Donald Akenson (for the curiosity) and Abiding Astonishment: Psalms, Modernity, and the Making of History by Walter Brueggemann (for the appreciation).

I find there’s a sheer delight in reading and learning what other people have thought about the world and their place in it. I believe very much in the idea of the “sacred encounter” by which I mean the shared human joy in the activity of inquiry, the engagement of intellectual discourse and encounter, and the recognition of a common human search for meaning that can be found beneath all of our diverse cultural, ethnic and religious backgrounds.

I intend zero hyperbole when I say that testing — when broadly defined and broadly applied — is what allows me to maintain and practice those beliefs in an intellectually honest but also emotionally fulfilling manner.

I’ve had many of my own “crucible eras.” I feel I’m in one of those periods right now as I reason about, and within, a technocracy that is adopting a form of computing that can do great harm but also do great good.

The choice of which it will be is entirely up to us.